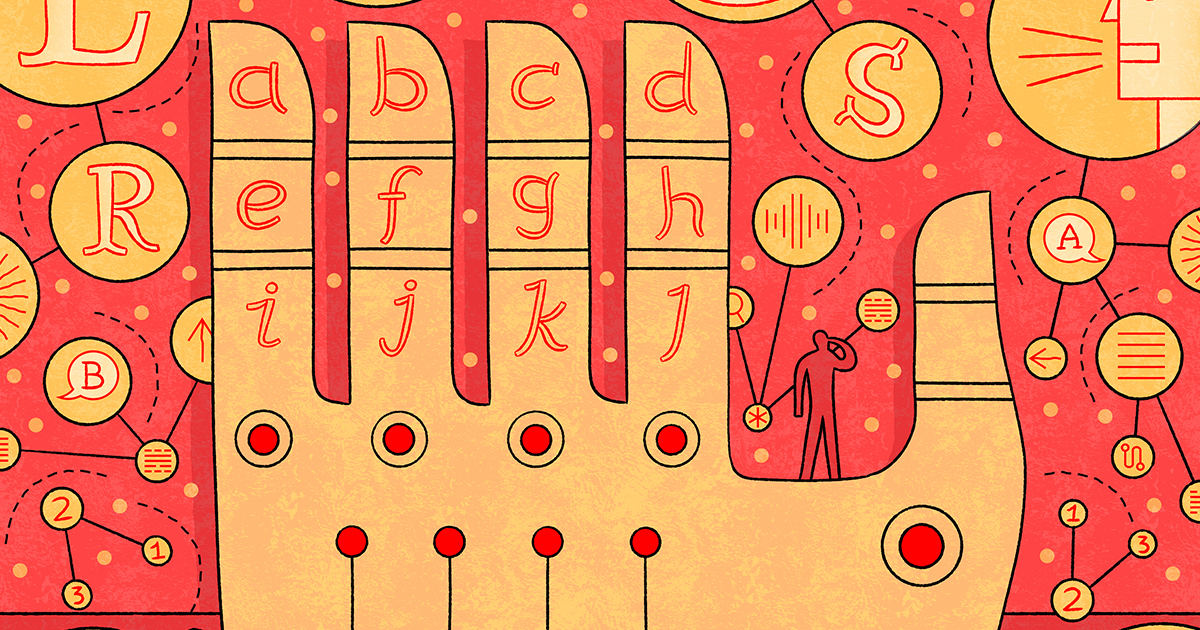

When Computers Learned to Talk Back: A Short Story of Modern NLP

Natural-language processing (NLP) has been around in some form since the late 1940s, but the past eight years rewrote its rule book. Below is the journey, told through the “aha!” moments that researchers themselves recall and explained in everyday language.

1. 2017 – The Transformer Surprise

Epiphany: “Attention Is All You Need” introduced the transformer, a neural-network design with no hand-written grammar rules. It learns statistical patterns in text through an “attention” mechanism that lets every word weigh how relevant every other word in the sentence is to it.

- Why it mattered: It showed that a model treating sentences as long strings of numbers could translate remarkably well, and later transformer models went on to answer questions and summarise too.

- The lesson: Clever design mattered less than people thought; raw pattern-matching at scale could beat handcrafted linguistic tricks.
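For readers who want to see the “attention” idea in concrete form, here is a minimal sketch in plain Python. It assumes toy two-number word vectors and hypothetical helper names (`softmax`, `attention`). It illustrates scaled dot-product attention only, not the full transformer, which adds learned projections, multiple heads, and many stacked layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Three toy "word" vectors attending to one another (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
```

Each output vector is a blend of all the input vectors, weighted by how strongly each word “attends” to the others. Nothing in the code knows about nouns, verbs, or grammar.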

2. 2018-19 – BERT and the Benchmark Gold Rush

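Epiphany: Google’s BERT (and OpenAI’s early GPT) set new records across the standard benchmarks of the day; the BERT paper alone reported state-of-the-art results on eleven NLP tasks. Papers about “why BERT works” flooded conferences.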

- Researchers raced to create harder benchmarks, but progress kept leap-frogging them.

- The lesson: Once a model can read billions of sentences, test scores rise mainly because the model gets bigger, not because anyone invented a new theory.

3. 2020 – GPT-3 and the First Identity Crisis

Epiphany: GPT-3 showed that simply scaling up a transformer produced a model that could write code, draft essays, and solve logic riddles after seeing only a few examples in its prompt, capabilities that few had predicted.

- Graduate students felt months of thesis work could now be replicated “in a weekend.”

- Ethics researchers warned of bias and energy cost (the “Stochastic Parrots” paper, published in early 2021), sparking the field’s first open civil war: Does bigger data lead to real “understanding,” or just better mimicry?

- The lesson: Size unlocks emergent behaviour, good and bad, and academia suddenly trailed industry in resources.
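To make the “few examples in a prompt” idea concrete, here is a small sketch of how such a prompt might be put together. The function name `build_few_shot_prompt` and the Q/A layout are illustrative assumptions, not a format prescribed by GPT-3 or any particular API. The code only builds the text and does not call a model.

```python
def build_few_shot_prompt(examples, query):
    """Join worked examples and a new question into one text prompt."""
    lines = []
    for question, answer in examples:
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
    lines.append(f"Q: {query}")
    lines.append("A:")  # left open for the model to complete
    return "\n".join(lines)

# Two worked examples, then the question we actually want answered.
examples = [
    ("Translate 'cat' to French.", "chat"),
    ("Translate 'dog' to French.", "chien"),
]
prompt = build_few_shot_prompt(examples, "Translate 'bird' to French.")
print(prompt)
```

The model is never retrained here. It picks up the pattern from the examples in the text and continues it, which is why this style of use surprised so many researchers.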

4. End-2022 – ChatGPT: The Asteroid Strike

Epiphany: ChatGPT packaged an LLM in a user-friendly chat box. Almost overnight it made whole sub-fields (e.g., bespoke question-answering systems) look redundant and flooded media, classrooms, and boardrooms.

- Conferences filled with “prompting” papers; many traditional NLP topics felt obsolete.

- Students, professors, and journalists all asked the same question: “What’s left for humans to do?”

- The lesson: An intuitive interface can matter more than another accuracy point—it changes who uses the tech and what they expect.

5. 2024-25 – The Aftershocks

Epiphany: Two parallel realities emerged.

- LLM-ology – studying proprietary giants (GPT-4o, Gemini, Claude) largely via paid APIs.

- Open LLM labs – efforts like the Allen Institute for AI’s OLMo project, which rebuild smaller, transparent models so science can stay reproducible.

- New divides centre on computing power, openness, and global relevance (e.g., building models that recognise a reference to key-lime pie means little in a Hindi textbook).

- The lesson: NLP is no longer a quiet sub-discipline—it sits at the crossroads of massive capital, public policy, and multilingual inclusion.

So … Was This a Paradigm Shift?

- Yes, in practice: A single chat interface now accomplishes tasks that once required dozens of bespoke programs. The unit of work in NLP shifted from “design a model” to “write a prompt.”

- Yes, socially: Researchers must navigate billion-dollar incentives, media scrutiny, and ethical fallout; the field can’t hide in journals anymore.

- Maybe not, in theory: The core idea—learn from large text and transfer that knowledge to new tasks—remains what it was a decade ago. Only the scale and consequences exploded.

Take-Home Messages for Readers

- Scale beats cleverness: Feeding more data and compute to a blunt architecture can unlock abilities that look like reasoning.

- Interfaces matter: ChatGPT’s chat window, not a new algorithm, triggered the public tipping point.

- Debate is healthy and ongoing: From “stochastic parrots” to open-source counter-movements, the community is still arguing, and learning, about meaning, bias, and control.

- Your language is next: Future progress must move beyond English benchmarks to culturally aware models, or large parts of the world will be left out.

- We’re still writing the ending: Whether LLMs are dead-ends or stepping-stones to deeper understanding is an open question. What’s certain is that NLP has forever merged with mainstream AI, and with society itself.

If you’ve ever asked a chatbot for help, you’re already part of this story. The next chapter will depend not just on algorithms, but on the choices researchers, companies, and everyday users make from here on out.