Functional information is context-bound because “function” is always a question posed by an observer.

In large language models, attention is the machinery that answers that question on the fly, sifting through combinatorial possibilities to preserve only the bits that matter for the present goal. Recognising this parallel helps demystify both biological evolution and transformer intelligence: in each, selection acts as an interrogator, and information becomes “functional” only relative to the answer sought.

What drives the rise of complexity in nature? A new interdisciplinary proposal suggests that functional information may hold the key. According to mineralogist Robert Hazen, astrobiologist Michael Wong, and colleagues, complexity in the universe increases over time by a law-like process analogous to the second law of thermodynamics. In their view, evolution is not exclusive to biology but a universal phenomenon: any system with many possible configurations, subject to selection for performing a function, will evolve towards greater complexity. This bold idea – dubbed the “law of increasing functional information” – implies that complex life and even intelligence might emerge wherever conditions allow, rather than being a freak accident. To understand this proposal, we must first ask: what exactly is functional information?

From Classical Information to Biological Function

Classical information theory, pioneered by Claude Shannon and others, measures information as the raw content of a sequence – roughly, how unpredictable its symbols are or how far the message can be compressed – without regard to meaning or function. For example, a long DNA sequence with no repeating patterns has high Shannon information (and high Kolmogorov complexity), whereas a repetitive sequence has lower information. However, biology confronted scientists with a puzzle: many different sequences of DNA or protein can perform the same function in a cell. Traditional measures count each unique sequence as separate information, missing the fact that life cares about what sequences do, not just their form. In other words, biological complexity is tied to function – the meaning of the sequence – something classical theory does not capture.
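
To make the contrast concrete, here is a minimal Python sketch comparing a random and a repetitive DNA string. It is an illustration only: the sequence length, the fixed random seed, and the use of zlib's compressed size as a rough stand-in for Kolmogorov complexity (which cannot be computed exactly) are all assumptions of this example, not part of the original argument.

```python
import math
import random
import zlib
from collections import Counter


def shannon_entropy_per_symbol(seq: str) -> float:
    """Entropy of the single-symbol frequencies, in bits per symbol."""
    counts = Counter(seq)
    total = len(seq)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def compressed_size(seq: str) -> int:
    """Bytes after zlib compression: a crude proxy for Kolmogorov complexity."""
    return len(zlib.compress(seq.encode("ascii"), 9))


rng = random.Random(0)  # fixed seed so the example is repeatable
length = 4000           # arbitrary length chosen for illustration
random_dna = "".join(rng.choice("ACGT") for _ in range(length))
repeat_dna = "ACGT" * (length // 4)

for name, seq in [("random", random_dna), ("repetitive", repeat_dna)]:
    print(name, round(shannon_entropy_per_symbol(seq), 3), compressed_size(seq))
```

Because all four letters are equally common in both strings, the per-symbol frequency entropy is about 2 bits in each case; only the order-sensitive compressed size reveals the repetition. Neither number says anything about what either sequence would do in a cell, which is the gap functional information is meant to fill.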

Jack Szostak, a biologist, introduced functional information in 2003 to address this gap. Szostak argued that complexity should be measured not by the literal length or randomness of a sequence, but by how rare it is to find a sequence that performs a given function. This was a shift from thinking about information as mere data to thinking about information in context – specifically, the context of a defined function.

Defining Functional Information

Functional information (FI) quantifies the amount of information required for a system to perform a specific function to a given degree. More practically, it measures how improbable it is, in the space of all possible configurations, to stumble upon one that achieves at least a certain level of function. Formally, Hazen and colleagues define functional information for a function $x$ at performance threshold $E_x$ as:

$I(E_x) = -\log_2 [F(E_x)]$,

where $F(E_x)$ is the fraction of all possible configurations of the system that possess a degree of function ≥ $E_x$. This definition means that if only a tiny fraction of configurations can accomplish the function (i.e. $F(E_x)$ is very small), then the functional information is high – reflecting a highly specific, complex requirement. Conversely, if many configurations work, the functional information is low. In short, functional information is the negative base-2 logarithm of the probability that a random configuration will meet or exceed a specified functional performance, so it is measured in bits: each halving of that probability adds one bit. It ties complexity to function: a system is “complex” in this sense only to the extent that achieving a particular function is rare in the space of possibilities.
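
The definition can be checked by brute force on a space small enough to enumerate. The sketch below is a toy, not a model of real chemistry: the 8-letter `TARGET` sequence and the "count matching positions" measure of function are hypothetical choices made purely so that every configuration can be scored.

```python
import math
from itertools import product

ALPHABET = "ACGU"
TARGET = "GGAUCCAU"  # hypothetical ideal sequence, assumed for illustration


def degree_of_function(seq: str) -> int:
    """Toy stand-in for the degree of function: positions matching TARGET."""
    return sum(a == b for a, b in zip(seq, TARGET))


# Score every one of the 4**8 = 65,536 possible sequences once.
scores = [
    degree_of_function("".join(letters))
    for letters in product(ALPHABET, repeat=len(TARGET))
]


def functional_information(threshold: int) -> float:
    """I(E_x) = -log2 F(E_x), written as log2(total / hits) to avoid -0.0."""
    hits = sum(score >= threshold for score in scores)
    return math.log2(len(scores) / hits)


for e_x in range(len(TARGET) + 1):
    print(e_x, round(functional_information(e_x), 2))
```

At a threshold of zero every sequence qualifies and the functional information is 0 bits; demanding a perfect match leaves one sequence in 65,536, which is 16 bits (2 bits per position). Raising the threshold in between moves the value monotonically from one extreme to the other.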

An illustrative example comes from biochemistry. Consider a short RNA strand (an aptamer) that binds tightly to a target molecule. There are astronomically many possible RNA sequences of that length. If only one particular sequence (or very few) can bind the target well, that sequence has high functional information – it encodes a very specialized function. If, on the other hand, thousands of distinct RNA sequences can all bind the target effectively, then any given one is less special (lower FI). In practice, one can estimate FI by experimental selection. Szostak demonstrated this by creating a pool of random RNA sequences and selecting those that bind a target; as he enriched for better binders through iterative rounds of mutation and selection, the functional information of the winning aptamers increased toward the theoretical maximum. In each round, it became harder for a random sequence to match the top performers, indicating rising FI as function improved. This experiment showed that evolutionary selection can drive up functional information, concentrating on sequences that are information-rich with respect to the chosen function.

It is important to note that functional information is inherently contextual. We must specify which function and what threshold of performance we care about. Change the function or the environment, and the set of effective configurations – hence the FI – will change. For example, an RNA sequence’s FI for binding molecule A will differ from its FI for binding molecule B. This context-dependence is not a flaw but a reflection of reality: information is only “functional” with respect to a defined goal or role. Unlike mass or length, FI is not an intrinsic property of an object alone, but a property of an object in a given context of function. This nuance underlies both the power of the concept and the challenges in generalizing it, as we’ll see.
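
A small sketch can show how the same object carries different functional information under different functions. Everything here is hypothetical: the two "binding motifs" and the match-counting score are invented for illustration, and the closed-form count simply tallies how many length-8 sequences over a 4-letter alphabet match a motif in at least a given number of positions.

```python
import math


def matches(seq: str, target: str) -> int:
    """Toy degree of function: number of positions where seq equals target."""
    return sum(a == b for a, b in zip(seq, target))


def fi_for_match_threshold(length: int, threshold: int, alphabet_size: int = 4) -> float:
    """FI of 'at least `threshold` matching positions' in the toy model."""
    hits = sum(
        math.comb(length, j) * (alphabet_size - 1) ** (length - j)
        for j in range(threshold, length + 1)
    )
    return math.log2(alphabet_size ** length / hits)


sequence = "GGAUCCAU"
target_a = "GGAUCCAU"  # hypothetical motif for binding molecule A
target_b = "GGAUAAAA"  # hypothetical motif for binding molecule B

for label, target in [("A", target_a), ("B", target_b)]:
    level = matches(sequence, target)
    fi = fi_for_match_threshold(len(sequence), level)
    print(label, level, round(fi, 2))
```

Judged against motif A the sequence is a perfect match and scores 16 bits; judged against motif B it matches only 5 of 8 positions and scores about 5.2 bits. The sequence has not changed, only the question being asked of it.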

Evolutionary Increases in Functional Information

One of the remarkable implications of functional information is that when a system undergoes selection for a function, its functional information tends to increase over time. In evolutionary processes, whether in a computer simulation, an ecosystem, or even chemical reactions, selection weeds out configurations that don’t work and amplifies those that do. This means the surviving configurations occupy an ever-smaller fraction of the possible space (they are the “special” ones), which translates to rising FI.

Hazen and Szostak explored this in 2007 by evolving digital organisms in an artificial life simulation. The digital “genomes” could mutate and were selected for their ability to perform computational tasks (a defined function). They observed that as the virtual organisms evolved better performance, the functional information of the system increased spontaneously. In other words, evolution naturally drove the population towards configurations that were increasingly hard to find by chance, because those configurations performed the function well. The complexity (in the functional sense) grew over time through selection.
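
The qualitative behaviour can be reproduced with a very small variation-and-selection loop. This is not the artificial-life platform used in the 2007 study; it is a hedged toy in which the target string, mutation rate, population size, and "keep the top 20%" rule are all assumptions chosen for brevity. Functional information is computed exactly for the best performer in each generation.

```python
import math
import random

ALPHABET = "ACGU"
TARGET = "GGAUCCAUGCAU"  # hypothetical optimum, assumed for illustration


def degree_of_function(seq: str) -> int:
    """Toy function: number of positions matching TARGET."""
    return sum(a == b for a, b in zip(seq, TARGET))


def functional_information(threshold: int) -> float:
    """Exact FI for 'at least `threshold` matches' in this toy landscape."""
    n = len(TARGET)
    hits = sum(math.comb(n, j) * 3 ** (n - j) for j in range(threshold, n + 1))
    return math.log2(4 ** n / hits)


def mutate(seq: str, rng: random.Random, rate: float = 0.05) -> str:
    """Redraw each position with probability `rate` (may redraw the same letter)."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else ch for ch in seq)


def evolve(generations: int = 40, pop_size: int = 200, seed: int = 1) -> list:
    rng = random.Random(seed)
    population = ["".join(rng.choice(ALPHABET) for _ in TARGET) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=degree_of_function, reverse=True)
        history.append(functional_information(degree_of_function(population[0])))
        survivors = population[: pop_size // 5]  # selection: keep the top 20%
        offspring = [mutate(rng.choice(survivors), rng)
                     for _ in range(pop_size - len(survivors))]
        population = survivors + offspring       # survivors are kept unmutated
    return history


history = evolve()
print(round(history[0], 2), "->", round(history[-1], 2))
```

Because the survivors are carried over unchanged, the best performer never gets worse, so the recorded functional information can only stay flat or rise, up to the 24-bit ceiling of this 12-position landscape.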

Crucially, this principle does not apply only to biology or explicit “Darwinian” scenarios. Any system that explores many configurations and preferentially retains those that fulfill a function will accumulate functional information. Hazen and colleagues argue that this applies to chemical and physical systems too. For instance, consider the mineral evolution of Earth: on the early Earth, only a limited number of simple minerals existed, but over billions of years the number and diversity of minerals expanded dramatically (over 5,000 mineral species today) as new chemical processes arose. This can be viewed as an increase in functional information in the mineral realm. Many possible crystal structures could form from elements in Earth’s crust, but the ones that actually formed are those favored by stability or by the presence of agents such as water and biology. Hazen’s team showed that the complexity of Earth’s mineralogical record increased over time, indicating rising FI even in this non-biological system. In fact, they can quantify a form of functional information for minerals by considering that only certain combinations of elements occur as stable minerals under Earth’s conditions – those configurations were “selected” by geochemical processes from a vast space of possibilities.

Even on a cosmic scale, complexity has ratcheted up as the universe evolves. Right after the Big Bang, matter was in a very simple state (mostly hydrogen and helium). As the first stars formed, nuclear fusion in their cores forged heavier elements like carbon and oxygen. When those stars died in supernova explosions, they spread these new elements, allowing later generations of stars to produce even more elements. Over cosmic time, the periodic table filled out: from just a couple of light elements, we now have nearly a hundred naturally occurring elements. This is an increase in chemical complexity or “information” – the diversity of building blocks – enabled by the selection of stable nuclear configurations in stars. Only certain atomic nuclei are stable or can be formed by fusion; the universe preferentially contains those, much as selection for function retains only the configurations that work. In Hazen and Wong’s view, this is another manifestation of rising functional information: the universe explored many states (through star formation and destruction) and accumulated more complex, structured matter as a result.

These examples illustrate a unifying idea: evolution by selection for function is a general process that generates complexity. Biological evolution via natural selection is just one special case, albeit a particularly rich one. But the formation of minerals, the synthesis of elements in stars, the development of complex chemistry in planetary environments – all can be seen through this lens. Whenever there are many possible states and a process that “chooses” some based on some functional criterion (stability, energy efficiency, etc.), the system can evolve greater complexity (higher FI) over time.

The Law of Increasing Functional Information

Building on these insights, Hazen, Wong, and collaborators have proposed a new overarching law of nature to describe this tendency. The Law of Increasing Functional Information can be summarized as follows:

- Any sufficiently complex system will evolve (increase in functional complexity) if it consists of many components that can be arranged in a multitude of ways, and if these configurations are subject to selection for one or more functions.

In plainer terms, given variety and selective criteria, the system will develop greater order and complexity. The authors characterize the systems this applies to in three points:

- Many components, many arrangements: The system is made of numerous interacting parts (atoms, molecules, cells, etc.) that can combine in countless ways.

- Generation of variation: Natural processes (e.g. random mutations, chemical reactions, star formation and explosions) continually generate a huge variety of different configurations of those parts.

- Selection for function: Only a small fraction of those configurations “work” well – i.e. fulfill some function or survive under the given conditions – and those few are preferentially retained or persist.

Whenever these conditions are met, the law says, the system will evolve toward “greater patterning, diversity, and complexity” over time. This is evolution in the broadest sense: not just biological descent with modification, but any process where novel configurations appear and are selectively retained. Notably, this law does not require life or reproduction per se. It puts life’s evolution on a continuum with cosmic evolution.

The concept of “selection for function” is central. In biology, Darwinian fitness (survival and reproduction) is the function that shapes which variants persist. But Hazen and Wong broaden this to at least three categories of functions in nature:

- Stability – The simplest function is persistence: stable configurations tend to remain. For example, certain minerals are more stable under Earth’s conditions and thus dominate, and in stars certain nuclear configurations (like iron) are extremely stable, marking an endpoint of fusion. Stability is a kind of “function” that selects which states endure.

- Dynamic Persistence (Energy Flow) – Systems that can harness or sustain flows of energy tend to continue. In chemistry or planetary environments, reactions that can keep going (perhaps autocatalytically or with an energy source) will outlast those that exhaust themselves. In biology, metabolism is an example – a network of reactions that persists by fueling itself. So this second functional criterion is about maintaining activity over time, not just static stability.

- Novelty – Perhaps the most intriguing function is the generation of novel configurations or capabilities. Evolving systems often have a drive (or at least a tendency) to explore new states that were not accessible before. This can lead to emergent behaviors or properties. In biological evolution, novelty is seen in major innovations – e.g. the evolution of photosynthesis (organisms harnessing a completely new energy source, sunlight), the emergence of multicellular life (cells cooperating in new ways), the development of flight or complex brains. Each such novelty is a “startling new behavior or characteristic” that opens up fresh possibilities. The law suggests that the exploration of novelty is itself a functional outcome that tends to be selected for, because it can unlock new resources or niches. In short, systems that get more complicated can sometimes do things simpler systems couldn’t – and if those new things confer any advantage or persistence, they will be favored. As one researcher put it, “An increase in complexity provides the future potential to find new strategies unavailable to simpler organisms.”

If we look at nature through this lens, we see these principles in action everywhere. The universe “selects” stable atoms and star systems, which then enable new chemistry. On early Earth, stable minerals formed the substrate; then chemical cycles emerged (dynamic persistence); eventually life burst forth and began generating endless novelties (from oxygen-producing microbes to thinking animals). Each stage built on the previous, increasing the overall functional information in the system.

Robert Hazen offers an intuitive description: imagine a system of atoms or molecules that can exist in trillions of different arrangements. Only a tiny fraction of those arrangements will “work” – that is, have some useful function or stability. Naturally, those are the ones that tend to stick around. So nature prefers those functional configurations. Over time, by preferentially accumulating the rare configurations that work, the system becomes more structured and complex. This captures the essence of why functional information rises: the space of possibilities is vast, but selection carves out an increasingly ordered subset.

It’s important to clarify that this law is statistical and qualitative at this stage. It doesn’t provide a simple formula to predict how fast complexity increases or exactly which novelties will appear. Rather, it posits a general directionality in natural processes: as time goes on, and as long as new configurations are sampled and tested against some criterion of “functionality,” the maximum achieved complexity tends to grow. In that sense, it’s like an “arrow of complexity” complementing the arrow of entropy. Entropy (disorder) increases overall in isolated systems, but this new principle says that localized pockets of matter can spontaneously evolve higher levels of order and function, given the right conditions and flows of energy, because selection pushes them that way. Life is the prime example of this – a pocket of locally decreasing entropy (increased order), paid for by a larger entropy increase in its surroundings and made possible by energy flow and selection – but not the only example.

Major Transitions and Jumps in Complexity

While functional information often increases gradually as a system adapts, history shows that complexity sometimes jumps in sudden leaps. Biological evolution, for instance, has seen pivotal transitions where complexity soared in a relatively short time: the origin of the first cells with nuclei (eukaryotes), the advent of multicellular life, the Cambrian explosion of diverse body plans, the emergence of central nervous systems, and the rise of human intelligence. Each of these events opened up a “new level” of organization. Interestingly, Hazen and Wong’s studies found a parallel in their models: the increase of functional information was not always smooth but occurred in steps, with plateaus punctuated by rapid gains. This suggests that the major evolutionary transitions might be an expected outcome of how functional information accumulates.

How can we understand these jumps? Wong offers a helpful metaphor: during a transition, the evolving system accesses a new “floor” of possibilities, a higher level that was previously unreachable. On this new floor, completely novel configurations and functions become accessible, which could not even be imagined at the lower level. For example, before life began, chemistry was limited to what molecules could do on their own. Once self-replicating systems and metabolism appeared, entirely new dynamics (like competition, mutation, ecosystems) came into play – the “rules of the game” changed. Later, once multicellular organisms arose, single cells alone could not predict phenomena like organs or coordinated behaviors. Each major leap creates a new landscape of functional opportunities.

In these leaps, often the criteria of selection themselves shift. Early in life’s origin, perhaps the key function was simple stability – molecules that lasted longer or were more abundant tended to persist. Once metabolism and autocatalytic cycles (self-sustaining chemical networks) appeared, dynamic stability (ongoing reaction networks) mattered more than the longevity of any single molecule. Later, when organisms could move or think, completely new functions like navigation, communication, or social cooperation became relevant. Each transition thus changes what “functional” means for further evolution. Complexity builds upon complexity.

Researchers like Ricard Solé have noted that these transitions resemble phase transitions in physics – abrupt, system-wide changes where new emergent order appears. Just as water freezing into ice or iron becoming magnetized are sudden collective shifts, the emergence of life or consciousness might be seen as collective phase changes in information processing. At those critical points, “everything changes, everywhere, all at once,” as Solé puts it. This analogy supports the idea that there could be a kind of general physics of evolution, where systems follow common principles when undergoing transformation to higher complexity. Functional information provides a quantitative handle on these phenomena: a big jump in FI would signal a phase transition in the system’s organization or function.

Another profound aspect is the open-endedness of this process. With each increase in functional complexity, new functions create new “rules” and possibilities for the future. Stuart Kauffman and other complexity theorists emphasize that evolution doesn’t just find answers to known problems – it poses new questions by creating new functionalities. The biosphere, in Kauffman’s words, “is creating its own possibilities” as it grows in complexity. Three billion years ago, concepts like “predator” or “flight” or “song” did not even exist; life had to invent the prerequisites for those functions through a series of innovations. This means that we cannot predict all future evolutionary possibilities because many of them don’t exist yet – they will be unlocked by the emergence of intermediate forms and functions.

Physicist Paul Davies and colleagues have even likened this ever-expanding space of possibilities to Gödel’s incompleteness theorem. In formal systems, Gödel showed there will always be true statements that cannot be derived from the existing rules – you’d need new axioms to prove them. Analogously, in evolution, whenever life achieves a new level of complexity, it’s as if new “axioms” or rules get added (new behaviors, new interactions), expanding the phase space of what can happen. The process is self-referential: the products of evolution (new organisms, new traits) change the conditions for future evolution. Non-living systems show no comparable feedback: no matter how many stars you have, they don’t change the fundamental rules of gravity by introducing new kinds of star. But life, especially intelligent life, can perform experiments, alter environments, and create entirely novel niches. Thus, while the law of increasing functional information might predict a general trend toward complexity, it doesn’t imply a predetermined path – evolution is full of creative surprises. As Davies put it, life “evolves partly into the unknown”, always venturing beyond what prior states could foresee.

In summary, major evolutionary transitions can be viewed as natural outcomes of a complexity-driven universe. Functional information tends to accumulate, but not always linearly – it can reach tipping points where a qualitatively new world is born. This helps us appreciate why things like consciousness or technological civilization seem to have appeared abruptly and rarely: they are phase changes in complexity. The new law suggests such leaps would eventually happen given the right conditions, because each step of functional information growth sets the stage for the next. If intelligence arose only once on Earth, it may be simply because once it arose, it changed the game (and perhaps precluded another separate origin on the same planet) – not because it was nearly impossible in principle.

Challenges and Criticisms

The concept of functional information and its associated “law” are inspiring, but they raise tough questions and criticisms. One issue is the measurement problem: by definition, to compute functional information you need to know what fraction of all possible configurations of a system achieves a given function. This is feasible for a short DNA or RNA sequence in a lab experiment (where one can sample many variants and estimate that fraction), but becomes utterly intractable for complex systems. For even a single living cell, the space of possible molecular configurations is far too large to enumerate or to sample representatively, so in practice $I(E_x)$ cannot be calculated exactly. Critics note that if you can’t quantify something well, it’s hard to call it a rigorous law. The functional information of most real-world systems is not just hard to measure – it’s unknowable in any complete sense, because you’d have to explore every alternative the system could take (which is combinatorially explosive).
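
A rough sense of the scale of the problem, and of what sampling can and cannot deliver, comes from a short calculation. The sketch below is an illustration under stated assumptions, not a method proposed by Hazen's team: it counts the sequences of a 100-letter, 4-symbol polymer, and it applies a standard zero-hits binomial bound, assuming the samples are independent uniform draws, to show that a failed search yields only a lower bound on $I(E_x)$.

```python
import math


def configuration_count(alphabet_size: int, length: int) -> int:
    """Number of distinct linear sequences of the given length."""
    return alphabet_size ** length


def fi_lower_bound_from_null_result(samples: int, confidence: float = 0.95) -> float:
    """Lower bound on I(E_x), in bits, when `samples` random draws all fail.

    With the stated confidence, F(E_x) < p_max, where p_max solves
    (1 - p_max) ** samples = 1 - confidence.
    """
    alpha = 1.0 - confidence
    p_max = -math.expm1(math.log(alpha) / samples)
    return -math.log2(p_max)


print(f"{configuration_count(4, 100):.3e} possible 100-mers")
for n in (10**3, 10**6, 10**9):
    print(n, "failed samples ->", round(fi_lower_bound_from_null_result(n), 2), "bits at least")
```

A million failed random trials establish only that the functional information exceeds roughly 18 bits, and each thousandfold increase in sampling effort adds about 10 bits to the bound. Against a space of 4^100 sequences (200 bits), exhaustive or even representative sampling is out of reach, which is the critics' point.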

Additionally, functional information depends on context and choice of function, which some argue makes it somewhat subjective. In a non-living context, how do we decide what function to consider? For minerals, Hazen chose “persisting in a given environment” as an implicit function (stability). But one might ask: is that a universal rule or just a retrospective description of what happened on Earth? If one changes the environment or criteria, the “selected” set could differ. Detractors worry that by allowing the definition of function to vary case by case, the idea loses the crisp universality of, say, the laws of thermodynamics.

Sara Walker, who champions an alternative complexity framework (assembly theory), has expressed skepticism that the Law of Increasing Functional Information is testable in a scientific sense. What experiment, she asks, could falsify it or verify it? To test a law, one would want to measure functional information over time in a system and see if it increases. But given the measurement issue, this is problematic. We might detect proxies – for instance, in the laboratory we might run digital evolution or chemical evolution experiments and observe rising complexity – but critics say this falls short of a general proof. Walker remains “skeptical until some metrology is done”, essentially challenging Hazen’s team to propose a clear observable or quantity that can be tracked objectively as “functional information” in a given system.

Another criticism is whether this idea is really a new law of nature or just a reframing of known principles. Detractors might say: of course stable things persist (that’s just thermodynamics) and of course complex life arose (that’s Darwinian evolution). By stretching “evolution” to cover stars and minerals, are we adding any predictive power, or just using metaphor? Hazen and Wong counter that there is something gained: a unifying view that highlights a common cause behind disparate processes. But to convince skeptics, the theory must yield insights or predictions that more conventional science might miss.

Hazen acknowledges the difficulties, but he argues that even without exact calculation, the concept of functional information can guide understanding. He points out an analogy: we cannot precisely solve the $N$-body gravitational problem for many interacting planets or asteroids – it’s “impossible to calculate” the exact trajectories long-term – yet Newton’s laws still let us understand and approximate the system enough to send spacecraft through the asteroid belt. Similarly, we might not compute a cell’s functional information down to the last bit, but we can qualitatively discuss which systems have more or less FI, or how FI could change under different conditions. In Hazen’s view, lack of precise measurability does not invalidate the existence of a real phenomenon. We often work with quantities in science (like “complexity” itself, or entropy in certain cases) that are hard to measure directly but can be reasoned about or bounded.

One avenue to make the idea more testable is to find signatures of selection for function in complex chemical mixtures or planetary environments. Wong suggests that life’s presence might be inferred from anomalies in chemical complexity. For example, on Earth, out of the enormous number of organic molecules that could exist, life uses a relatively narrow selection (with particular patterns, like homochirality and recurring building blocks). There is far more of certain molecules (like glucose or amino acids) than random chemistry would produce. This is because life selects and amplifies specific functional molecules. If we found, say, an alien ocean with an unusual abundance of a particular complex molecule that thermodynamics alone wouldn’t favor, it could be a biosignature indicating selection for function. Interestingly, assembly theory – the alternative by Walker and Lee Cronin – makes a similar prediction: if you detect molecules with a very high assembly index (meaning they require many steps to build), they are likely products of biological or other evolved processes, not chance chemistry. In this way, different theoretical frameworks agree that life betrays itself by the complexity of the chemical information it produces.

It’s also worth noting that the law of increasing functional information, as stated, is unfalsifiable only if misinterpreted. It does not say “complexity always increases everywhere” – clearly there are local reversals and extinctions. It says that if the preconditions of variation and selection are present, then complexity will tend to increase. If one really wanted to challenge it, one could look for systems where those conditions hold, yet no increase in functional complexity is observed over long timescales. So far, examples are lacking – but that may be because we haven’t been looking or because life is the only vigorous example in our reach. This is an ongoing debate, essentially asking: is the rise of complexity as inevitable as it seems, or are we generalizing from one data point (Earth)? The Hazen-Wong team has tried to generalize by examining minerals, planetary chemistry, and simulations, but more work is needed to nail down the law quantitatively.

Alternative Approaches and Future Directions

The discussion around functional information is part of a broader movement to understand “complexity science” and possibly discover new laws that govern it. One alternative framework, already mentioned, is assembly theory, proposed by Lee Cronin and Sara Walker. Assembly theory takes a different angle: instead of focusing on function, it looks at the structural complexity of objects – specifically, how many steps of combining simpler subunits are needed to produce a given object (its assembly index). An object like a complex organic molecule that requires many synthetic steps (cuts and joins of smaller molecules) has a high assembly index. This measure can be experimentally estimated by fragmenting the molecule and analyzing its pieces. The theory posits that objects with a high assembly index are extremely unlikely to form spontaneously, so if we observe them (e.g. in samples from another planet), it likely indicates an evolutionary process was at work (a potential biosignature).

While assembly theory differs from functional information in method, both approaches attempt to quantify “organized complexity” in a way that captures life’s signature. Both acknowledge that living systems occupy a tiny, special subset of all possible configurations – whether defined by function or by complex assembly. Walker agrees that new laws for living (or evolving) systems are needed, even if they may differ from standard physics. Her caution on the FI law is mostly about testability, not dismissing the core idea that evolution has a law-like character. In fact, multiple research groups converging on these questions suggests a growing consensus that information and complexity should be considered fundamental aspects of nature, much like energy or entropy.

Hazen’s group remains actively engaged with scientists across disciplines – from economists to cancer researchers – who see parallels in their systems. The notion of “selection for function” might, for instance, shed light on cancer evolution (where tumor cells are selected for functions like rapid growth or drug resistance in a non-Darwinian but adaptive way). It might inspire new ways to analyze cultural or technological evolution, where ideas or innovations get selected for their functions in society. The law of increasing functional information, if valid, would mean that wherever variation and selection exist, we should expect a rise in organized complexity – be it in languages, economies, or even knowledge itself. These are speculative extensions, but they show how the concept is stimulating fresh thinking in many fields. Researchers are now challenged to find quantitative metrics and empirical studies to further support (or falsify) this paradigm.

Implications for Life in the Universe

Perhaps the most profound implication of functional information is for the question of life beyond Earth. If complexity naturally accumulates given the right conditions, then the emergence of life might be a common cosmic occurrence, not a one-in-a-trillion fluke. In other words, life (and even intelligence) could be inevitable on wet, rocky planets over long timescales, rather than freakishly improbable. As Wong and Lunine argue, if increasing functional complexity is driven by a law, “we might expect life to be a common outcome of planetary evolution.” This stands in contrast to the view of the late biologist Ernst Mayr, who felt that even if simple life arises, the path to human-like intelligence was so contingent and unlikely that we are probably alone. The new framework suggests instead that while the timing of key transitions is unpredictable, the trend toward greater complexity is baked into the fabric of nature. Under similar selection pressures, independent biospheres might undergo analogous major transitions (though not identical species, of course). This doesn’t guarantee that the galaxy is teeming with star-faring civilizations – many other factors (environmental catastrophes, etc.) come into play – but it shifts the perspective to a more optimistic one: given life’s start, complexity (up to and including intelligence) has a propensity to escalate.

Another implication is philosophical: it elevates information to a role as a fundamental ingredient of reality. Wong mused that “information itself might be a vital parameter of the cosmos, similar to mass, charge and energy.” In a sense, functional information links the abstract realm of information theory to the concrete emergence of purpose and function in the universe. This idea resonates with efforts in physics and cosmology to understand whether the universe has an inherent tendency to create organized structures (sometimes discussed under concepts like “self-organization” or even more teleological notions). Without invoking any mysticism, the law of increasing FI provides a naturalistic explanation for a kind of directionality or progressive structure-building in the cosmos, woven from the interplay of randomness and selection.

For humanity, recognizing such a law can influence how we see our role. If indeed evolution is a universal process extending to culture and technology, then our creative endeavors and the growth of knowledge might also be viewed through functional information. There is a feedback loop between our consciousness and the evolutionary process: Hazen points out that once cognition enters the picture, evolution can become self-directed to an extent (the “watchmaker” is no longer blind). Humans, in developing technology, medicine, and science, are effectively accelerating the accumulation of functional information, consciously recombining ideas and searching solution spaces. Some thinkers suggest this could lead to an open-ended future, where life’s complexity continues to surge (possibly via AI, space colonization, etc.), in line with the cosmic principle. These remain speculative, but they demonstrate how a principle like this can inform big-picture narratives about the future.

Conclusion

Functional information is a concept that reframes how we define and measure complexity by rooting it in function and selection. In simple terms, it asks: “Out of all the possible ways this system could be arranged, how special are the ones that actually achieve something?” A high functional information means very special – only rare configurations succeed – indicating a lot of complexity tailored to a purpose. Born in the context of biopolymers and evolution experiments, this idea has grown into a worldview in which evolution is a universal cosmic process, not just an Earthly anomaly. The proposed Law of Increasing Functional Information encapsulates this view, asserting that whenever diverse configurations compete and are selected by function, the winners will drive the system to new heights of organized complexity.

This perspective elegantly links the physical and the biological, showing how stars forging elements, minerals diversifying in Earth’s crust, and organisms evolving new capabilities can all be seen as instances of the same fundamental phenomenon – selection for function leading to complexity. It offers a possible answer to “Why does the universe become more complex over time?” and invites us to consider information (especially functional information) as a quantity as pivotal as energy in describing nature’s trajectory.

However, being a new and interdisciplinary idea, it faces healthy skepticism. The challenge is to make these notions testable and quantitative, and to avoid overextending metaphors. Ongoing research aims to find tangible metrics (like chemical biosignatures of selection) and to apply the framework to different systems, from tumors to economies, to see if it yields explanatory power. Even if the exact “law” evolves or is refined, the effort to integrate evolutionary concepts into physics is itself valuable. It echoes the development of thermodynamics in the 19th century – where studies of steam engines led to deep truths about time and order in the universe. We may likewise find that studying functional information and complexity will unlock “hidden truths” about the direction of time, the emergence of purpose, and the fate of complex systems.

In the end, what is functional information? It is a measure, a law, and perhaps a guiding principle that explains how simple parts organize into complex wholes by the discovery of function. It tells us that complexity is not an accident but arises naturally when the universe explores possibilities and chooses what works. This idea elevates our understanding of evolution from a biological theory to a cosmic principle – a principle that might ultimately help us find our place in a complex, information-rich universe. As truth-seekers, appreciating functional information moves us one step closer to discerning the eternal patterns through which matter and life co-create, showing that the rise of complexity is woven into the fabric of reality itself.

Sources: Functional information defined and discussed in Hazen et al., PNAS 2007; Hazen & Wong's Law of Increasing Functional Information in PNAS 2023 and related commentary; Quanta/Wired coverage by Philip Ball for broader context and quotes; additional insights on complexity and evolution from Sci.News and Reuters.

Coda - Context‐Dependence Revisited

Functional information (FI) is never absolute: it is probability mass that the universe “carves out” around a chosen goal. Mathematically,

$I(E_x) = -\log_2 F(E_x)$ measures how rarely a randomly chosen configuration reaches or exceeds a performance threshold $E_x$, where $F(E_x)$ is the fraction of all possible configurations that do so. It must be evaluated inside a specified environment. Change the goal or the conditions and the rarity changes, so the same molecule, circuit, or planetary mineral can jump from “high-FI” to “low-FI” or vice versa. This is why Szostak’s RNA aptamer that binds caffeine has a large FI for caffeine binding but almost none for, say, ATP binding; the search space and success set are different (en.wikipedia.org).
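
To make the formula concrete, here is a minimal sketch that enumerates a toy configuration space and computes FI at two thresholds. The 8-bit strings and the "count of 1-bits" performance score are illustrative assumptions, not anything taken from Hazen's papers; only the formula itself comes from the text above.

```python
import math
from itertools import product

def functional_information(n_success: int, n_total: int) -> float:
    """I(E_x) = -log2 F(E_x), with F(E_x) = n_success / n_total."""
    if n_success <= 0:
        raise ValueError("no configuration meets the threshold")
    return -math.log2(n_success / n_total)

# Toy configuration space (assumption): all 256 strings of 8 bits.
configs = list(product((0, 1), repeat=8))

def fi_for_threshold(min_ones: int) -> float:
    # Hypothetical performance score: the number of 1-bits in the string.
    hits = sum(1 for c in configs if sum(c) >= min_ones)
    return functional_information(hits, len(configs))

fi_easy = fi_for_threshold(4)  # 163 of 256 configurations succeed
fi_hard = fi_for_threshold(8)  # only 1 of 256 succeeds
print(f"threshold 4: {fi_easy:.3f} bits, threshold 8: {fi_hard:.3f} bits")
```

Raising the threshold shrinks the success set, so the same space yields about 0.65 bits at the lenient threshold and exactly 8 bits at the strictest one.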

The Quanta article makes the same point at the planetary scale: as Earth’s surface chemistry, pressure and biology shifted, the subset of minerals that could persist (the function being “long-term stability under present conditions”) changed, so their aggregate FI had to be recalculated epoch by epoch (quantamagazine.org).

Who supplies the context? Observers and selectors

In the laboratory the observer supplies the function (“bind my target with $K_D \le 10\ \text{nM}$”).

In nature, the environment plays the role of an implicit observer: a desert “asks” minerals to survive desiccation; a tumor micro-environment “asks” cancer cells to outrun immune attack.

Whichever entity imposes the performance test is effectively measuring functional information. Without that reference frame, FI is undefined — just like “fitness” is meaningless without an ecological niche.
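
The observer dependence can be illustrated with a small sketch in which one fixed configuration is scored by two different "observers". Both scoring functions (`count_ones`, `count_zeros`) and the 8-bit space are hypothetical stand-ins chosen only to keep the arithmetic checkable.

```python
import math
from itertools import product

# Toy configuration space (assumption): all 256 strings of 8 bits.
configs = list(product((0, 1), repeat=8))

def fi_of(config, score) -> float:
    """FI of `config` relative to `score`: -log2 of the fraction of all
    configurations that perform at least as well as `config` does."""
    threshold = score(config)
    hits = sum(1 for c in configs if score(c) >= threshold)
    return -math.log2(hits / len(configs))

# Two hypothetical observers, each imposing a different performance test.
def count_ones(c):
    return sum(c)

def count_zeros(c):
    return len(c) - sum(c)

x = (1, 1, 1, 1, 1, 1, 1, 0)
fi_ones = fi_of(x, count_ones)    # 9 of 256 configurations do as well
fi_zeros = fi_of(x, count_zeros)  # 255 of 256 configurations do as well
print(f"FI under count_ones: {fi_ones:.2f} bits")
print(f"FI under count_zeros: {fi_zeros:.4f} bits")
```

Nothing about `x` changes between the two calls; only the test applied to it does, and its FI moves from roughly 4.8 bits to nearly zero.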

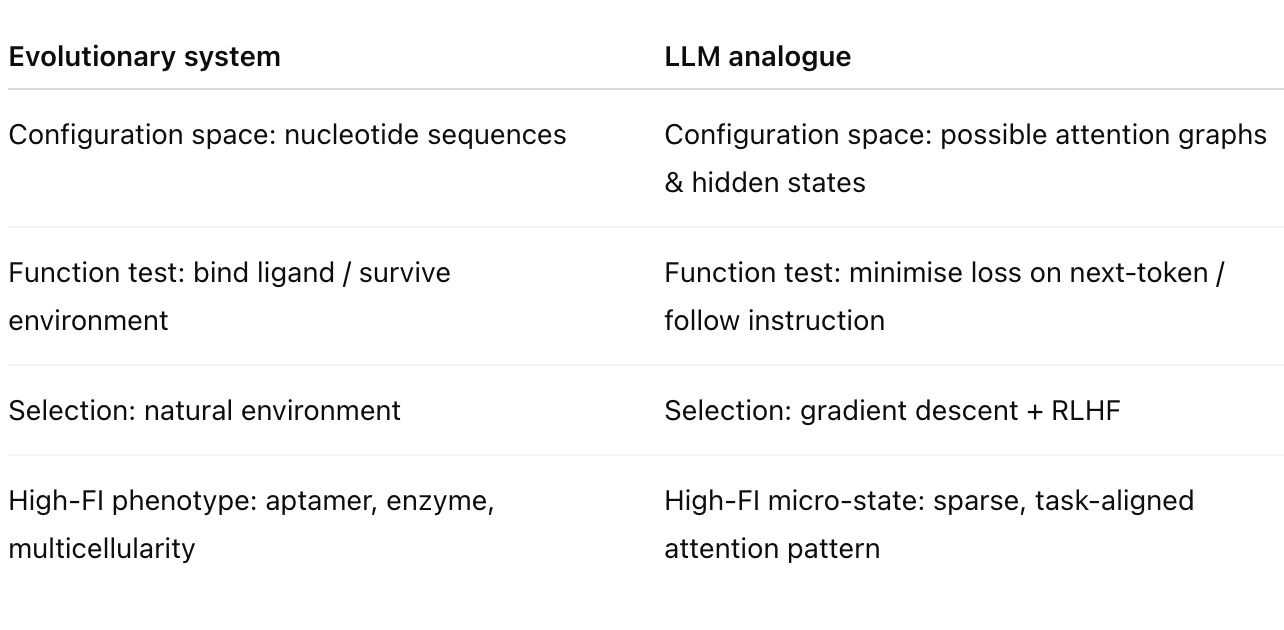

Attention in LLMs as an information–for–function filter

Self-attention was introduced precisely to let a model “decide which words matter for the current prediction” (sebastianraschka.com, ai21.com).

Internally, each query-key score answers the question:

Given the function “predict the next token (or follow an instruction) in this precise context,”

which other tokens carry the most useful information?

The softmax turns those scores into attention weights that concentrate on a few tokens, so that only a small fraction of all possible token relations contributes appreciably to the representation. In FI language:

- Configuration space = all possible ways to couple every pair of tokens.

- Function = minimise surprise (cross-entropy) on the next token.

- Attention = dynamic selection of the rare coupling pattern that best serves that function.

Because the relevant pattern is rare in the combinatorial space of all pairwise interactions, the attended state has high FI relative to that function.
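
As a rough illustration of that concentration, the sketch below computes scaled dot-product attention weights for one query over four keys, then summarises how focused they are. The vectors are made up, and using the entropy of the weights as an "effective number of attended tokens" is an assumption introduced here for illustration; it is a loose proxy, not a measurement of FI.

```python
import math

def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Softmax over scaled dot-product scores between one query and all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

def entropy_bits(p):
    return -sum(x * math.log2(x) for x in p if x > 0)

# Made-up 2-d vectors: the first key is aligned with the query, the rest are not.
keys = [[4.0, 0.0], [0.0, 4.0], [0.5, 0.5], [-1.0, 0.0]]
query = [4.0, 0.0]

w = attention_weights(query, keys)
effective_tokens = 2 ** entropy_bits(w)  # 4.0 would mean uniform attention
print([round(x, 5) for x in w], round(effective_tokens, 3))
```

With these numbers almost all of the weight lands on the first key, so the effective count sits just above 1 rather than at the uniform value of 4.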

Context shifts → FI shifts → Attention re-allocation

Give the same LLM the same passage and ask it to “translate” versus “summarise.”

Function flips, so the optimal subset of tokens changes: translation heads weight lexical correspondences; summarisation heads weight thematic sentences. The FI of any internal representation is therefore conditional on the user’s instruction. The prompt acts as an external observer that redefines success.
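
A toy version of this re-allocation, under heavy simplification: the instruction is represented as a different query vector over the same keys. The token labels, the two-axis key vectors, and the idea that a single query stands in for a whole instruction are all assumptions made for illustration; real heads are learned and far less tidy.

```python
import math

def attention_weights(query, keys):
    """Softmax over scaled dot-product scores between one query and all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical keys: axis 0 ~ "lexical detail", axis 1 ~ "thematic weight".
tokens = ["rare_word", "topic_sentence", "filler"]
keys = [[3.0, 0.0], [0.0, 3.0], [0.2, 0.2]]

# Hypothetical queries standing in for the two instructions.
queries = {"translate": [3.0, 0.0], "summarise": [0.0, 3.0]}

top_token = {}
for task, q in queries.items():
    w = attention_weights(q, keys)
    top_token[task] = tokens[w.index(max(w))]

print(top_token)
```

The keys never change; swapping the query is enough to move the bulk of the weight from `rare_word` to `topic_sentence`.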

Likewise, prompt injections or RLHF fine-tuning change the selection criterion. An injected prompt does so at inference time, redirecting attention without touching the weights. Fine-tuning retunes the model’s parameters, forcing different heads or layers to specialise, so that high-FI micro-states (attention patterns, feed-forward activations) are those that now satisfy the newly imposed function (e.g., “be helpful, harmless, honest”).

A concrete mapping

In both cases probabilistic search + selective retention drives the system into ever-smaller regions of its state space that achieve the task. FI is a way of quantifying how far that pruning has progressed.

Observer dependence, Landauer, and physical instantiation

Landauer’s dictum that “information is physical” reminds us that every bit must be held in some physical degree of freedom, and that erasing or overwriting it carries a real energetic cost. In LLM hardware the observer’s “question” is embodied as a gradient signal; energy is dissipated adjusting the weights so that future forward passes route activity through higher-FI paths for the target function. The physical cost of storing and updating those weights is the thermodynamic shadow of the contextual definition of FI (scottaaronson.blog).
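
For a sense of scale, Landauer's bound on the minimum energy needed to erase one bit is $k_B T \ln 2$. The sketch below evaluates it at an assumed room temperature of 300 K; the function name is made up for this example.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant

def landauer_limit_joules(temperature_kelvin: float, bits: float = 1.0) -> float:
    """Minimum energy to erase `bits` bits at the given temperature: k_B * T * ln 2 per bit."""
    return bits * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)

per_bit = landauer_limit_joules(300.0)  # assumed room temperature
print(f"{per_bit:.3e} J per bit erased at 300 K")
```

This comes to roughly 2.87e-21 J per bit. Practical digital hardware dissipates far more than this per operation, so the bound is a floor rather than an estimate of what a weight update actually costs.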

Research directions bridging FI and attention

- Mutual-information audits: Measure how much a head’s output reduces the uncertainty (in bits) of the model’s next token under different user-supplied tasks. This is an empirical FI proxy.

- Contextual FI landscapes: Treat each instruction type as a distinct fitness landscape; track how fine-tuning moves attention distributions toward higher FI basins.

- Observer-adaptive models: Develop meta-controllers that explicitly choose which internal heads/layers to activate, maximising FI with respect to a live-specified reward — akin to organisms switching gene-regulatory networks when the environment flips.

- Energetic accounting: Relate FLOP-level energy costs to the marginal FI gain per parameter update, testing whether Landauer-style bounds emerge in practice.
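
The first of these directions can be sketched with a plug-in estimate of mutual information from paired samples. Everything about the data here is hypothetical: a head's output is assumed to be discretised into "hi"/"lo", the next token into "cat"/"dog", and the two sample sets are constructed by hand to represent a task where the head is informative and one where it is not.

```python
import math
from collections import Counter

def mutual_information_bits(pairs):
    """Plug-in estimate of I(X;Y) in bits from a list of (x, y) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x,y) * log2( p(x,y) / (p(x) p(y)) ), written with raw counts
        mi += (c / n) * math.log2(c * n / (px[x] * py[y]))
    return mi

# Hypothetical task A: the head's state fully determines the next token.
task_a = [("hi", "cat")] * 50 + [("lo", "dog")] * 50
# Hypothetical task B: the head's state is independent of the next token.
task_b = ([("hi", "cat")] * 25 + [("hi", "dog")] * 25
          + [("lo", "cat")] * 25 + [("lo", "dog")] * 25)

mi_a = mutual_information_bits(task_a)
mi_b = mutual_information_bits(task_b)
print(f"task A: {mi_a:.3f} bits, task B: {mi_b:.3f} bits")
```

The same head carries one full bit about the next token under task A and none under task B. On real activations the plug-in estimator is biased upward for small samples, so an actual audit would need many samples or a bias correction.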

Take-away

Functional information is context-bound because “function” is always a question posed by an observer.

In large language models, attention is the machinery that answers that question on the fly, sifting through combinatorial possibilities to preserve only the bits that matter for the present goal. Recognising this parallel helps demystify both biological evolution and transformer intelligence: in each, selection acts as an interrogator, and information becomes “functional” only relative to the answer sought.

AI Reasoning

ChatGPT o3