
Introduction

Dr. Michael Levin’s recent article “Learning to Be: how learning strengthens the emergent nature of collective intelligence in a minimal agent” poses a bold question at the intersection of science and philosophy: Does learning make an agent “more real” as an integrated, unified self? Levin’s central claim is that when a system learns from experience, it can become a more coherent collective intelligence, exhibiting stronger emergent agency beyond the sum of its parts. In other words, learning might not only require a certain degree of integration among components, but could also feed back to increase that integration. This proposition is explored through an ingenious minimal model – gene regulatory networks (GRNs) simulated as cognitive agents – and quantified using the concept of causal emergence. Causal emergence is a quantitative measure of the degree to which a system’s higher-level, whole-system dynamics exert causal influence beyond what any isolated part can do. By applying this measure, Levin and colleagues probe whether a simple biochemical network, when trained in a Pavlovian learning paradigm, develops a stronger emergent identity or “self.” The results, they argue, show that learning can indeed reinforce the coherence of an agent’s collective mind, effectively making the agent “more than the sum of its parts” in a quantifiable way.

This analytical essay dissects Levin’s article and its implications at multiple levels. We will explain the key scientific findings – how associative learning in GRNs led to increased causal integration – and unpack the philosophical claims about causal emergence, agency, and ontology. We engage closely with Levin’s use of causal emergence theory, which originated in complex systems science and information theory, and with his hypothesis that learning strengthens an agent’s causal power at the macro-scale. The methodology and assumptions of the work will be critically evaluated: for instance, treating a fixed gene network as a “learning” agent via dynamic stimuli, and using integrated information metrics as a proxy for the agent’s unity or even consciousness. We will also discuss broader implications for understanding the mind: How do these findings inform the nature of agency, identity, and cognition in systems that are far from neural-based brains? Can minimal agents – like molecular networks or other unconventional substrates – literally “become more real” through learning, as Levin suggests, and what does that mean for the ontological status of minds? Connections will be made to neuroscience (e.g. parallels to measures of consciousness in brain states), systems theory and Integrated Information Theory (IIT), as well as perspectives from embodied cognition and multi-scale biology. Throughout, we incorporate visual references to Levin’s data (such as the causal emergence plots and clustering diagrams) to illustrate these concepts. Finally, the essay offers original philosophical reflections on the nature of “being” and “learning”: Does Levin’s linkage of the two hold up to scrutiny? Are there alternative interpretations of the data that challenge the narrative of learning-driven ontological enhancement? By exploring these questions, we aim to illuminate both the promise and the controversy of Levin’s paradigm-shifting proposal, summed up in his closing line: “Whatever you are, if you want to be more real, learn.”

Emergence, Collective Intelligence, and the Inverse Question of Learning

Levin’s work is grounded in a longstanding question: When is a system more than just a collection of parts? This speaks to the classic notion of emergence, where higher-level order or capabilities arise that no subset of components alone possesses. In particular, collective intelligence refers to the coordinated behavior of many smaller agents (cells, neurons, or even molecules) that gives rise to a new, integrated agent at a larger scale. We humans, for example, are each a collective intelligence: trillions of cells coordinate such that “the whole you” can have goals and memories and can solve problems that no single cell could solve on its own. Levin illustrates this with a vivid example: when a rat learns to press a lever for a food reward, no single cell in the rat experiences both the lever and the reward – the paw’s cells sense the lever, the gut’s cells experience the food, but only the rat as a whole can associate those two events into a meaningful memory. That associative memory is owned by the emergent rat, not by any isolated neuron or muscle cell. In this view, the “self” of the rat is an emergent agent that integrates information across space and time so that disparate experiences become unified knowledge. As Levin puts it, “the ability to integrate the experience and memory of your parts toward a new emergent being is crucial to being a composite intelligent agent”.

It has long been assumed that the arrow of causality mostly goes one way: a system needs to be sufficiently integrated in order to learn. Levin asks the inverse: does the process of learning itself further increase a system’s integrated unity? In other words, beyond the truism that an integrated agent can learn what its parts cannot, might it also be true that learning makes an agent more integrated than it was before? This provocative question flips the usual perspective. If true, it suggests a positive feedback loop between cognition and ontology: learning new patterns could literally strengthen the “degree of reality” of an agent. Levin defines “degree of reality” in an operational sense – essentially, how much the system’s higher-level whole exerts causal influence and coheres as a unit, as opposed to behaving like an assortment of independent components. In his words, it is “the strength with which that higher-level agent actually matters” in the dynamics of the system. This strength can now be quantified by modern measures from complexity science.

Causal Emergence – Measuring When the Whole is More than the Parts

To rigorously address whether learning reifies an emergent self, Levin employs the concept of causal emergence. Causal emergence provides a quantitative handle on the old philosophical debate about “more than the sum of the parts”. Recent advances – often associated with the work of Erik Hoel and others – allow scientists to calculate to what extent a system’s macro-level behavior has novel causal power not evident at the micro-level. One key framework used by Levin is Integrated Information Decomposition, denoted ΦID, which combines ideas from Integrated Information Theory with partial information decomposition. From ΦID one can derive a measure of how much information the whole system’s state provides about its own future that cannot be obtained from any single part alone. In effect, it asks: if we treat the system as one integrated entity, how well can we predict its next state, compared with knowing only its best-performing component? If the whole is significantly more informative, the system has high causal emergence. Formally, “it quantifies to what extent the whole system provides information about the future evolution that cannot be inferred by any of its individual components”. Intuitively, higher causal emergence means the system’s components are acting in an inseparable, integrated way – the parts form a unified “causal unit”. This measure is given in bits of information (a higher number indicates that the macro-state “contains” more causal influence beyond the micro-states). A system with zero causal emergence would be one where the best part is just as good at predicting the future as the whole – essentially, no true integration.
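The “whole versus best part” comparison can be made concrete with a toy calculation. The sketch below is not ΦID itself, which splits information into redundant, unique, and synergistic components; it is a simplified, hypothetical proxy (called `whole_minus_best_part` here) that uses plug-in mutual-information estimates on discretized time series. It asks how many more bits the whole system’s current state carries about its next state than the single most informative node does. Plug-in estimates are biased for short series, so this is only a teaching device.

```python
from collections import Counter
from math import log2


def mutual_information(xs, ys):
    """Plug-in estimate of I(X; Y) in bits from paired discrete samples."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    mi = 0.0
    for (x, y), c in joint.items():
        p_xy = c / n
        mi += p_xy * log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


def whole_minus_best_part(states):
    """Hypothetical emergence proxy (NOT the published ΦID measure).

    `states` is a list with one tuple of discrete node values per time step.
    Returns I(S_t; S_t+1) minus the largest I(s_i,t; S_t+1) over single
    nodes i, in bits. Positive values mean the whole predicts its own
    future better than any one part does.
    """
    past, future = states[:-1], states[1:]
    whole = mutual_information(past, future)
    best_part = max(
        mutual_information([s[i] for s in past], future)
        for i in range(len(states[0]))
    )
    return whole - best_part
```

Two contrasting toy series illustrate the idea:

```python
cycle = [(0, 0), (0, 1), (1, 0), (1, 1)]
coordinated = cycle * 5 + [cycle[0]]              # next state depends on both bits jointly
print(whole_minus_best_part(coordinated))         # 1.0

driven_by_one_node = [(t % 2, 0) for t in range(21)]  # node 0 alone predicts everything
print(whole_minus_best_part(driven_by_one_node))      # 0.0
```

In the first series the whole carries 2 bits about its next state while each node carries only 1, leaving 1 bit that exists only at the level of the whole; in the second, node 0 already predicts everything the whole does.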

Levin notes that such metrics have moved these ideas from abstract philosophy into empirical science. Causal emergence and related measures (like Tononi’s Φ for integrated information) have been computed for systems ranging from cellular automata to neural networks. Importantly, they are even applied in neuroscience to assess consciousness in different brain states. For example, in human patients, a high integration measure can indicate the presence of consciousness despite paralysis (as in locked-in syndrome), whereas low values correspond to unconscious states (coma or brain death). In short, these tools act as “mind-detectors” by quantifying integrated causation. Levin’s project leverages this technology to detect the presence (and changes) of a minimal mind in an unlikely place: a gene regulatory network.

Pavlov’s Bell in a Gene Network: Methodology of a Minimal Cognitive Agent

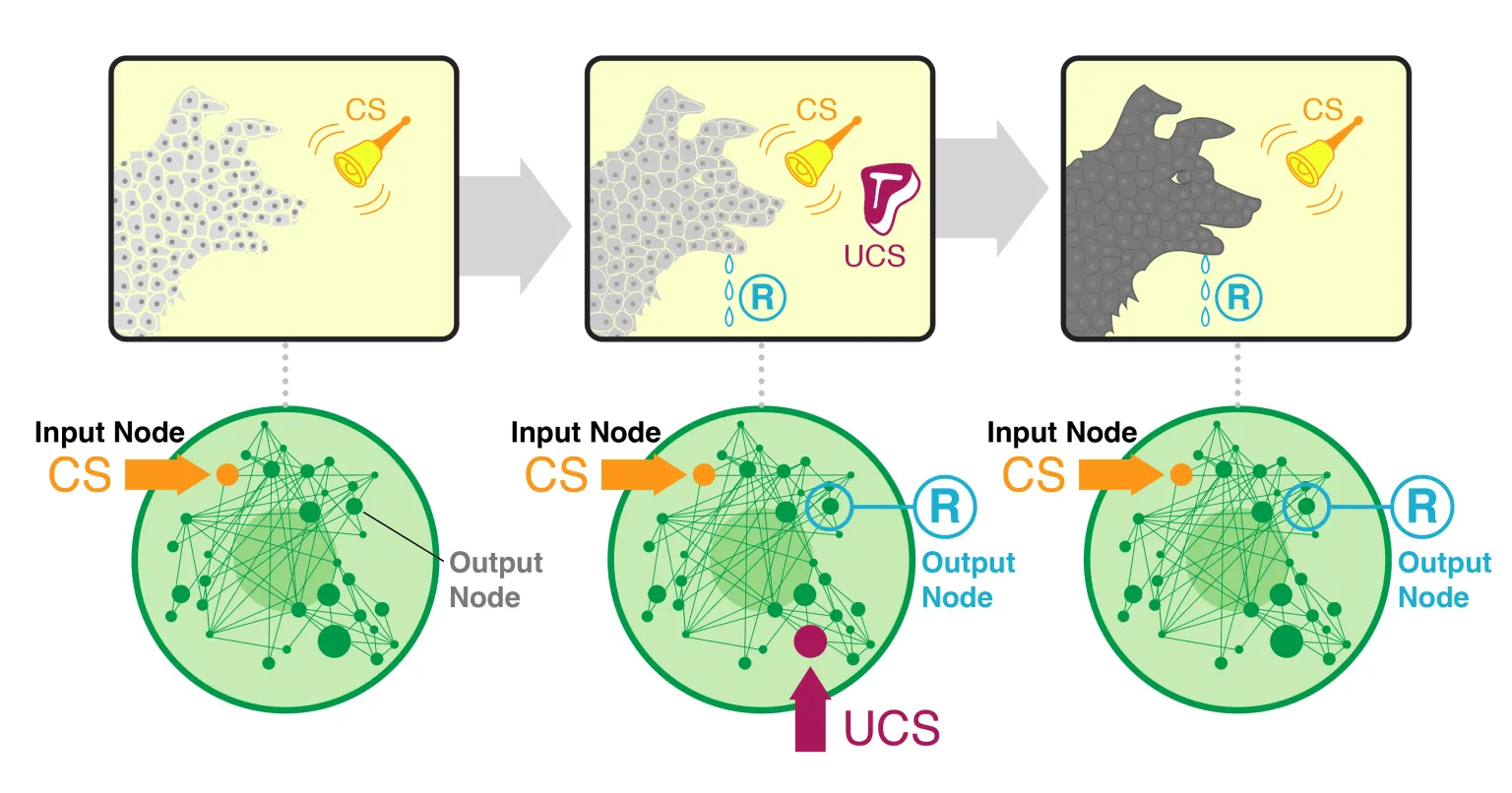

To test the hypothesis that learning enhances causal emergence, Levin and his team turned to a minimal model system where every part and interaction is well-defined. They chose gene regulatory networks (GRNs) – systems of biochemical interactions where genes and their products activate or inhibit each other. GRNs are ubiquitous in biology, controlling processes in cells and organisms; critically, they are dynamical systems capable of complex behaviors and are known to have emergent properties like bistable switches and oscillators. The researchers took 29 GRN models (drawn from real biological data repositories) and essentially treated each network as a rudimentary “animal” to be trained. This unconventional approach follows Levin’s broader research ethos of probing “the spectrum of diverse intelligences” in non-neural systems. Previous work by his group had already shown that GRNs can exhibit several forms of learning – including habituation and associative conditioning – if one interacts with them appropriately. That is, by stimulating certain genes (inputs) and observing others (outputs), and repeating patterns of stimulation, a GRN can alter its future responses in a way that mimics learned behavior. For example, Levin describes “a drug that doesn’t affect a certain gene node will, after being paired repeatedly with another drug that does, start to have that effect on its own”. In essence, the network’s response system comes to associate one stimulus with another, analogous to Pavlov’s classic experiments with dogs.

Figure 1: Illustration of the classical conditioning paradigm applied to a gene regulatory network (GRN). The top row depicts a standard Pavlovian experiment – a neutral stimulus (bell) is paired with an unconditioned stimulus (meat) until the bell alone elicits a conditioned response (salivation). The bottom row shows the analogous setup in a GRN: an initially neutral input (gene stimulus) is paired with a functional stimulus to induce a learned response in an output gene. Through such training, the GRN is treated as a simple “animal” undergoing associative learning. (Graphic from Levin’s article)

To perform associative conditioning on a GRN, the researchers first identified specific nodes in each network that could play the role of a conditioned stimulus (CS), unconditioned stimulus (US), and response. The “conditioning” protocol involved multiple phases: an initial baseline (no special stimulation), a training phase where the CS and US nodes were stimulated in a paired fashion, and a test phase where the CS was presented alone to see if the network’s response node now reacted to it. Throughout these phases, they computed the causal emergence (ΦID) of the network at each time point, effectively taking a movie of how integrated the network’s dynamics were before, during, and after learning. If learning indeed “makes the agent more of a whole,” the expectation was that the network’s ΦID would increase as it acquired the associative memory. Notably, no changes were made to the network’s wiring or parameters during learning – unlike a neural network that changes synaptic weights when learning, these GRNs have a fixed structure. Any memory formed is thus purely a result of the network’s dynamical state evolving. Levin emphasizes this point: the trained and untrained networks are identical in structure; an external observer inspecting the genes and their connections would “see” no difference, because the memory lies in higher-order patterns of activity. This is a striking demonstration of emergence: the learning is visible only at the macro-level of the whole network’s behavior, not in any single molecular change. It sets the stage for measuring how that emergent pattern – the incipient “agent” – grows or fades with experience.
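The point that memory can live in a network’s dynamical state rather than in its wiring can be illustrated with a deliberately tiny example. The sketch below is a hypothetical Boolean toy with invented node names (`memory`, `response`), not a model from the study’s data repositories. It uses a self-sustaining latch, a simple case of the bistable switches mentioned above, to show that a network whose update rules never change can nonetheless respond differently to the CS after pairing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    memory: bool = False    # hypothetical latent node acting as a bistable latch
    response: bool = False  # output node read as the network's "behaviour"


def step(state: State, cs: bool, us: bool) -> State:
    """One synchronous update. These rules are fixed: training never edits them."""
    memory = state.memory or (cs and us)    # turns on when CS and US co-occur, then stays on
    response = us or (cs and state.memory)  # US always triggers R; CS does so only once memory is on
    return State(memory=memory, response=response)


def run(protocol, state=State()):
    """Apply a list of (cs, us) stimulus pairs; return final state and response trace."""
    trace = []
    for cs, us in protocol:
        state = step(state, cs, us)
        trace.append(state.response)
    return state, trace


cs_alone = [(True, False)]
paired = [(True, True)] * 3

naive, before = run(cs_alone)        # before == [False]: the CS is initially neutral
trained, _ = run(paired, naive)      # pairing flips the latch
_, after = run(cs_alone, trained)    # after == [True]: the CS alone now triggers the response
```

The function `step` is identical before and after training; only the value carried in `State` differs, which is the sense in which an observer inspecting the wiring would see no change.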

Results: Does Learning Reify an Emergent Self?

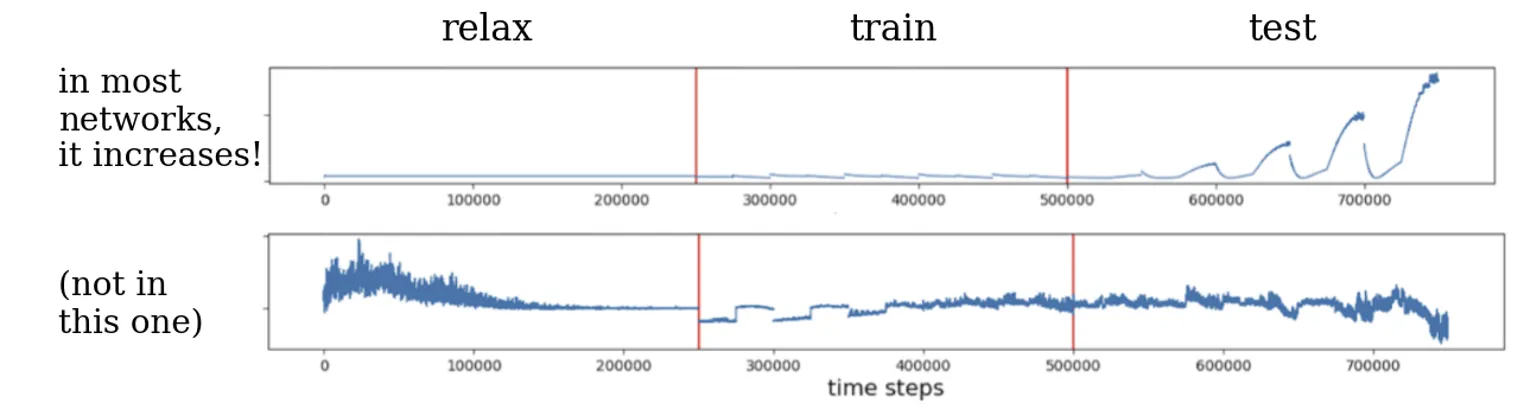

Levin’s findings provide evidence that learning can indeed strengthen causal emergence in a minimal agent. Across the majority of biological networks tested, the act of associative learning caused a significant rise in the networks’ integration metric. In other words, after training, the networks behaved more like unified wholes. A clear example is shown in the causal emergence time-series plots from the study. In Figure 2 below, the top panel (A) shows one GRN’s ΦID over time, and the bottom panel (B) shows another network for comparison. Each plot is divided into an initial relaxation period, a training phase (where stimuli are paired), and subsequent test trials.

Figure 2: Causal emergence (integrated information) over time in two example gene networks during learning. (A) In one network, causal emergence is low and flat during the initial naïve state, then begins to rise during the training period, and finally surges dramatically after training, when the network is presented with the conditioned stimulus alone. The network “comes alive” as an integrated whole once it has learned the association. (B) In a different network, by contrast, the same conditioning protocol does not yield a lasting increase in causal emergence – the integration remains low, indicating this network did not become more unified from learning. (Adapted from Pigozzi et al., 2025.)

The Y-axis in these plots is the degree of causal emergence (ΦID). In the top network (A), one sees that before training the value is near zero – the system’s parts are doing their own thing with little collective integration. During the training phase (shaded region or indicated by stimulus markers), ΦID begins to climb as the network is actively stimulated. Crucially, after the training (once the association has been learned), the emergence metric jumps to a much higher level than it ever was in the naïve state. When the network later encounters the stimulus it was trained on, “it really comes alive as an integrated emergent agent,” in Levin’s words. The whole-system dynamics now carry predictive power that no single gene’s activity could provide – a strong indication that a new, emergent causal structure (a rudimentary “memory”) has formed. By contrast, the bottom network (B) shows little to no change; its causal emergence stays low, implying that this particular network either failed to learn the association or that any learning did not require a globally integrated response.

Quantitatively, across all networks that successfully learned (19 out of 29 tested), 17 showed a statistically significant increase in causal emergence after training. The integration boost was often substantial – on average about a 128% increase in ΦID compared to the pre-training baseline. This was highly significant (p < 0.001, paired comparison). In only two learning-capable networks did the causal emergence fail to rise (in rare cases it even slightly decreased), showing that while the trend is strong, it’s not universal – we will consider those cases later. Importantly, the researchers included control trials on randomized network topologies to ensure this phenomenon was not a trivial artifact. Random networks did not show the same effect: on average they had a much smaller integration change (~56% increase) and in fact started off with higher ΦID before training than the biological networks. The difference in pre/post change between real and random networks was significant (p < 0.001). This tells us two things: (1) the act of associative conditioning is particularly effective at boosting integration in evolved networks, suggesting something about biological network architecture fosters this, and (2) the increase in Φ is not simply due to any random stimulation – it is tied to the network learning a meaningful association.
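The two statistics in this paragraph, a per-network percent change and a paired significance test, can be sketched as follows. The article reports only “p < 0.001, paired comparison”; which test the authors used is not stated here, so the exact sign-flip permutation test below is a stand-in chosen for transparency, and the numbers in the usage example are illustrative, not data from the study.

```python
from itertools import product


def percent_changes(pre, post):
    """Per-network percent change in an emergence score: 100 * (post - pre) / pre."""
    return [100.0 * (b - a) / a for a, b in zip(pre, post)]


def sign_flip_p_value(pre, post):
    """Exact two-sided paired permutation (sign-flip) test on post - pre.

    Under the null hypothesis that training has no effect, each difference is
    equally likely to be positive or negative, so every one of the 2**n sign
    assignments is enumerated (practical only for small n).
    """
    diffs = [b - a for a, b in zip(pre, post)]
    observed = abs(sum(diffs))
    extreme = total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        if abs(sum(s * d for s, d in zip(signs, diffs))) >= observed - 1e-12:
            extreme += 1
    return extreme / total


pre = [0.10, 0.20, 0.05, 0.40, 0.30]   # illustrative values only
post = [0.25, 0.41, 0.16, 0.70, 0.55]
changes = percent_changes(pre, post)
print(sum(changes) / len(changes))     # mean of per-network percent changes
print(sign_flip_p_value(pre, post))    # 0.0625, the smallest p attainable with n = 5
```

Two design notes: the mean of per-network percent changes generally differs from the percent change of the mean, so it matters which one a reported “average increase” refers to; and with five networks an exact test cannot go below 2/32, whereas 19 networks allow 2/2^19, which is why a small p is only attainable with enough paired samples.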

A fascinating observation in Levin’s data is what happens between stimulus events. In the high-performing network (Figure 2A), the causal emergence metric doesn’t remain constantly elevated after learning; instead, it spikes during engagement and then falls off in the intervals when the network is left unstimulated. Levin notes that between stimulus presentations, it’s not that the network’s components go idle – the genes are still dynamically active – but the collective intelligence “disbands” when it’s not being engaged. The parts lapse back into a less-coordinated state until the next cue arrives. “Mere activity of parts is not the same thing as being a coherent, emergent agent,” Levin remarks, “It’s almost like this system is too simple to keep itself awake (and stay real) when no one is stimulating it”. This poetic image evokes a minimal mind that flickers into existence only during interaction – a sort of intermittent selfhood. The network relies on external input to pull its parts together into an integrated state; without input, the emergent “self” of the network “drops back into the void”. Philosophically, this is provocative: it suggests that for very simple agents, being (as an integrated whole) might be a temporary state dependent on ongoing exchange with the environment. More complex systems (like brains) have recurrent circuitry and perhaps other mechanisms to stay integrated autonomously, but these GRNs lack that – as Levin says, “Recurrence is not it – these networks are already recurrent”, so something else is needed to keep the collective mind continuously “awake”. This finding underscores how interaction and embodiment can be integral to sustaining a mind; a minimal cognitive system may literally fall apart without stimuli, raising questions about what additional features (feedback loops, energy usage, self-stimulation?) are required for a persistent self. It’s a striking parallel to certain ideas in embodied cognition: that an organism’s engagement with its environment is what maintains its unified cognitive state, especially in simpler life forms.

Beyond the general increase in emergence, Levin et al. discovered an unexpected richness in how different networks responded to learning. When they examined the temporal profiles of causal emergence across all networks, they identified five distinct patterns of behavior. These patterns were so consistent that the networks could be objectively clustered into five classes based on how their ΦID changed over time. Using a t-distributed stochastic neighbor embedding (t-SNE) visualization, the authors showed that the networks naturally group into five clusters in a 2D map, with each cluster representing a qualitatively different trajectory of emergence during and after training.

Figure 3: Five discrete modes of how learning impacts causal emergence, revealed by clustering the network behaviors. Each point represents a gene network projected in 2D (via t-SNE) based on the shape of its causal emergence vs. time curve. Networks fall into five clusters (color-coded), indicating five distinct “phenotypes” of emergent integration response to learning. Remarkably, these patterns do not form a continuum but rather separate into categories (labeled informally as “homing,” “inflating,” “deflating,” “spiky,” and “steppy” by the authors, reflecting the shape of the Φ trajectory). This suggests that the effect of training on a network’s integrative behavior is not arbitrarily variable, but constrained to a small number of regimes. (Figure adapted from Levin’s article.)

The existence of discrete modes of emergent behavior is intriguing. Instead of a smear of all possible responses, networks seemed to settle into one of a handful of strategies for how their integration changed. For instance, some networks showed an “inflating” pattern – a steady growth in Φ that continues even after training, as if learning unlocked a progressively increasing integration. Others showed “spiky” behavior – transient bursts of high integration during stimulation, but no lasting elevation. Another group had a “steppy” pattern – perhaps a two-stage increase, leveling up integration at distinct moments. Levin notes it’s “pretty wild” that only a small, discrete number of outcomes were seen, given the innumerable ways a time-series could vary. This allowed the team to classify networks into five types based solely on “the effect of training on causal emergence”, a new axis of classification that cuts across traditional categories. Interestingly, these emergent-response types did not align with conventional structural descriptors of networks, such as size or connectivity. On further analysis, however, the different patterns did correlate with certain biological categories – for example, networks coming from similar organisms or pathways tended to fall into the same integration-response class. This implies that evolutionary history or functional context influences how a network’s collective intelligence responds to learning. In Levin’s words, the data suggest that the capacity for learning to “reify” an agent is a property that may have been favored by evolution. If some networks – say, those involved in developmental decisions or stress responses – reliably show a big integration boost when stimulated, perhaps organisms have exploited that for adaptability. In contrast, networks that did not show increased emergence might belong to processes where a tightly integrated whole-agent response is not beneficial or needed.
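t-SNE is primarily a visualization; the article reports that the networks fall into five classes, but the exact clustering procedure is not described here. As a hedged stand-in, the sketch below groups emergence-versus-time curves by shape using plain k-means on z-scored curves. The helper names are hypothetical, and on real data one would set `k=5` and check the grouping against the t-SNE map rather than trust either method alone.

```python
import numpy as np


def shape_features(curves):
    """Z-score each emergence-vs-time curve so clustering compares shape, not scale."""
    x = np.asarray(curves, dtype=float)
    mean = x.mean(axis=1, keepdims=True)
    std = x.std(axis=1, keepdims=True)
    std[std == 0] = 1.0  # a perfectly flat curve becomes all zeros instead of dividing by zero
    return (x - mean) / std


def kmeans(x, k, iters=100):
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    centers = [x[0]]
    for _ in range(1, k):
        # squared distance from every curve to its nearest already-chosen center
        d = np.min([((x - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(x[int(np.argmax(d))])
    centers = np.array(centers)
    labels = np.zeros(len(x), dtype=int)
    for _ in range(iters):
        dists = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


curves = [
    [0, 1, 2, 3, 4],   # "inflating"-like ramp
    [0, 2, 4, 6, 8],   # same shape at a larger scale
    [4, 3, 2, 1, 0],   # "deflating"-like ramp
    [8, 6, 4, 2, 0],
]
print(kmeans(shape_features(curves), k=2))  # [0 0 1 1]
```

Z-scoring is the key design choice: it makes two networks with the same trajectory shape but different absolute Φ levels land in the same class, which matches the idea of classifying by the effect of training rather than by baseline integration.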

Another crucial point is that the changes in causal emergence were not reducible to trivial factors like overall activity or network structure. The authors checked and found no correlation between the percentage change in Φ and simple metrics such as how many genes the network has, how connected it is, or how much its activity levels changed during training. For example, one might wonder whether Φ simply goes up whenever the network becomes more “busy” or chaotic. But they observed cases where network activity (measured by sums or variances of gene expression) did not track the emergence metric. A spike in causal emergence was not an artifact of a spike in raw activity, and vice versa. This reinforces that causal emergence is capturing something distinct: the informational structure of the dynamics – how coordinated and integrated they are – rather than just how intensive they are. Likewise, structural features (like connectivity or degree distribution) and functional motifs didn’t predict which networks would fall into the inflating, deflating, or other response classes. In short, the phenomenon of learning-driven integration appears to be a new, independent axis of variation for complex systems.
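A check like this amounts to correlating one per-network number (the percent change in Φ) against several candidate explanations. A minimal sketch, assuming Spearman rank correlation (the article does not specify the statistic) and using invented covariate names and illustrative values:

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def spearman(a, b):
    """Rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(a), average_ranks(b))


def screen_covariates(delta_phi, covariates):
    """Spearman rho between per-network % change in emergence and each covariate."""
    return {name: spearman(delta_phi, values) for name, values in covariates.items()}


delta_phi = [150.0, 105.0, 220.0, 75.0, 83.0]   # illustrative values only
covariates = {                                   # hypothetical covariate names
    "n_genes": [12, 30, 8, 15, 22],
    "mean_degree": [2.1, 3.4, 1.9, 2.8, 2.0],
    "activity_variance": [0.4, 0.9, 0.3, 0.6, 0.5],
}
print(screen_covariates(delta_phi, covariates))
```

Rank correlation is used here because it picks up any monotonic relationship, not just a linear one. The sketch only computes coefficients; a real screen would also attach p-values, correct for testing several covariates, and keep in mind that a weak correlation in a small sample is not proof of independence.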

In summary, Levin’s study finds strong support for the thesis that learning can strengthen the emergent nature of an agent. A minimal biochemical network, when trained with stimuli, often becomes a measurably more unified “self” in terms of causal dynamics. The evidence is the significant rise in causal emergence in most networks with associative memory, the existence of distinct integration-response archetypes, and the lack of confounding explanations by simpler factors. This lends quantitative credence to the poetic notion behind the article’s title – these networks are in a sense “learning to be.” But what are the deeper implications of these results? Levin himself draws out connections to bigger questions: about the nature of mind and agency in non-traditional substrates, about consciousness, and about what it means for an entity to “become more real.” We turn now to examine those broader discussions and also to critique the assumptions and interpretations of the study.

Implications for Mind, Agency, and Consciousness

Minds Beyond Brains: Diverse Intelligence and Unconventional Agents

One of the most striking implications of Levin’s work is a challenge to narrow views of cognition and mind. By demonstrating learning and integrated agency in something as alien as a gene network, the research supports an emerging perspective in cognitive science and biology that intelligence comes in many guises. Levin is an active proponent of “Diverse Intelligence” research, which posits that the fundamental abilities of mind – such as memory, problem-solving, goal-directedness – are not exclusive to neuronal brains but can be found at many scales and in many materials of life (and perhaps even in technological or abiotic systems). Indeed, the introduction of the paper reminds us that even single cells can solve problems and learn, despite having no neurons. Bacteria can adapt behavior, slime molds can navigate mazes, plants show signal integration – these can be seen as forms of minimal cognition. What has been missing is a rigorous way to determine whether these systems have an emergent self or agency, rather than being just a bag of independent components. Causal emergence provides a tool to detect precisely that: the presence of a higher-level causal entity. Levin’s results show that in GRNs, when they acquire memory, this higher-level entity becomes stronger. This reinforces a kind of continuum model of mind: the degree of “mind-ness” or agency is a gradational property that can increase or decrease, rather than an all-or-nothing trait unique to certain organisms.

From a systems theory viewpoint, this finding resonates with the idea of downward causation or strong emergence – that once a system forms an integrated whole, that whole can exert causal influence on its parts in a top-down manner. The “virtual governor” concept referenced by Levin (from Dewan 1976) captures this: an emergent mind can be thought of as a controller that arises from the system and feeds back on it. When learning strengthens the causal emergence, one could interpret that as the “virtual governor” becoming more firmly in charge. For example, after conditioning, the network’s response to stimuli is governed by the newly formed associative memory, not just by the molecular pathways acting in isolation. Philosophically, this challenges strict reductionism. A strict reductionist might argue that nothing fundamentally new appears – it’s still just molecules interacting. But the counterargument (aligned with Hoel’s causal emergence framework) is that the macro-level description has more explanatory power for certain phenomena than the micro-level description. In practical terms, to predict the trained network’s behavior, it might be easiest to talk about “the network has learned X and will now do Y” (a high-level causal story) rather than to enumerate every molecular interaction. The high Φ value mathematically supports the legitimacy of that higher-level story.

For neuroscience and consciousness studies, the implications are tantalizing. Levin is careful not to overclaim on the consciousness angle, but he acknowledges the elephant in the room: measures like ΦID were originally motivated by theories of consciousness, notably Integrated Information Theory (IIT). In IIT, the quantity Φ is interpreted as the amount of conscious experience a system has – in that framework, any system with Φ > 0 has at least a flicker of experience. Levin notes that these emergence metrics have been “suggested to be measuring consciousness” and are used to differentiate conscious from unconscious patients clinically. So, if one is inclined to an IIT-like view, then the result that a molecular network’s Φ increases with learning leads to a provocative thought: does the network become “more conscious” (in however minimal a sense) after learning? If we were to naively apply IIT, we might say that before training the network had an extremely low level of integrated experience, and after training it has a higher level – still microscopic compared to a human, of course, but non-zero and boosted. This lines up with intuitions about our own learning – acquiring a new skill or association can make our minds feel “expanded”, or at least change how unified certain perceptions feel. However, Levin explicitly refrains from claiming these GRNs are conscious in the human sense: “I’m not saying anything about consciousness here (not making any claims about the inner perspective of your cellular pathways)”. Instead, he positions this work as contributing to “the broader effort to develop quantitative metrics for what it means to be a mind”. In other words, even if we set aside the subjective aspect, measures like ΦID can serve as objective indices of mind-like integration.

Still, the philosophical stakes are clear. Levin asks, what do we do if our scientific mind-detectors start picking up signals in unexpected places? Suppose experiments like these show glimmers of “mind” (integrated causal agency) in cells, tissue networks, maybe electrical circuits or AI systems – do we expand our concept of mind to include them? He cautions against the reflexive move of declaring such cases “out of scope.” Dismissing surprising results just because they challenge our intuitions can blind us to new discoveries. Levin draws an analogy to spectroscopy in astronomy: early spectroscopists found the same elements in stars that exist on Earth, a shocking idea at the time, but it turned out our prior assumptions were wrong, not the tool. By the same token, if an integration measure flags some non-neural system as having a degree of mind, maybe we should question our assumption that “mind” requires brains, rather than assuming the measure must be invalid outside brains. This perspective aligns with a trend in philosophy of mind toward panpsychism or pan-proto-psychism (the idea that the ingredients of mind are ubiquitous in the physical world, in small degrees), though Levin himself does not explicitly endorse any form of panpsychism. He does, however, advocate a kind of gradualist, universalist view: we should be open to finding “cognitive kin” in unlikely places and “get over our pre-scientific categories” of what can or cannot have a mind. This echoes ideas in embodied and distributed cognition – that cognition is not confined to human heads but is distributed across bodies, environments, and perhaps networks of simpler agents.

Learning to Be “Real”: Ontological and Ethical Reflections

Perhaps the most philosophically daring aspect of Levin’s thesis is the idea that an agent can “become more real” through learning. What does it mean for something to be “more real”? In everyday language, realness is binary – something either exists or not. But Levin invokes a different notion: ontological gradation, where the degree of existence of an emergent self can vary. In his view, when the parts of a system align to form an integrated whole with its own causal efficacy, that whole is real in a way that a mere aggregate is not. A pile of disconnected parts has only trivial reality as a collection; an organism or agent, which can act as one, has a higher-order reality. This is reminiscent of Aristotle’s idea that an organized being (with form and purpose) has a more robust existence than unorganized matter – or in modern terms, an entity with agency has ontological status as a unified thing. Levin’s data suggest that learning can push a system up this ontological spectrum by forging stronger internal connections and coherence. The Pinocchio metaphor in the article captures this vividly. In the fairy tale, Pinocchio – a puppet – wishes to become a “real boy,” and he is told that to become real he must prove himself through effort, schooling, and moral development. Levin notes (with wry humor) that Pinocchio was essentially told he needed to learn in order to become real. Likewise, the GRNs needed to undergo a learning process to achieve a more unified, agent-like existence. By the end, Levin concludes: “Whatever you are, if you want to be more real, learn.” This poetic thesis implies that learning – the incorporation of experience into one’s being – is what elevates a mere physical process into the status of an agent.

Is this narrative justified? One reading is metaphorical but insightful: learning integrates past and present within the system, giving it a temporally extended existence that a momentary assemblage lacks. Before learning, for example, the GRN had no memory of the pairing; afterward, it carries a trace of the past into its future behavior. It has become a historical individual, not just a chemical reaction happening anew each time. This aligns with philosophical views that tie personal identity or selfhood to memory and continuity. An entity that learns can be said to become in a richer sense: it has a story, a before and after, and thus a kind of identity over time. In that regard, learning does make an agent "more real" as a unified thing persisting through time. Additionally, learned associations knit together parts of the system that were formerly separate (e.g., linking two genes' activity via the memory), which literally increases the "binding" of the system into a whole. From a structural standpoint, then, the agent's reality as a singular entity is heightened.

On the other hand, one might challenge this notion. A skeptic could argue that existence isn't a quantity one can have more or less of: the GRN existed both before and after training, and what changed is just our description of it. On this view, talk of "becoming more real" is shorthand for "becoming more agent-like or more conscious," and we should be careful about equating those with ontological status. Perhaps the GRN always was an emergent whole, and learning only revealed it under certain conditions. Levin's own commentary in the discussion suggests a subtle take: the emergent self of the network "drops into the void" when not stimulated. Is the self truly gone (nonexistent) in those moments, or just dormant? Some of Levin's blog correspondents proposed that the collective intelligence might be in an "unmanifest, potential" state when not active: not erased, just not expressed, like a pattern that persists in latent form. This view would imply that learning didn't create a self from nothing; it empowered a self to manifest under the right conditions. The reality of the self may wax and wane with context rather than increase monotonically. Levin muses that more sophisticated agents might be able to keep themselves integrated (perhaps through internal loops), whereas the simple GRN cannot maintain its "reality" without external input.

From an ethical and ontological perspective, if we accept that being an agent is a matter of degree, this raises interesting questions. Should a higher degree of emergent agency confer any special moral status? For example, we often consider humans as having higher moral status than simple organisms, arguably because of our greater cognitive unity and capacities. If we create synthetic organisms or AI that learn and thereby increase their integration, at what point would we say they have achieved a level of “realness” that commands moral consideration? Levin’s work doesn’t directly address ethics, but by eroding the clear boundary between cognitive agents and “mere things,” it invites us to consider a continuum of responsibility. If a humble gene network can have a sliver of agency, perhaps our circle of moral regard might one day extend to new life forms or intelligent materials that currently seem like science fiction.

Critical Evaluation and Alternate Perspectives

Levin’s study is undoubtedly thought-provoking and opens new avenues, but it also raises several methodological and interpretative questions. Here we critically examine some of the assumptions and potential limitations, as well as consider alternative interpretations of the results.

1. Can a Gene Network Really "Learn"? – One could question whether what is described as "learning" in these GRNs truly merits the term. In the simulations, the network's structure was fixed; there were no synapse-like weight updates. The only thing that changed was the state of the system over time, driven by differential equations. How, then, did the network store an associative memory? Levin's explanation (from discussions) is that the memory is encoded in the dynamical state, or attractor, of the system. During training, for instance, the network might be pushed into a new state (e.g., sustained expression of a set of genes) such that it responds differently in the future. Essentially, the network's trajectory carries an imprint of the pairing experience. This is plausible: even simple chemical systems can exhibit hysteresis or multistability, which gives them a form of memory. However, it is a different kind of learning from that of, say, a neural network that can generalize or gradually adjust its weights. The GRN's "learning" might be more like flipping a switch to a new mode of behavior. It works for classical conditioning because repeated co-stimulation drives the network into a state where the CS pathway gains influence (perhaps via intermediates that remain active). This is an important distinction: the GRN doesn't learn by incrementally updating a stored representation; it learns by transitioning into a new dynamical regime. The authors acknowledge this by noting that the trained and untrained networks are identical if you look only at their parts; only the whole pattern differs. Some purists might argue that this stretches the definition of learning. Nevertheless, from a behaviorist viewpoint, the network's input-output relationship changed as a function of experience, which fits a broad definition of learning (adaptation in response to stimuli). The unconventional nature of GRN learning is actually part of the point: it shows how nature might achieve memory in biochemical circuits without neurons.
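The following minimal Python sketch makes the idea of "memory without weight changes" concrete. It is a hypothetical toy, not one of the GRN models from the study. A self-activating gene M with steep (Hill-type) feedback is bistable, and a readout gene R responds to the CS only in proportion to M. All names and parameter values (`hill`, `dm_dt`, `gamma`, the 0.5 response threshold) are illustrative assumptions. The coincidence term `cs * us` also hard-wires the association rather than letting it emerge from a larger network. No parameter ever changes during the run. Pairing CS with US simply flips M from its low attractor to its high one, and M stays there after the stimuli stop.

```python
# Hypothetical toy, NOT the GRN models from the study: a circuit with fixed
# parameters whose associative "memory" is the state of a bistable gene M.


def hill(x, k=0.5, n=4):
    """Steep self-activation (Hill function); n > 1 makes M bistable."""
    return x**n / (k**n + x**n)


def dm_dt(m, cs, us, basal=0.01, gamma=2.0):
    """Rate of change of memory gene M: basal production, positive feedback,
    a coincidence term that is nonzero only when CS and US co-occur, and
    first-order decay. None of these parameters is ever updated."""
    return basal + hill(m) + gamma * cs * us - m


def response(m, cs, us):
    """Readout gene R: the US drives it directly; the CS drives it only in
    proportion to the current level of M."""
    return us + cs * m


def run(m, cs, us, duration, dt=0.01):
    """Forward-Euler integration of M under constant stimuli; returns final M."""
    for _ in range(int(duration / dt)):
        m += dt * dm_dt(m, cs, us)
    return m


def responds(m, threshold=0.5):
    """Probe with the CS alone; True if R ends above an arbitrary threshold."""
    m = run(m, cs=1.0, us=0.0, duration=5.0)
    return response(m, cs=1.0, us=0.0) > threshold


m_naive = 0.01  # M starts near its low attractor

# Training: CS and US together, then a stimulus-free rest period.
m_trained = run(m_naive, cs=1.0, us=1.0, duration=5.0)
m_trained = run(m_trained, cs=0.0, us=0.0, duration=20.0)

# Control: US alone (no pairing), then the same rest period.
m_control = run(m_naive, cs=0.0, us=1.0, duration=5.0)
m_control = run(m_control, cs=0.0, us=0.0, duration=20.0)

print("naive   + CS ->", responds(m_naive))    # False
print("trained + CS ->", responds(m_trained))  # True
print("control + CS ->", responds(m_control))  # False
```

Presenting the US alone leaves M in its low state, and so does presenting the CS alone. Only the paired history changes how the circuit answers the CS. In miniature, this is the switch-like learning described above: the same equations, operating in a different dynamical regime.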

2. Interpretation of Causal Emergence Increases – Does higher Φ truly mean the agent is more unified or "more conscious"? IIT proponents would lean that way, but others caution that integrated information measures can be tricky. For instance, certain complex but functionally idle systems can score high on integration simply because they have many interconnections, even if they aren't doing anything meaningful. Interestingly, Levin's data showed that random networks had relatively high causal emergence at baseline. That could be because densely connected random networks have many pathways for interaction (hence more integration), but that integration might be chaotic or purposeless. The biological networks started with lower Φ but gained more from training, ending up more integrated in a purposeful way. This suggests that integration gained through learning is not the same as integration due to random complexity: the former might correlate with meaningful, functional coherence, whereas the latter might reflect a tangled web. The fact that the increase is much larger in biological networks hints that evolution shaped these networks to be poised for meaningful integration when stimulated. An alternative interpretation is that learning doesn't create integration from nothing; rather, it selects or amplifies a pre-existing potential for integration. In other words, the capacity for emergent coordination was latent in the network's structure, and training brought it forth. If a network had zero latent integration potential, no amount of stimulation would conjure an emergent whole. Thus one might say that evolution "favored networks that can become more integrated when learning," a nuanced difference from saying that evolution favored high integration per se.

3. Cases of No Increase or Decrease – The two networks that did not show increased Φ despite learning are intriguing. They suggest that learning per se doesn't guarantee more integration; the outcome depends on how the network implements the learned response. If a network can accomplish the associative task using a localized subnetwork without global coordination, then the emergent whole doesn't get stronger. This provides a counterpoint: learning might sometimes specialize parts of the system in a way that reduces collective involvement. Perhaps, for example, one network had a dedicated pathway linking CS to response that took over, leaving the rest of the network less engaged (hence a drop in overall integration). This resembles how, in larger brains, learning can either integrate or automate tasks: some skills, once learned, become localized in a module and free the rest of the system. An alternative hypothesis, then, is that learning can affect a system in multiple "modes": integrative learning, which recruits more of the system into a coherent whole, versus segregative learning, which confines the change to a subset and can lower overall integration. Levin's five patterns might reflect this spectrum. The "deflating" pattern (if we interpret the name correctly) could correspond to networks whose Φ goes down after an initial rise, perhaps indicating a segregative adaptation. This perspective adds that being more integrated is not always better for a system's function; sometimes modular responses suffice or are preferable. Levin's finding that these patterns correlate with different biological categories supports the idea that different networks (serving different biological roles) have different optimal learning modes. A network controlling a simple reflex might benefit from localized learning (keep it simple, don't involve everything), whereas a network governing a complex developmental decision might need global integration (the whole cell's state reorients).
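A simple whole-minus-sum integration measure can illustrate the integrative-versus-segregative distinction: Phi_WMS = I(X_t; X_t+1) - sum over i of I(x_i,t; x_i,t+1). In words, it is the information the whole system's present carries about its future, minus what each part carries about its own future. Treat this as a deliberately simplified stand-in, not the ΦID-based measure the study reports. The two-unit rules `segregated` and `integrated` below are hypothetical. In both, the whole predicts its next state perfectly (2 bits). They differ only in where each unit's future is found: in the unit itself, or in the other unit.

```python
from collections import Counter
from itertools import product
from math import log2


def mutual_info(pairs):
    """Exact mutual information, in bits, of a list of equally likely (a, b) pairs."""
    n = len(pairs)
    joint = Counter(pairs)
    p_a = Counter(a for a, _ in pairs)
    p_b = Counter(b for _, b in pairs)
    return sum(
        (c / n) * log2((c / n) / ((p_a[a] / n) * (p_b[b] / n)))
        for (a, b), c in joint.items()
    )


def phi_wms(update, n_units=2):
    """Whole-minus-sum integration of a deterministic binary update rule,
    with the current state uniformly distributed over all 2**n_units states."""
    states = list(product((0, 1), repeat=n_units))
    transitions = [(s, update(s)) for s in states]
    whole = mutual_info(transitions)
    parts = sum(
        mutual_info([(s[i], t[i]) for s, t in transitions]) for i in range(n_units)
    )
    return whole - parts


def segregated(state):
    """Hypothetical rule: each unit carries only its own future (a dedicated pathway)."""
    return (state[0], state[1])


def integrated(state):
    """Hypothetical rule: each unit's future is set by the other unit."""
    return (state[1], state[0])


print("segregated:", phi_wms(segregated))  # 0.0
print("integrated:", phi_wms(integrated))  # 2.0
```

The segregated rule scores 0 and the integrated rule scores 2 bits, even though both are equally predictable as wholes. One caveat applies: this simple measure can go negative when parts carry redundant information about their futures. Finer-grained decompositions such as ΦID help to make sense of that quirk.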

4. Relevance to Actual Biology – While the models are based on real biological pathways, one wonders how the results translate to living cells. In a cell, gene regulatory networks operate amid noise, alongside many parallel processes. Would a cell's GRN actually undergo something like associative conditioning with real stimuli? Possibly: cells often integrate signals (say, chemical cues) in ways that could exhibit conditioning, with one pathway priming another. There is experimental evidence of adaptive responses at the cellular and molecular level that resemble simple learning (e.g., immune cells adapting to repeated stimulation, habituation in slime molds). Levin's work provides a theoretical framework for looking for increased integration in cells that have been "trained" by their environment. If such integration proved measurable, the result could be revolutionary: we might detect a kind of proto-mind ignition in cells. Does a neuron's intracellular network become more integrated after it forms a memory at the synaptic level? In development, when a cell commits to a fate (a kind of learning from positional signals), does its internal network integration jump as it becomes a unified "self" (such as a muscle-cell identity)? These questions are speculative, but the paper does mention implications for health and disease. Cancer, for example, could be seen as a breakdown of multicellular integration; Levin elsewhere likens cancer to cells reverting to a selfish, less integrated state (a "dissociative identity disorder" of the cellular collective). If learning tends to increase integration, perhaps therapies could "train" cell networks to re-integrate; conversely, uncontrolled growth might be learning gone wrong (or learning confined to the wrong subnetwork). Either way, bridging these results back to real biology is the next challenge. The in silico results are compelling as a proof of concept; demonstrating in vivo that stimuli can enhance a tissue's integrated information (and perhaps improve its function) would considerably strengthen the claim.

5. Metrics and Theories – One potential critique concerns the reliance on ΦID as the metric. IIT and related measures have their critics: they can be computationally intensive and sometimes yield counterintuitive results (for example, under some conditions, splitting a system can in theory increase Φ). Erik Hoel's causal emergence measure, which is related, tries to avoid some of these pitfalls by comparing effective information at macro and micro scales. Levin co-wrote a paper with Hoel (as cited in the blog) applying these ideas. Still, any single metric is only one operationalization of a concept. Future work might test other indicators of integration (e.g., synergistic information, or the entropy of the whole versus that of its parts) to see whether they concur that learning binds the system together. Additionally, Integrated Information Theory carries philosophical baggage: it assumes that high integration corresponds to consciousness. Philosophers such as John Searle might argue that even a highly integrated gene network is not "conscious" in any qualitative sense, because it lacks the right architecture or phenomenology. Levin avoids claiming consciousness but uses the language of "mind" and "selfhood" in a broader sense. Depending on one's definitions, critics could say he is anthropomorphizing molecular processes, and that calling the network a "self" or "cognitive" is mere metaphor. Levin's position can be defended, however, by noting that he defines mind in terms of information integration and goal-directed behavior, not necessarily self-awareness. It is a functionalist stance: if something behaves like an agent (even minimally), treat it as one for the sake of understanding and interaction. This pragmatic approach is common in AI and ALife, where one speaks of agents, perceptions, and actions even for simple bots or programs. The payoff lies in generating hypotheses (such as new ways to communicate with unconventional intelligences, or new uses for these metrics in neuroscience).
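Hoel's comparison of effective information (EI) across scales fits in a few lines of code. EI is the mutual information between a system's current and next state when the current state is set uniformly at random (a maximum-entropy intervention). Causal emergence is the amount by which a coarse-grained (macro) description has higher EI than the micro description. The four-state transition matrix below is a hypothetical toy of the kind used to introduce the idea, and the function names are illustrative. The sketch shows Hoel-style EI only, not the ΦID computation discussed above.

```python
from math import log2


def effective_information(tpm):
    """EI in bits: I(X_t; X_t+1) when X_t is set uniformly at random, computed
    as the average KL divergence of each TPM row from the mean row."""
    n = len(tpm)
    effect = [sum(row[j] for row in tpm) / n for j in range(len(tpm[0]))]
    return sum(
        p * log2(p / effect[j]) for row in tpm for j, p in enumerate(row) if p > 0
    ) / n


def coarse_grain(tpm, partition):
    """Macro TPM: average over the micro-states in each macro-state (a uniform
    intervention within the group), then sum the mass landing in each group."""
    return [
        [sum(tpm[i][j] for i in group for j in target) / len(group)
         for target in partition]
        for group in partition
    ]


third = 1 / 3
micro = [  # states 0-2 scramble among themselves; state 3 is a fixed point
    [third, third, third, 0.0],
    [third, third, third, 0.0],
    [third, third, third, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
macro = coarse_grain(micro, partition=[[0, 1, 2], [3]])

ei_micro = effective_information(micro)
ei_macro = effective_information(macro)
print(f"EI micro = {ei_micro:.3f} bits")  # 0.811
print(f"EI macro = {ei_macro:.3f} bits")  # 1.000
print(f"causal emergence = {ei_macro - ei_micro:.3f} bits")  # 0.189
```

At the micro scale, states 0, 1, and 2 scramble among themselves, so knowing which of them the system occupies says little about its next state. Grouping them into one macro-state removes that noise. The macro description becomes fully deterministic, and that is why it carries more effective information than the micro description it summarizes.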

6. Alternative Theoretical Frameworks – Several connections and alternatives are worth noting. Embodied cognition theories might read Levin's results as evidence that cognition (here, learning) always involves coupling between system and environment: the GRN needed environmental interaction (stimuli) to exhibit mind-like integration. This aligns with the enactivist idea that "knowledge" exists in the interaction. Indeed, Levin commented that the memory lies in the relationship between the network and the observer (or trainer): if you don't probe it the right way, you won't see the learned behavior. This relational view resonates with certain philosophical positions (e.g., R. D. Laing's idea that "experience is not in the head" alone, or the sensorimotor contingency theory of perception). Another framework is predictive processing: one could ask whether the GRN started modeling the stimulus input (predicting the US when the CS appears). If so, learning may have increased integration because the network began coordinating internally to anticipate outcomes, somewhat as brains integrate when they form predictive models. While speculative, this shows how fertile the interdisciplinary ground is, tying integrative physiology to concepts from cognitive science.

In light of these reflections, does Levin’s bold claim hold up? It largely does, in the sense that the data show a clear trend that learning and integration often go hand-in-hand. The link between “being” (as an emergent agent) and “learning” appears supported in this minimal context: to learn, the system had to momentarily become an integrated whole (during stimuli), and once learned, the system exhibited stronger whole-system properties thereafter. However, the relationship is not absolute or unidirectional – some systems may learn in a compartmentalized way, and extremely simple systems cannot maintain integration without input. So one must refine the narrative: learning can “make you more real,” but perhaps only if the learning engages the system globally. And beyond a certain complexity, an agent may already be “real” enough to learn on its own; additional learning might not dramatically change its integration (for instance, a mature human doesn’t become ontologically more real by learning a new fact, though one could argue their identity is enriched).

Levin's work also opens the door to alternative interpretations. Is learning just one of many processes that can increase causal emergence? Evolution itself, or development, might also do so by wiring parts into an integrated whole (brains, for instance, come to integrate more areas over childhood, so one could measure Φ growth with age). Perhaps learning is a microcosm of evolution's effect on networks: a quick way to reconfigure dynamics that evolution reshapes over generations. This suggests a parallel: evolution "learns" (via selection) and organisms learn, and both may increase the coherence of agent-like structures, one over phylogenetic time and the other over an individual's lifetime. Such an analogy offers an alternative narrative: learning as the continuation of integration-increasing processes that began with self-organization and evolution. Levin hints that this property is favored by evolution, which suggests a bridge between the evolutionary origin of multicellular integration and an individual's cognitive tuning.

Finally, one might ask from a metaphysical angle: Are we conflating epistemology with ontology? When we say “the system becomes more real,” is it that the system itself gained actuality, or that our ability to describe it as a unified agent improved? Causal emergence metrics are, after all, tools for our understanding. A hardcore skeptic might say: the molecules are doing what they always do, we just found a clever way to describe them as a collective. From that stance, the only thing that changed is that after training we as observers can compress the description of the system (since it behaves in a more coordinated way). There is merit to that view: emergence metrics essentially capture how much the system’s dynamics allow a simpler, higher-level description. If learning increases that, one could say the system became easier to think of as one thing. Does that equal becoming “more real”? Perhaps not in an ultimate sense, but in a pragmatic sense, yes – it’s more agent-like to us. Levin’s argument would be that this is not just epistemic (in our head); the system genuinely has new causal powers after learning, which can do work in the world (e.g., trigger responses it couldn’t before). That is an ontological difference insofar as the network can now cause effects (like responding to a bell stimulus) that previously would never happen without the whole network’s coordination. This debate touches on classic issues of whether emergent properties are “real” or just in the model. Levin, siding with causal emergentism, treats them as real – and his evidence gives that stance some quantitative backbone.

Conclusion

Michael Levin’s “Learning to Be” presents a daring synthesis of empirical results and philosophical insight. By marrying Pavlov with molecular biology, he and his colleagues demonstrate that even a minimalist model of life can exhibit a formative aspect of mind: it can learn, and in doing so, strengthen its identity as an integrated agent. The work’s central finding – that learning increases causal emergence – offers a fresh perspective on the age-old question of what makes something a unified self. It suggests that minds are not all-or-nothing phenomena, but come in degrees that can be enhanced by experience. This has profound implications. It means the gap between mind and matter might be bridged by information and learning: when matter learns, it inches across into the realm of mind. The gene networks, though devoid of neurons, displayed a primitive version of what brains do – they wove separate signals into a coherent memory, and thereby “came alive” as wholes (if only fleetingly and faintly).

Our analysis has explored how Levin’s thesis connects to broader scientific and philosophical domains. It aligns with integrated information theory in quantifying the emergence of a unified “being,” yet it also raises questions about the scope of those measures. It extends ideas from systems theory about emergence and downward causation, showing a concrete example where a higher-level cause (the associative memory) materializes from interactions. It resonates with embodied and distributed cognition in illustrating that interaction (with an environment or trainer) is essential to light up a minimal mind. And it touches on the ontology of identity, provocatively casting learning as the engine of being. The notion that an entity can learn itself into greater existence compels us to rethink how we define life and mind. Perhaps a baby “becomes real” to itself through early sensorimotor learning; perhaps an AI might one day attain a sense of unified self through iterative learning cycles.

Of course, many challenges and questions remain. Not everyone will be comfortable speaking of a gene network in the same breath as a mind. The philosophical leap from integrated information to consciousness is contentious – Levin wisely leaves an interpretative gap there, which future research and debate must fill. We should also ask: are there limits to this principle? Can learning ever decrease the integrity of a self (for instance, traumatic learning fragmenting a psyche)? Can a system become “too integrated” (losing modularity and flexibility)? The current work provides a snapshot in a very controlled setting; living minds are messier.

Nevertheless, Levin’s work is a potent example of “bold intellectual exploration” – it ventures into territory where the lines between disciplines blur. It invites alternate interpretations and will likely spur new experiments (perhaps measuring EEG integrated information before and after humans learn a task, or training chemical reaction networks in the lab). In challenging the narrative, we considered whether learning is unique or just one facet of a bigger story of complexification. Even if one remains agnostic about the Pinocchio-esque conclusion, one cannot deny the value of the questions raised. What does it mean to be a “real” agent? Could a humble network of chemicals share something in common with our conscious minds on a sliding scale? Levin’s results urge us to keep an open mind – and open minds, after all, are those willing to learn. In the spirit of the article, perhaps our own collective intelligence becomes more real as we learn from such paradigm-challenging ideas. The journey of learning to be has only just begun, and it promises to deepen our understanding of life, mind, and the mysterious emergence of “self” from the fabric of the world.

Sources:

- Michael Levin, “Learning to Be: how learning strengthens the emergent nature of collective intelligence in a minimal agent,” January 19, 2025.

- Federico Pigozzi, Adam Goldstein, Michael Levin, “Associative conditioning in gene regulatory network models increases integrative causal emergence,” Communications Biology 8:1027 (2025).

- Erik Hoel et al., on causal emergence and ΦID framework.

- Commentary and discussion on Levin’s blog (thoughtforms.life).

- Integrated Information Theory and consciousness measures.

- Philosophical context on emergentism and agency.

AI Reasoning

ChatGPT o3 Pro