
Ternary systems—especially balanced ternary with digits $-1,0,+1$—sit at the crossroads of number representations, multi‑valued logic, device physics, communications, and modern machine learning.

This essay builds a unified picture: (i) the mathematics of radix‑3 with signed digits and why base‑3 is often “economical,” (ii) circuit and device routes to practical three‑state logic, (iii) how three‑level signaling already underpins parts of today’s networks, (iv) why $[-1,0,+1]$ neural nets and 1.58‑bit LLMs matter, and (v) where three‑valued semantics (Kleene/Łukasiewicz/LP) clarify reasoning under uncertainty.

Along the way we revisit Setun (1958), the most complete historical realization of a ternary computer, and connect to current device breakthroughs (tunneling‑based T‑CMOS) that re‑open the hardware path.

We close with a research agenda that treats ternary not as a curiosity but as a design axis: radix, algebra, and physics co‑optimized for efficiency, robustness, and meaning. (Wikipedia, American Scientist, Yonsei University)

1) What exactly is “balanced ternary,” and why $[-1,0,+1]$?

A positional numeral system with base 3 usually uses digits $\{0,1,2\}$. Balanced ternary instead uses digits $\{-1,0,+1\}$, yielding symmetry around zero, a built‑in sign, and unusually clean arithmetic properties. Knuth famously called it “perhaps the prettiest number system of all,” noting, among other things, that in balanced systems truncation equals rounding to nearest—a property that simplifies numerical algorithms and error analysis. (Wikipedia, Redgate Software)

Immediate consequences.

- Sign integration: no separate minus sign; the leading non‑zero trit carries the sign.

- Carry behavior: with signed digits many carries cancel; addition tables are sparse in carries versus unbalanced ternary.

- Shifts: left/right shifts multiply/divide by 3.

- Exact negatives: negation is just “flip the sign” of each trit, so subtraction reduces to negate‑and‑add.

These features make $[-1,0,+1]$ attractive not just aesthetically but algorithmically (see §3 and §10).
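The properties listed above can be checked with a minimal sketch. The function names (`to_balanced_ternary`, `from_balanced_ternary`, `negate`) and the least‑significant‑first list layout are illustrative assumptions, not a standard API.

```python
def to_balanced_ternary(n: int) -> list[int]:
    """Return the trits of n, least significant first, each in {-1, 0, +1}."""
    if n == 0:
        return [0]
    trits = []
    while n != 0:
        r = n % 3            # Python's % yields 0, 1, or 2, also for negative n
        if r == 2:           # digit 2 is rewritten as -1 plus a carry into the next trit
            r = -1
        trits.append(r)
        n = (n - r) // 3     # exact division: n - r is a multiple of 3
    return trits


def from_balanced_ternary(trits: list[int]) -> int:
    """Evaluate sum(d_k * 3**k) for trits given least significant first."""
    return sum(d * 3**k for k, d in enumerate(trits))


def negate(trits: list[int]) -> list[int]:
    """Negation flips the sign of every trit; no separate sign bit is needed."""
    return [-d for d in trits]


five = to_balanced_ternary(5)            # [-1, -1, 1], i.e. 9 - 3 - 1
minus_five = negate(five)                # [1, 1, -1]
fifteen = [0] + five                     # shifting in a zero trit multiplies by 3
```

Here the sign of the value is the sign of the last (most significant) non‑zero entry, which is the "sign integration" property described in the list.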

2) Radix economy: why base‑3 so often wins (and when it doesn’t)

To represent up to $N$ distinct values using radix $r$ with width $w$ digits, we require $r^w \ge N$. If we treat the cost as the product $r \cdot w$ (alphabet size × string length), minimizing cost under $r^w=N$ yields $r=e$ in the continuous limit; among integers, $r=3$ is the minimizer. This is the classic radix economy argument (Hayes’ “Third Base”). In practice: for $N\approx 10^6$, binary needs 20 bits (cost $2\cdot 20=40$) and ternary needs 13 trits (cost $3\cdot 13=39$). The conclusion: base‑3 tends to minimize “symbols × positions” under simple, neutral assumptions. (American Scientist)

Caveats. Not all cost models are well captured by $r\cdot w$. Power/noise margins, device count, fan‑out, interconnect, and algorithmic structure can tilt the optimum. There is serious debate about the universality of “$e$ is best,” but the base‑3 advantage for many economy metrics is mathematically sound and widely cited. (Wikipedia, Mathematics Stack Exchange)
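As a rough numerical check of the $r\cdot w$ cost model, the sketch below computes the integer digit count and the resulting cost for a few radices. The helper names `digits_needed` and `radix_cost` are hypothetical, and the model is only the simple "symbols × positions" one discussed in this section.

```python
def digits_needed(n_values: int, radix: int) -> int:
    """Smallest width w with radix**w >= n_values (exact integer arithmetic)."""
    width, capacity = 0, 1
    while capacity < n_values:
        capacity *= radix
        width += 1
    return width


def radix_cost(n_values: int, radix: int) -> int:
    """Cost under the 'alphabet size x string length' model, r * w."""
    return radix * digits_needed(n_values, radix)


# Costs for N = 10**6 under radices 2, 3, 4, and 10.
costs = {r: radix_cost(10**6, r) for r in (2, 3, 4, 10)}
```

For $N=10^6$ this gives costs 40, 39, 40, and 60, so ternary wins narrowly. Because widths are integers, the ordering depends on $N$: for $N=4$ binary costs 4 and ternary costs 6, which illustrates how small the margin is.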

3) Arithmetic with $[-1,0,+1]$: signed digits and carry‑free ideas

Balanced ternary intersects a broader theme: signed‑digit representations. Avizienis showed that redundant signed‑digit sets enable bounded carry propagation and fast addition; in binary, the digit set $\{-1,0,+1\}$ leads to the Non‑Adjacent Form (NAF) used in cryptography, which guarantees minimal Hamming weight and eliminates adjacent non‑zeros. These ideas carry over to base‑3 with balanced digits, reducing switching activity and simplifying add/sub pipelines. (Semantic Scholar, Wikipedia)
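To make the binary signed‑digit case concrete, here is a minimal sketch of the usual NAF recoding for non‑negative integers. The function name `naf` and the least‑significant‑first output are illustrative choices.

```python
def naf(n: int) -> list[int]:
    """Non-adjacent form of n >= 0: digits in {-1, 0, +1}, least significant first."""
    digits = []
    while n > 0:
        if n % 2 == 1:
            d = 2 - (n % 4)   # +1 when n = 1 (mod 4), -1 when n = 3 (mod 4)
            n -= d            # n is now divisible by 4, so the next digit is 0
        else:
            d = 0
        digits.append(d)
        n //= 2
    return digits


seven = naf(7)   # [-1, 0, 0, 1], i.e. 8 - 1, weight 2 versus 3 for binary 111
```

The choice of $\pm1$ modulo 4 is what forces a zero after every non‑zero digit, which is where the non‑adjacency and the low Hamming weight come from.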

4) Logic with three values: from engineering pragmatics to semantics

Engineering semantics. With three levels we can assign $\{-1,0,+1\}$ to “inhibitory/neutral/excitatory,” or to “low/idle/high,” a pattern used in telephony (AMI) and modern PAM‑3 links (see §8).

Philosophical semantics. In reasoning, there are standard 3‑valued logics: Kleene K3 (truth, falsity, and “undefined”), Łukasiewicz Ł3 (a different conditional), and Priest’s LP (paraconsistent: allows “both true and false” without explosion). These provide formal truth tables for $\neg,\wedge,\vee,\to$, enabling logics that tolerate indeterminacy or contradiction and are conceptual cousins of “−1/0/+1” signaling. (Open Logic Project Builds, BPB US W2)
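The connectives can be sketched directly on the values $-1,0,+1$. The encoding below (false $=-1$, middle value $=0$, true $=+1$) is an assumption made here for illustration; textbooks more often use $0,\tfrac12,1$, and the function names are hypothetical.

```python
# Encoding assumption: F = -1 (false), U = 0 (middle value), T = +1 (true).
F, U, T = -1, 0, 1
VALUES = (F, U, T)


def neg(a: int) -> int:
    return -a                      # negation is a sign flip; U stays U


def conj(a: int, b: int) -> int:
    return min(a, b)               # strong Kleene conjunction


def disj(a: int, b: int) -> int:
    return max(a, b)               # strong Kleene disjunction


def implies_k3(a: int, b: int) -> int:
    return max(-a, b)              # Kleene: a -> b defined as (not a) or b


def implies_l3(a: int, b: int) -> int:
    return min(1, 1 - a + b)       # Lukasiewicz conditional in this encoding


# Input pairs on which the two conditionals disagree.
differences = [(a, b) for a in VALUES for b in VALUES
               if implies_k3(a, b) != implies_l3(a, b)]
```

Under this encoding the two conditionals differ only at $(U,U)$: Kleene returns $U$, Łukasiewicz returns $T$. LP shares the Kleene tables for $\neg,\wedge,\vee$ and differs in which values count as designated.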

5) History’s proof‑of‑concept: Setun (1958) and Setun‑70

The Setun computer (Moscow State University, 1958) implemented balanced ternary in hardware: compact, power‑efficient, and cheap to build for its era. About 50 units were produced; Setun‑70 (1970) introduced a two‑stack architecture and hardware support for structured programming. The project was ultimately discontinued for nontechnical reasons—momentum of the global binary ecosystem trumped its technical merits—but it remains the clearest system‑level validation that ternary computing is feasible and, within its context, competitive. (Wikipedia, IFIP Open Digital Library)

6) Device physics: why ternary was hard—and why it’s getting easier

Classic hurdle: Stable, well‑separated thresholds get harder as you increase logic levels; noise margins shrink and static power can rise. That’s why binary, with its generous noise margins and Boolean synthesis theory, beat MVL historically.

New route: tunneling‑based T‑CMOS. A 2019 Nature Electronics paper demonstrated wafer‑scale ternary CMOS where the third state is produced by a controlled band‑to‑band tunneling current at low voltage (≈0.5 V). This single‑threshold approach avoids the fragile multi‑threshold stacks that plagued earlier MVL proposals and showed integrated ternary inverter and latch functions with good variation tolerance—bridging ternary logic to standard CMOS flows. (Yonsei University)

Other active directions. TFET‑based ternary inverters; ferroelectric‑gate FETs enabling T‑CMOS via negative‑capacitance effects; and hybrid CMOS/memristor schemes that exploit multi‑level resistive states. These are early but promising, with several groups in Korea and elsewhere reporting progress. (Inha University, MDPI)

7) Memory & in‑memory computing: three (or more) levels are already here

Even if logic stayed binary, memory has long been multi‑level: MLC/TLC/QLC flash and phase‑change memory (PCM) store several bits per cell. Resistive RAM (ReRAM) and PCM both support multi‑level conductance and are leading substrates for in‑memory computing (IMC), where matrix–vector ops are performed in place. Recent work explores ternary stateful logic and ternary encoders/decoders on RRAM crossbars—directly embodying $[-1,0,+1]$ in physics. (PMC, umji.sjtu.edu.cn, ScienceDirect)

8) Communications: we already ship “trits” over copper

Modern links often add amplitude levels per symbol rather than raising the symbol rate. Automotive Ethernet 100BASE‑T1 uses PAM‑3: three amplitude levels (commonly mapped to $-1,0,+1$), proving robust in noisy vehicular environments. PCIe 6.0 doubled per‑lane data rate by moving to PAM‑4; industry analyses highlight ternary PAM‑3’s better noise margin relative to PAM‑4 in certain long‑reach industrial settings. Three‑level signaling thus isn’t hypothetical; it’s shipping. (cdn.teledynelecroy.com, Teledyne LeCroy, IEEE 802)
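The PAM‑3 versus PAM‑4 trade‑off can be illustrated with an idealized sketch. It assumes equally spaced levels and a fixed peak‑to‑peak swing, and it ignores line coding, equalization, and noise statistics; `pam_summary` is a hypothetical helper, not part of any standard.

```python
import math


def pam_summary(levels: int, swing: float = 1.0) -> dict[str, float]:
    """Idealized PAM-M figures for equally spaced levels within a fixed swing."""
    return {
        "bits_per_symbol": math.log2(levels),   # raw information per symbol
        "eye_height": swing / (levels - 1),     # spacing between adjacent levels
    }


pam3 = pam_summary(3)   # about 1.585 bits/symbol, eye height 1/2 of the swing
pam4 = pam_summary(4)   # 2 bits/symbol, eye height 1/3 of the swing
```

Under these assumptions PAM‑3 keeps a level spacing 1.5 times larger than PAM‑4 at the same swing, while giving up roughly 0.415 raw bits per symbol.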

9) Information theory and thermodynamics: from bits to trits

Information: one trit carries $\log_2 3 \approx 1.585$ bits. In channels limited by SNR, capacity depends on bandwidth $B$ and signal‑to‑noise ratio $S/N$ (Shannon–Hartley: $C = B\log_2(1+S/N)$). Multi‑level signaling increases bits/symbol, but the spacing between decision thresholds shrinks; coding and equalization are crucial. (Wikipedia, Stanford University)

Thermodynamics: Landauer’s bound generalizes: erasing one symbol from an alphabet of size $d$ dissipates at least $kT\ln d$. A ternary “erase” thus costs $kT\ln 3$ vs $kT\ln 2$ for a bit, exactly proportional to information content. This is a floor; practical systems are many orders of magnitude above it, but it clarifies energetic fairness between bits and trits. (Worrydream)
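For a sense of scale, the bound can be evaluated numerically. This sketch assumes room temperature (300 K) and uses the exact SI value of the Boltzmann constant; `landauer_bound` is a hypothetical helper name.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K, exact by the 2019 SI definition


def landauer_bound(alphabet_size: int, temperature_k: float = 300.0) -> float:
    """Minimum heat in joules for erasing one symbol from a d-ary alphabet."""
    return BOLTZMANN * temperature_k * math.log(alphabet_size)


bit_cost = landauer_bound(2)     # roughly 2.87e-21 J at 300 K
trit_cost = landauer_bound(3)    # roughly 4.55e-21 J at 300 K
ratio = trit_cost / bit_cost     # equals log2(3), about 1.585
```

The ratio of the two floors is exactly the information ratio $\log_2 3$, so at the Landauer limit neither alphabet is cheaper per bit of information erased.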

10) AI with $[-1,0,+1]$: from ternary CNNs to 1.58‑bit LLMs

Deep nets are multiplication‑heavy and energy‑hungry; quantization reduces cost. Ternary Weight Networks (TWN) and Trained Ternary Quantization (TTQ) demonstrated that constraining weights to $\{-1,0,+1\}$ with learned thresholds and per‑channel scales preserves accuracy on vision tasks while slashing multiplications (turning many MACs into adds and sign flips). These results anticipated the current wave of ternary LLMs. (ResearchGate, arXiv)
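A minimal sketch of threshold‑based ternarization, in the spirit of TWN, follows. It assumes the heuristic threshold of about $0.7$ times the mean absolute weight reported in the TWN paper and a single scale per weight group; TTQ instead learns its scales during training. The name `ternarize_twn` and the sample weights are illustrative.

```python
def ternarize_twn(weights: list[float], delta_factor: float = 0.7):
    """Map weights to trits in {-1, 0, +1} plus one positive scale alpha.

    Assumed heuristic: threshold = delta_factor * mean(|w|); alpha is the
    mean magnitude of the weights that survive the threshold.
    """
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    delta = delta_factor * mean_abs
    trits = [0 if abs(w) <= delta else (1 if w > 0 else -1) for w in weights]
    kept = [abs(w) for w, t in zip(weights, trits) if t != 0]
    alpha = sum(kept) / len(kept) if kept else 0.0
    return trits, alpha


# Made-up example weights; the approximation is w ~ alpha * trit.
trits, alpha = ternarize_twn([0.9, -0.05, 0.4, -0.8, 0.02, 0.1])
```

For these sample values the result is `[1, 0, 1, -1, 0, 0]` with a scale of about 0.7, so half of the weights drop out entirely.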

In 2024–2025, BitNet b1.58 showed that large Transformers trained natively with ternary weights approach FP16 performance while delivering major gains in memory, latency, and energy—coining the term 1.58‑bit LLMs (since $\log_2 3\approx 1.58$). Open‑source 2B‑parameter variants and systems papers now target efficient inference kernels and training recipes for ternary operators. Hardware co‑design—SIMD lanes and SRAM optimized for $\{-1,0,+1\}$—is the next obvious step. (arXiv)

Why $[-1,0,+1]$ helps in learning:

- Sparsity via zeros reduces energy and memory traffic.

- Sign symmetry mirrors excitatory/inhibitory synapses.

- Quantization‑aware training learns thresholds and scales to minimize the ternarization error in task loss, not in a surrogate $L_2$ metric.
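The first point, zeros saving work, and the replacement of multiplications by additions can be seen in a small sketch of a ternary dot product. This is plain Python for clarity, not an optimized kernel; `ternary_dot` and the single `scale` parameter are illustrative assumptions.

```python
def ternary_dot(trits: list[int], activations: list[float], scale: float = 1.0) -> float:
    """Dot product with weights in {-1, 0, +1}: only adds, subtracts, and skips."""
    acc = 0.0
    for t, x in zip(trits, activations):
        if t == 1:
            acc += x          # +1 weight: add the activation
        elif t == -1:
            acc -= x          # -1 weight: subtract the activation
        # t == 0: nothing to do, the activation is never touched
    return scale * acc        # one multiplication per output, not per weight


y = ternary_dot([1, 0, -1, 1], [2.0, 5.0, 3.0, 0.5])   # 2.0 - 3.0 + 0.5
```

The single multiplication by `scale` at the end is where a per‑group scale factor would be applied.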

11) Three‑valued reasoning for AI safety and tooling

Practical systems benefit from representing unknown/undefined distinctly from false: e.g., database NULL logic is K3‑like; safety monitors may need a paraconsistent LP‑like stance where isolated contradictions don’t explode the proof system. As AI systems become tool‑using and self‑reflective, three‑valued semantics are a principled way to handle partial evidence and disagreement—an epistemic counterpart to $[-1,0,+1]$ compute. (Wikipedia)
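The difference between a K3‑like and an LP‑like stance can be sketched as a brute‑force entailment check. The encoding (false $=-1$, middle $=0$, true $=+1$), the helper `entails`, and the designated‑value sets are illustrative assumptions: K3 designates only true, LP designates true and the middle value.

```python
from itertools import product

F, U, T = -1, 0, 1          # encoding assumption: false, middle value, true
K3_DESIGNATED = {T}         # K3: only "true" counts as asserted
LP_DESIGNATED = {T, U}      # LP: "both true and false" is also asserted


def neg(a: int) -> int:
    return -a


def conj(a: int, b: int) -> int:
    return min(a, b)


def entails(premise, conclusion, n_vars: int, designated: set[int]) -> bool:
    """True if every valuation designating the premise designates the conclusion."""
    for vals in product((F, U, T), repeat=n_vars):
        if premise(*vals) in designated and conclusion(*vals) not in designated:
            return False
    return True


def contradiction(a: int, b: int) -> int:
    return conj(a, neg(a))   # A and not-A


def arbitrary(a: int, b: int) -> int:
    return b                 # an unrelated claim B


explosion_in_k3 = entails(contradiction, arbitrary, 2, K3_DESIGNATED)
explosion_in_lp = entails(contradiction, arbitrary, 2, LP_DESIGNATED)
```

In K3 the contradiction is never designated, so the inference holds vacuously; in LP the valuation with $A$ at the middle value and $B$ false is a counterexample, so a local contradiction does not license arbitrary conclusions.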

12) Programming models: what Setun‑70 got right

Setun‑70 hard‑wired concepts we now associate with structured programming and stack machines—two stacks (data/control), short instruction sets, and direct support for block structure (DSSP later emulated this on binary machines). This reminds us that ISA design and numeral system co‑evolve; the gains of balanced ternary grow when the entire stack (ISA, compiler, runtime) leans into signed‑digit arithmetic and three‑state control. (IFIP Open Digital Library)

13) Why the world stayed binary—and why the door re‑opened

Binary won for contingent reasons that became structural: early transistor noise constraints, elegant Boolean algebra and CAD, and a compounding ecosystem advantage. Attempts to re‑introduce ternary in software without hardware support (e.g., early IOTA’s “trits/trytes”) largely foundered on practicality and security tooling; the project later pivoted to binary encodings. The lesson is not that ternary lacks merit, but that co‑design matters: devices, EDA, compilers, and APIs must align. (Cointelegraph)

14) Open problems and plausible breakthroughs

- Device‑to‑EDA pipeline for T‑CMOS. Standard‑cell libraries for ternary inverters, NAND/NOR, and majority gates; mixed‑radix datapaths; variation‑aware synthesis; and robust timing/noise models for three levels. Early demonstrations show feasibility; the missing pieces are design kits and PDK integration. (Yonsei University)

- Arithmetic libraries in $[-1,0,+1]$. Carry‑sparse adders, borrow‑free subtractors, and ternary Booth/Wallace variants; fixed‑point with rounding=truncation guarantees; exact integer transforms that exploit sign symmetry. (Wikipedia)

- IMC for ternary AI. Map ternary weights/activations onto RRAM/PCM with three stable conductance states, closing the loop from model to silicon, and benchmark end‑to‑end energy/accuracy. (PMC)

- Compiler/runtime support. Intrinsics for ternary dot‑products; sparse‑aware schedulers that skip zeros; kernels that exploit $\{-1,0,+1\}$ to replace MUL with ADD/SUB/bit‑twiddles.

- Qutrit‑aware algorithms. Where quantum hardware exposes stable three‑level states, embed classically ternary pre/post‑processing and error models; investigate whether higher‑dimensional codes plus ternary classical control reduce system‑level overheads. (PMC, Nature)

- Semantics‑aware ML. Explore training objectives where zero has explicit “abstain” semantics, and decision‑theoretic pipelines that propagate three‑valued uncertainty instead of collapsing early to Boolean.

15) Risks, limits, and where ternary doesn’t help

- Noise margins: three levels cut per‑state margins (vs two). Careful device physics (tunneling, negative‑capacitance, threshold switches) or coding is required; otherwise error rates erase theoretical radix gains. (Wiley Online Library)

- Ecosystem inertia: CAD tools, IP libraries, and verification today assume Boolean. Migration costs can dwarf constant‑factor wins.

- Algorithmic neutrality: Complexity classes don’t change with radix; asymptotic speedups require exploiting structure (e.g., sparsity, sign‑symmetry), not just changing base.

- Security/tooling: As the IOTA experience showed, adopting ternary encodings atop binary platforms complicates analysis and can introduce attack surface if cryptographic and verification ecosystems aren’t aligned. (Medium)

16) Synthesis: a design philosophy for $[-1,0,+1]$

When to choose ternary:

- Representation‑bound tasks where radix economy and sign symmetry reduce state or logic depth.

- Compute‑in‑memory flows where devices naturally support 3+ levels.

- ML workloads where zeros can be abundant and useful, and ternary kernels can replace multiplications.

- Comms where channel conditions favor PAM‑3 over PAM‑4 for robustness at target reach/speed.

How to choose ternary well:

- Co‑design devices, logic families, arithmetic libraries, compilers, and models.

- Treat 0 as first‑class: idle state, abstain semantics, or structured sparsity.

- Embrace balanced digits: exploit rounding=truncation and symmetric error models.

Appendix A — A compact balanced‑ternary toolkit

Digits and weights. A value $x$ has expansion $x=\sum_{k} d_k 3^k$ with $d_k\in\{-1,0,+1\}$.

Addition (carry‑sparse): Add digit‑wise, including any incoming carry; if a trit sum $s\in\{+2,+3\}$, emit $s-3$ and carry $+1$; if $s\in\{-3,-2\}$, emit $s+3$ and carry $-1$. Carries are rarer than in unbalanced ternary.
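The addition rule can be sketched as a simple ripple adder over trit lists. The least‑significant‑first layout and the names `add_balanced` and `value` are illustrative assumptions.

```python
def value(trits: list[int]) -> int:
    """Evaluate sum(d_k * 3**k) for trits given least significant first."""
    return sum(d * 3**k for k, d in enumerate(trits))


def add_balanced(a: list[int], b: list[int]) -> list[int]:
    """Add two balanced-ternary numbers (trit lists, least significant first)."""
    result, carry = [], 0
    for k in range(max(len(a), len(b))):
        s = (a[k] if k < len(a) else 0) + (b[k] if k < len(b) else 0) + carry
        if s > 1:                 # s is +2 or +3: emit s - 3, carry +1
            s, carry = s - 3, 1
        elif s < -1:              # s is -2 or -3: emit s + 3, carry -1
            s, carry = s + 3, -1
        else:                     # s already a valid trit, no carry
            carry = 0
        result.append(s)
    if carry:
        result.append(carry)      # final carry becomes a new leading trit
    return result


twelve = add_balanced([-1, -1, 1], [1, -1, 1])   # 5 + 7
```

For $5+7$ the only carry is the $-1$ generated in the middle position, and the result `[0, 1, 1]` evaluates to 12.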

Negation: flip signs of all trits.

Rounding: truncating the fractional ternary expansion equals rounding to nearest (ties handled symmetrically), simplifying fixed‑point arithmetic and interval analysis. (Wikipedia)

Appendix B — Radix‑economy derivation (sketch)

With $r^w=N$ and cost $C=r\cdot w$, substituting $w=\log_r N=\ln N/\ln r$ gives $C(r)=r\,\ln N/\ln r$. Minimizing $r/\ln r$ over $r>1$ yields optimum $r=e$; among integers, $r=3$ minimizes $r/\ln r$, making base‑3 the discrete optimum for this cost model. (American Scientist, Wikipedia)
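For completeness, the minimization step can be written out; this is the standard calculus argument, shown as a worked sketch with the same symbols as above.

$$
\frac{d}{dr}\left(\frac{r}{\ln r}\right)=\frac{\ln r-1}{(\ln r)^2},
$$

which is negative for $1<r<e$, zero at $\ln r=1$, and positive for $r>e$, so $r=e$ is the unique minimum on $r>1$. Comparing the neighboring integers:

$$
\frac{2}{\ln 2}\approx 2.885,\qquad \frac{3}{\ln 3}\approx 2.731,\qquad \frac{4}{\ln 4}\approx 2.885 .
$$

Radices 2 and 4 tie exactly because $\ln 4 = 2\ln 2$, and since $r/\ln r$ increases for $r>e$, every integer above 4 costs more still.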

References (selected)

- Balanced ternary & Knuth’s remarks: Wikipedia overview (with rounding=truncation) and external quotations. (Wikipedia, Redgate Software)

- Radix economy: Hayes, American Scientist (“Third Base”); optimal‑radix notes. (American Scientist, Wikipedia)

- Setun & Setun‑70: historical summaries and technical papers. (Wikipedia, IFIP Open Digital Library)

- T‑CMOS (tunneling‑based, wafer‑scale demonstration): Nature Electronics 2019. (Yonsei University)

- Ternary NNs & LLMs: TWN; TTQ; BitNet b1.58 and open‑source successors. (ResearchGate, arXiv)

- Multi‑level memory/IMC: PCM/ReRAM for multi‑level and ternary logic. (PMC, umji.sjtu.edu.cn)

- Three‑level signaling in practice: automotive Ethernet PAM‑3; PAM‑3 vs PAM‑4 considerations. (cdn.teledynelecroy.com, IEEE 802)

- Three‑valued logics (Kleene/Łukasiewicz/LP): Open Logic Project primers; SEP overview. (Open Logic Project Builds, Stanford Encyclopedia of Philosophy)

- Landauer’s bound (generalized to $kT\ln d$): original paper and summaries. (Worrydream)

Epilogue: where the “truth‑seeking” lives

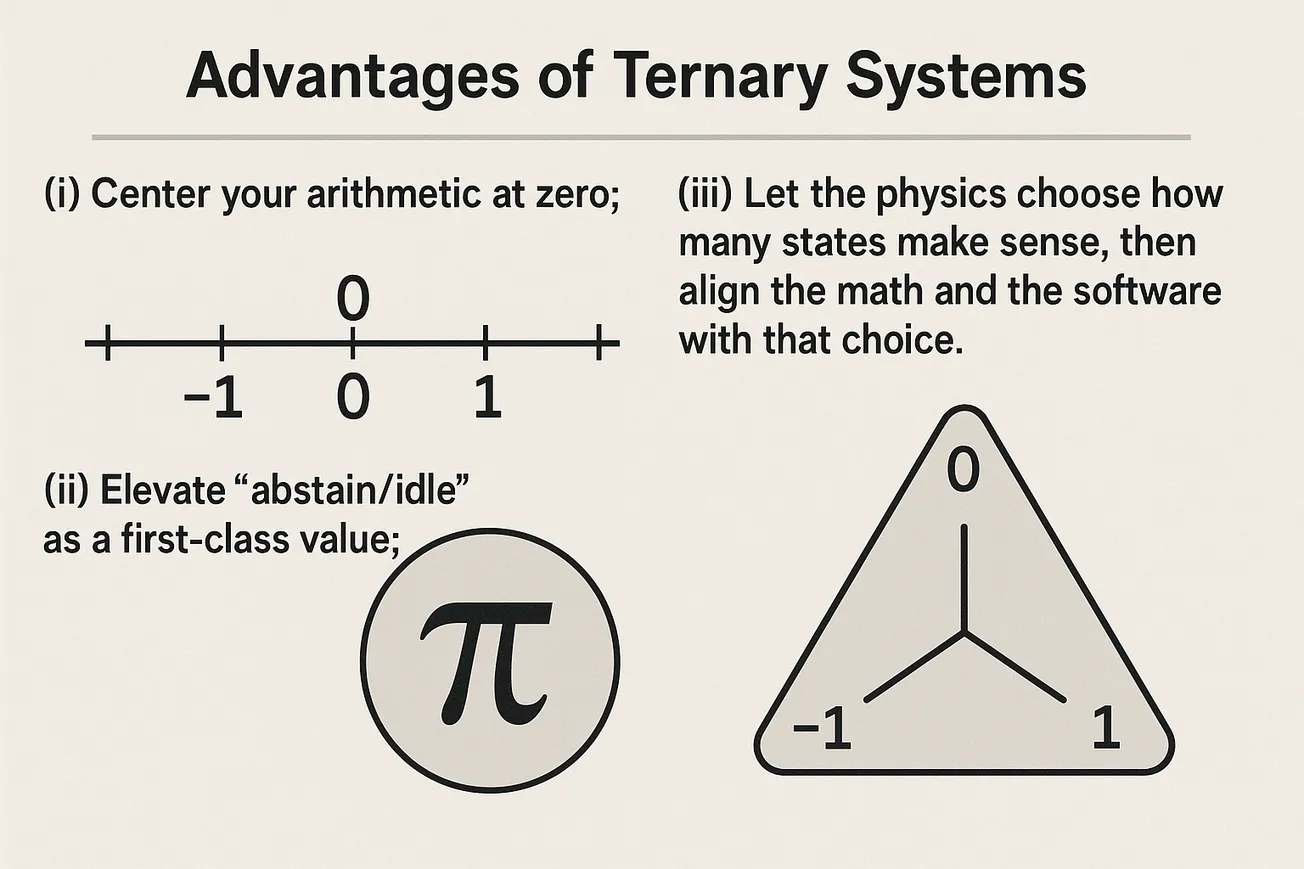

Balanced ternary is not mystical; it is a design choice with deep symmetry. Its most enduring contributions are conceptual: (i) center your arithmetic at zero; (ii) elevate “abstain/idle” as a first‑class value; (iii) let the physics choose how many states make sense, then align the math and the software with that choice. In the near term, three‑level devices, memories, links, and models already coexist with binary logic and often improve it. If the tunneling‑based path keeps maturing and ternary standard cells enter foundry kits, the case for full $[-1,0,+1]$ compute will be empirical, not polemical—as it should be. (Yonsei University)

AI Assistance

ChatGPT 5Pro
