Transliteration is not translation—but it is the keystone that makes reference possible, learning feasible, computation effective, and justice in naming attainable.

When we preserve form carefully, we create the conditions under which meaning can be found.

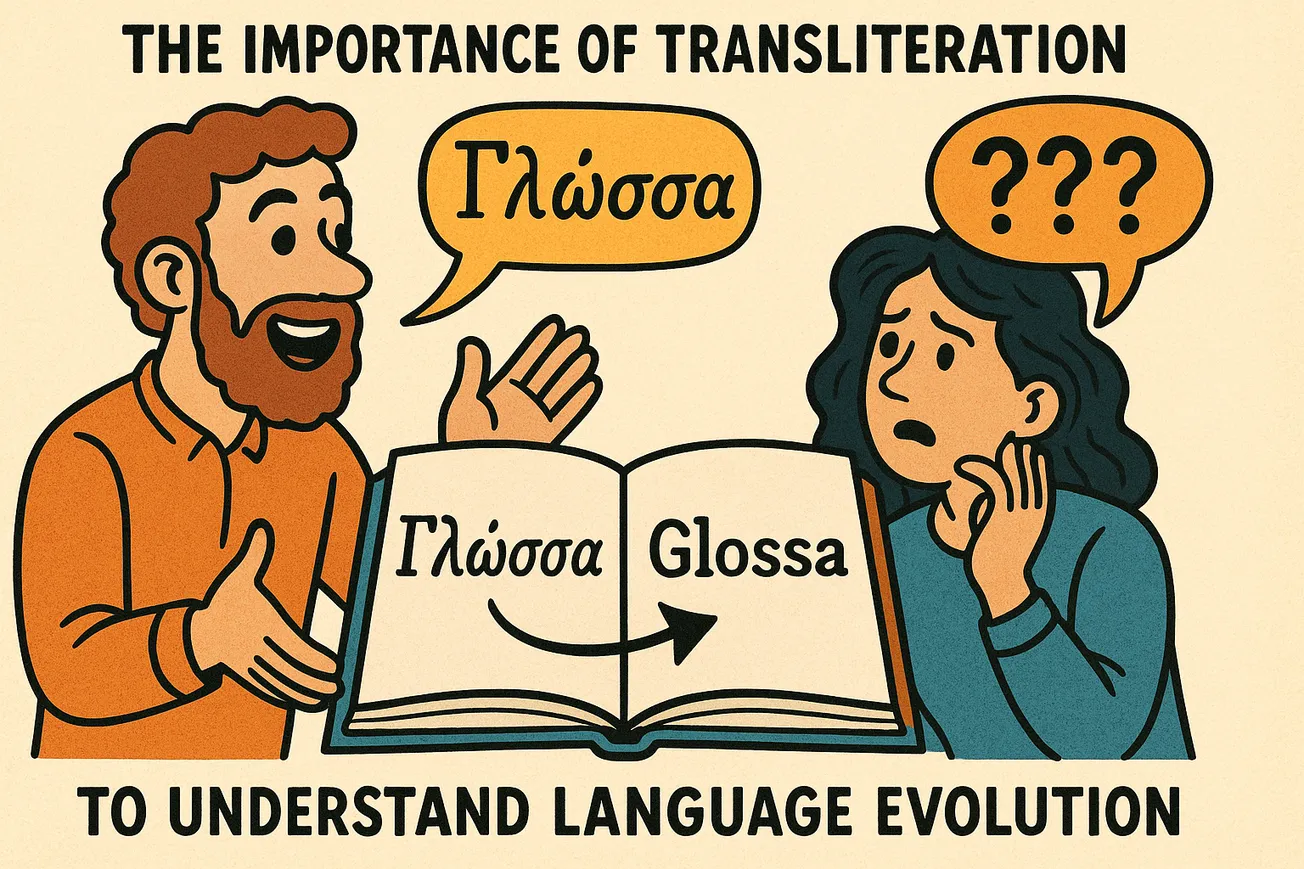

Transliteration is often dismissed as a convenience—a way to “spell foreign words with our letters.”

This dissertation argues the opposite: transliteration is a foundational technology of understanding.

It is not translation, nor is it merely phonetic transcription; it is a disciplined mapping between writing systems that preserves form to make meaning tractable.

I develop:

(i) a formal account of transliteration as an information-preserving transduction;

(ii) a cognitive account connecting graphemic form to lexical access;

(iii) a sociopolitical account of names, power, and identity; and

(iv) computational implications for search, knowledge integration, and fairness.

Through cross‑script case studies (Arabic, Cyrillic, Devanagari↔Nastaliq, Greek, Hebrew, Chinese, Japanese, Korean), I show that transliteration is the minimal bridge by which we can stably refer across scripts, align distributional semantics, and ultimately unlock translation. The central thesis: transliteration is not translation—but without it, large parts of language remain unreachable, unsearchable, and, in practice, unintelligible.

Keywords: transliteration, transcription, translation, grapheme, orthography, invertibility, finite‑state transduction, orthographic depth, cross‑lingual IR, knowledge graphs, names, fairness.

1. Introduction: Why form matters for meaning

Translation converts meanings across languages. Transliteration converts forms across scripts. These are not interchangeable. When we translate Россия as “Russia,” we pass through a semantic door; when we transliterate it as Rossiya, we build a stable handle for reference, retrieval, and learning before semantics are known. That handle does essential work:

- Reference: Proper names, technical terms, and rare lexemes often have no immediate translation. Transliteration lets us point to the same entity across systems (e.g., חנוכה → Hanukkah/Chanukah).

- Learnability: Beginning readers and second‑language learners need a graphemic scaffold that approximates pronunciation and exposes morphological structure (e.g., Greek φιλοσοφία → filosofía preserves morphemes filo- ‘love’ + -sofía ‘wisdom’).

- Computation: Cross‑script search, de‑duplication, and knowledge graph alignment begin with form‑preserving mappings. Without a transliteration layer, “Beijing/北京,” “Khayyam/خیّام,” and “Tchaikovsky/Чайковский” can remain isolated islands in data.

This work claims that transliteration is an epistemic technology: it preserves just enough form to make unknown words queryable, comparable, and teachable. Only then can translation—grounded in meaning—complete the job.

2. Conceptual clarifications

2.1 Transliteration vs. transcription vs. translation

- Transliteration: grapheme‑to‑grapheme mapping between scripts (e.g., Cyrillic д → Latin d), typically systematic and (ideally) invertible.

- Transcription: sound‑to‑grapheme mapping (phonemic or phonetic), often language‑specific and not invertible (e.g., Mandarin 北京 → Běijīng via Pinyin).

- Translation: meaning‑to‑meaning mapping (e.g., чёрный хлеб → “rye bread”), indifferent to form.

Transliteration preserves orthographic structure; transcription preserves phonological structure; translation preserves semantic structure. In practice, systems mix these (e.g., “Hepburn” romaji is a transcriptional romanization; IAST for Sanskrit is mainly transliteration).

2.2 Script types and consequences

- Alphabet (Greek, Latin): symbols for consonants and vowels.

- Abjad (Arabic, Hebrew): consonant‑dominant; short vowels variably marked, affecting invertibility.

- Abugida (Devanagari): consonant bases plus vowel diacritics.

- Syllabary (Kana): symbols approximate syllables.

- Morphosyllabic/logographic (Han characters): morpheme‑centered; transliteration of characters is ill‑defined, so systems adopt phonemic transcription for readings (e.g., Pinyin).

These typological facts constrain what “form preservation” can mean and how much diacritic “budget” is needed to avoid information loss.

3. A formal account: transliteration as information‑preserving transduction

Let $\Sigma_s$ and $\Sigma_t$ be the source and target grapheme alphabets, and $f: \Sigma_s^* \to \Sigma_t^*$ the transliteration function.

3.1 Desiderata

- Determinism: same source string → same output.

- Stability: invariant across contexts unless rule‑governed (e.g., position‑dependent mappings).

- Invertibility (when feasible): existence of an inverse $f^{-1}$ with $f^{-1}(f(s)) = s$, once ambiguities are resolved by diacritics or other marks.

- Transparency: visible correspondence between source segments and output segments.
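A minimal Python sketch of how the determinism and invertibility desiderata can be checked for a character-level table. The table `TOY` is a hypothetical fragment, not a published standard. A Python dict is deterministic by construction, so the check looks for the two ways invertibility fails: two source letters sharing one output, and one output that can also be spelled as a concatenation of two others (only two-piece splits are checked).

```python
from collections import defaultdict
from itertools import product

# Hypothetical Cyrillic-to-Latin fragment (not a published standard).
TOY = {"т": "t", "с": "s", "ц": "ts", "ш": "sh", "щ": "sh"}

def find_collisions(table: dict[str, str]) -> dict[str, list[str]]:
    """Outputs produced by more than one source grapheme (breaks invertibility)."""
    by_output = defaultdict(list)
    for src, out in table.items():
        by_output[out].append(src)
    return {out: sorted(srcs) for out, srcs in by_output.items() if len(srcs) > 1}

def find_split_ambiguities(table: dict[str, str]) -> list[tuple[str, str, str]]:
    """Outputs that can also be read as the concatenation of two other outputs."""
    outputs = set(table.values())
    return sorted((a + b, a, b) for a, b in product(outputs, repeat=2) if a + b in outputs)

print(find_collisions(TOY))         # ш and щ both become "sh"
print(find_split_ambiguities(TOY))  # "ts" could be ц or т + с
```

For a full table, a complete unique-decodability test (for example, the Sardinas–Patterson algorithm) is the more rigorous replacement for the two-piece split check.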

3.2 Invertibility, entropy, and diacritics

- If $f$ is many‑to‑one (e.g., collapsing both ш and щ to sh) and the colliding sources both occur, the conditional entropy $H(S \mid f(S)) > 0$: information is lost.

- Proposition (loss bound): To render an abjad‑to‑alphabet mapping (nearly) invertible, the added marks must carry, on average, at least $H(S \mid f(S))$ bits, i.e., the information the plain mapping discards through underspecified vowels and ambiguous consonants. Practically, you need one or more of (i) diacritics (e.g., ḥ, ḍ, ṣ), (ii) digraphs (kh, gh), or (iii) segmentation cues (apostrophes for glottal stop).
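The conditional entropy $H(S \mid f(S))$ can be computed directly. This Python sketch uses hypothetical letter frequencies (not corpus data) for three Cyrillic letters: collapsing ш and щ to sh leaves about 0.32 bits of uncertainty per letter, which is the minimum average information any diacritic or digraph scheme must add back; mapping щ to a distinct digraph (shch) brings the conditional entropy to zero.

```python
import math
from collections import defaultdict

def conditional_entropy(dist: dict[str, float], f) -> float:
    """H(S | f(S)) in bits, for a finite distribution {source: probability}."""
    groups = defaultdict(list)  # output -> probabilities of the sources that produce it
    for s, p in dist.items():
        groups[f(s)].append(p)
    h = 0.0
    for probs in groups.values():
        total = sum(probs)  # P(f(S) = output)
        h -= sum(p * math.log2(p / total) for p in probs if p > 0)
    return h

DIST = {"ш": 0.3, "щ": 0.1, "с": 0.6}          # hypothetical frequencies
PLAIN = {"ш": "sh", "щ": "sh", "с": "s"}        # many-to-one
ENRICHED = {"ш": "sh", "щ": "shch", "с": "s"}   # injective

print(conditional_entropy(DIST, PLAIN.get))     # about 0.32 bits
print(conditional_entropy(DIST, ENRICHED.get))  # 0.0
```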

3.3 Finite‑state formulation

Transliteration can be realized as a Weighted Finite‑State Transducer (WFST) over graphemes. Advantages:

- Encodes context‑sensitive rules (e.g., Arabic sun‑letter assimilation).

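- Can be inverted (by swapping input and output labels) and composed with the forward transducer, so the same rules yield a back‑transducer for round‑trip diagnostics.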

- Supports n‑best outputs where ambiguity is inherent (useful for search and OCR correction).
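To illustrate the n-best behavior, here is a brute-force Python sketch over a hand-segmented lattice for Чайковский. The segments and integer costs are hypothetical (lower means more preferred). A WFST toolkit would obtain the same ranking with a k-shortest-path search over the transducer instead of enumerating every path as this sketch does.

```python
import heapq
from itertools import product

# Hypothetical lattice for Чайковский: (output, cost) alternatives per source segment.
LATTICE = [
    [("Ch", 0), ("Tch", 2)],    # Ч
    [("ai", 0), ("ay", 3)],     # ай
    [("kov", 0)],               # ков
    [("sky", 0), ("skiy", 1)],  # ский
]

def n_best(lattice, n):
    """Return the n lowest-cost paths as (string, cost) pairs (brute-force enumeration)."""
    paths = (
        ("".join(out for out, _ in choice), sum(cost for _, cost in choice))
        for choice in product(*lattice)
    )
    return heapq.nsmallest(n, paths, key=lambda path: path[1])

print(n_best(LATTICE, 3))  # Chaikovsky (0), Chaikovskiy (1), Tchaikovsky (2)
```

Keeping the lower-ranked but conventional Tchaikovsky in the n-best list is what lets a search index treat it as an alias rather than a miss.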

3.4 Evaluation metrics

- Back‑transliteration accuracy (BAcc): $\Pr\{\hat{s} = s \mid f(s)\}$, where $\hat{s}$ is the string recovered by back‑transliterating $f(s)$.

- Form faithfulness (FF): character‑level alignment F1 between source segments and output segments.

- Operationality (OP): improvements in downstream tasks (IR MRR, entity‑linking F1) when a transliteration layer is added.

- Learnability (LE): time‑to‑lexicalization for L2 learners given a transliteration scheme (measured in behavioral studies).

Triad objective: maximize $\lambda_1 \mathrm{BAcc} + \lambda_2 \mathrm{FF} + \lambda_3 \mathrm{OP}$, subject to usability constraints (diacritics budget, ASCII compatibility).
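A minimal sketch of how BAcc can be estimated on a test set and combined into the triad objective. The round-trip tables, the FF and OP values, and the weights are hypothetical placeholders, since the text does not fix values for the λ weights; the usability constraints are not modeled here.

```python
def back_transliteration_accuracy(sources, forward, backward) -> float:
    """Empirical BAcc: share of sources recovered exactly after forward + backward mapping."""
    hits = sum(backward(forward(s)) == s for s in sources)
    return hits / len(sources)

def triad_objective(bacc, ff, op, weights=(1.0, 1.0, 1.0)) -> float:
    """lambda1*BAcc + lambda2*FF + lambda3*OP; the weights are a design choice."""
    l1, l2, l3 = weights
    return l1 * bacc + l2 * ff + l3 * op

# Hypothetical round-trip tables.
SOURCES = ["ш", "щ", "с"]
FORWARD = {"ш": "sh", "щ": "sh", "с": "s"}  # ш and щ collide
BACKWARD = {"sh": "ш", "s": "с"}             # so щ cannot be recovered

bacc = back_transliteration_accuracy(SOURCES, FORWARD.get, BACKWARD.get)  # 2/3
print(bacc, triad_objective(bacc, ff=0.9, op=0.05, weights=(0.5, 0.3, 0.2)))
```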

4. Cognitive and hermeneutic payoffs

4.1 Orthographic depth and access

Languages differ in how tightly spelling tracks sound. Transliteration can make an orthography effectively shallower for L2 readers (e.g., Japanese long vowels written おう surface as the macron ō), supporting more reliable grapheme→phoneme mapping and faster lexical access.

4.2 Morphological transparency

A form‑preserving mapping exposes morpheme boundaries that transcription might blur. Compare Hebrew מלכויות → malkhuyot (root m‑l‑k ‘rule’ + ‑uy‑ + plural ‑ot) with the popular spelling “malchuyot”: because informal romanization uses ch for both כ and ח, the root consonant can no longer be identified from the Latin form.

4.3 Hermeneutic humility

Transliteration postpones premature semantic commitments. It lets us cite before we interpret. For religious, legal, and technical texts, maintaining the form avoids injecting meaning not present in the original.

5. Sociopolitics, names, and fairness

5.1 Names as identity

Names are not merely labels; they are claims. Choices such as Kyiv (from Ukrainian Київ) vs. Kiev (from Russian Киев), or Mumbai vs. Bombay (Marathi मुंबई), encode political alignment. Transliteration choices are normative acts.

5.2 The ethics of romanization defaults

Global systems privilege ASCII pipelines. When diacritics vanish (Gül → Gul), identities flatten. Consequences include mis‑indexing in scholarly databases, mispronunciation by officials reading public records, and bias in hiring systems that match on romanized strings.

Principle of Respectful Reversibility: for names, prefer schemes that allow recovery of the original script or supply an authoritative canonical form alongside a display form.

5.3 Cross‑script equity in information access

Public search, legal traceability, and health records depend on stable strings. A robust transliteration layer reduces false negatives in background checks, increases recall in medical history linkage, and curtails disparate error rates for non‑Latin scripts.

6. Computational consequences

6.1 Cross‑lingual information retrieval (CLIR)

Query marhaban should match مرحبًا even when the database lacks transliterations. An IR system benefits from:

- On‑the‑fly transliteration of queries and index terms.

- N‑best lattices for ambiguous source segments.

- Fusion of transliteration and translation results, re‑ranked by semantic signals.
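One simple way to let marhaban match an untransliterated Arabic index term is to compare consonant skeletons. The Python sketch below is a toy under stated assumptions: the letter table is hypothetical and covers only the letters in the example, Latin vowels are discarded, and an optional final -n in the query stands for tanwīn, which is often left unwritten. A production system would use full tables and the n-best lattices listed above rather than this single key.

```python
# Hypothetical skeleton table: only the letters needed for the example.
AR_SKELETON = {
    "\u0645": "m",  # م mīm
    "\u0631": "r",  # ر rāʾ
    "\u062D": "h",  # ح ḥāʾ (ASCII fallback)
    "\u0628": "b",  # ب bāʾ
    "\u0627": "",   # ا alif, treated as a vowel carrier
    "\u064B": "n",  # tanwīn fatḥ, pronounced -an
}
LATIN_VOWELS = set("aeiou")

def arabic_key(term: str) -> str:
    out = []
    for ch in term:
        if "\u064C" <= ch <= "\u0652":  # other vowel marks, shadda, sukūn: ignored
            continue
        out.append(AR_SKELETON.get(ch, ch))  # unknown letters kept verbatim, so they never match
    return "".join(out)

def query_keys(query: str) -> set[str]:
    """Consonant skeleton of a Latin query, plus a variant without final -n."""
    skeleton = "".join(ch for ch in query.lower() if ch not in LATIN_VOWELS)
    return {skeleton, skeleton[:-1]} if skeleton.endswith("n") else {skeleton}

def search(query: str, index_terms: list[str]) -> list[str]:
    keys = query_keys(query)
    return [term for term in index_terms if arabic_key(term) in keys]

INDEX = ["\u0645\u0631\u062D\u0628\u064B\u0627",  # مرحبًا (with tanwīn)
         "\u0645\u0631\u062D\u0628\u0627",        # مرحبا (without)
         "\u0645\u0643\u062A\u0628\u0629"]        # مكتبة (unrelated)
print(search("marhaban", INDEX))  # the first two terms
```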

6.2 Entity resolution and knowledge graphs

Entities appear as Россия, Rossiya, Russia, Росія. Canonicalization requires a transliteration‑aware string similarity (edit distance under script mapping) coupled with language detection. Without it, graphs fragment.
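A minimal sketch of transliteration-aware similarity: map both strings into a common Latin space, then take the Levenshtein distance. The Cyrillic table is a hypothetical fragment covering only the letters used here, and the language-detection step is omitted.

```python
# Hypothetical Cyrillic-to-Latin fragment covering only the letters below.
CYR2LAT = {
    "\u0440": "r",   # р
    "\u043E": "o",   # о
    "\u0441": "s",   # с
    "\u0438": "i",   # и (Russian)
    "\u0456": "i",   # і (Ukrainian)
    "\u044F": "ya",  # я
}

def to_latin(s: str) -> str:
    return "".join(CYR2LAT.get(ch, ch) for ch in s.lower())

def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance with unit costs."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]

def script_aware_distance(a: str, b: str) -> int:
    return levenshtein(to_latin(a), to_latin(b))

RU = "\u0420\u043E\u0441\u0441\u0438\u044F"  # Россия
UK = "\u0420\u043E\u0441\u0456\u044F"        # Росія
print(levenshtein(RU, "Rossiya"))            # 7: no shared characters
print(script_aware_distance(RU, "Rossiya"))  # 0
print(script_aware_distance(UK, "Rossiya"))  # 1
```

Russia itself stays far from the others under any string metric, because it is an exonym rather than a transliteration; linking it requires an alias table.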

6.3 OCR/ASR and code‑switching

Historical OCR on Gothic or Nastaliq scripts produces noisy graphemes. A WFST‑based transliteration normalizes forms into a common space where language models can repair errors. In informal, speech‑like social media writing, users code‑switch and transliterate ad hoc (7abibi for ḥabībī); robust systems must model these community norms too.
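A minimal Python sketch of modeling one such community norm, the Arabic chat alphabet. The numeral table is an assumption covering widely used values (2, 3, 5, 7); 9 is left out because its value varies by region, and long vowels (ḥabībī) are not restored.

```python
import re

# Widely used chat numerals (an assumption; usage varies by region).
ARABIZI = {
    "2": "\u02BE",  # ʾ hamza
    "3": "\u02BF",  # ʿ ʿayn
    "5": "kh",      # خ khāʾ
    "7": "\u1E25",  # ḥ ḥāʾ
}

def normalize_arabizi(text: str) -> str:
    """Rewrite chat numerals inside words; tokens made only of digits are left alone."""
    def fix(match: re.Match) -> str:
        word = match.group(0)
        if word.isdigit():
            return word
        return "".join(ARABIZI.get(ch, ch) for ch in word)
    return re.sub(r"\w+", fix, text)

print(normalize_arabizi("7abibi, see you in 3 days"))  # ḥabibi, see you in 3 days
```

Mixed tokens such as "2nd" would still be rewritten, which is why this step belongs behind language or script detection in a real pipeline.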

6.4 Learning transliterators

- Rule‑based: explicit tables + context rules (transparent, maintainable).

- Grapheme seq2seq: neural models trained on aligned pairs (high coverage, needs data).

- Hybrid: rule lattice rescored by a neural model (best of both).

Data sources: bilingual gazetteers, parallel name lists, Wikidata labels, onomasticon corpora, mined transliteration pairs from aligned pages.

Evaluation: beyond accuracy, test fairness: is error equitably distributed across scripts and communities?
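The fairness check can start as simply as reporting error rates per slice and the largest gap between slices. A Python sketch; the slice labels and outcomes are made-up placeholders, not measurements.

```python
from collections import defaultdict

def error_by_slice(results) -> dict[str, float]:
    """results: iterable of (slice_label, correct) pairs -> error rate per slice."""
    totals, errors = defaultdict(int), defaultdict(int)
    for label, correct in results:
        totals[label] += 1
        errors[label] += not correct
    return {label: errors[label] / totals[label] for label in totals}

def max_gap(rates: dict[str, float]) -> float:
    """Largest difference in error rate between any two slices."""
    return max(rates.values()) - min(rates.values())

RESULTS = [("Cyrillic", True), ("Cyrillic", True), ("Cyrillic", False), ("Cyrillic", True),
           ("Arabic", False), ("Arabic", True)]  # placeholder outcomes
rates = error_by_slice(RESULTS)
print(rates, max_gap(rates))  # Cyrillic 0.25, Arabic 0.5, gap 0.25
```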

7. Case studies: trade‑offs in the wild

7.1 Arabic (abjad)

- مرحبًا → marḥaban/marhaban: macrons or underdots (ḥ) improve invertibility and pronunciation.

- Sun‑letter assimilation (al + shams → ash‑shams): encode or ignore? For search, provide both.

- Ta‑marbūṭa ة: ‑a(h) word‑final—marking helps back‑transliteration.

Design choice: a dual‑track system—(A) scholarly form with diacritics, (B) ASCII form (marhaban). Maintain canonical > display > search variants.
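A Python sketch of the dual-track idea applied to the definite article. The canonical form assimilates al- before sun letters, while the search layer also keeps the unassimilated spelling (which mirrors the Arabic script), hyphen-less forms, and ASCII folds. The sun-letter list, written in one common scholarly romanization, is an assumption of this sketch, and nouns are expected to be romanized already.

```python
import unicodedata

# Sun letters in a common scholarly romanization; digraphs come first so "sh" wins over "s".
SUN_LETTERS = ("th", "dh", "sh", "t", "d", "r", "z", "s",
               "\u1E63", "\u1E0D", "\u1E6D", "\u1E93",  # ṣ ḍ ṭ ẓ
               "l", "n")

def ascii_fold(s: str) -> str:
    """Drop combining marks after canonical decomposition (ṣ -> s, ā -> a)."""
    return "".join(c for c in unicodedata.normalize("NFD", s) if not unicodedata.combining(c))

def article_variants(noun: str) -> dict:
    """Canonical form and search variants for al- + a romanized noun."""
    noun = unicodedata.normalize("NFC", noun)
    sun = next((c for c in SUN_LETTERS if noun.startswith(c)), None)
    canonical = f"a{sun}-{noun}" if sun else f"al-{noun}"  # ash-shams vs. al-qamar
    written = f"al-{noun}"                                   # follows the written ال
    forms = {canonical, written, canonical.replace("-", ""), written.replace("-", "")}
    forms |= {ascii_fold(form) for form in forms}
    return {"canonical": canonical, "search": sorted(forms)}

print(article_variants("shams"))  # canonical ash-shams; search adds al-shams, alshams, ashshams
print(article_variants("qamar"))  # canonical al-qamar
```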

7.2 Hebrew (abjad with optional vowel points)

- חנוכה → Hanukkah/Chanukah: ח is [χ]/[ħ] ~ h/ch; community conventions matter.

- Dagesh doubling: kk in Hanukkah preserves gemination, useful for learners.

7.3 Russian (alphabet)

- Россия → Rossiya: reflects и → i and я → ya, as in popular BGN/PCGN‑style romanization. The scholarly (scientific) system gives Rossija.

- Чайковский → Chaikovsky/Tchaikovsky: French‑legacy forms persist. Policy: store canonical transliteration + alias spellings for recall.

7.4 Greek (alphabet with historical layers)

- φιλοσοφία → filosofía: transparent morphemes support etymology mining (e.g., cognate alignment with Romance derivatives).

- χ → ch vs. kh: choose one, provide alias rules for search.

7.5 Sanskrit/Hindi ↔ Urdu (Devanagari ↔ Nastaliq)

- किताब / کتاب → kitāb: a textbook case where transliteration unifies two scripts of one lexicon.

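- Schwa deletion in Hindi: the orthography leaves an inherent a implicit after every consonant letter, but speech drops it in many positions (word‑finally, किताब is kitāb, not kitāba). Deciding whether to surface that a trades learnability against speech‑likeness.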
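A minimal Python sketch of the abugida logic behind this choice: each consonant letter carries an inherent a unless a vowel sign (mātrā) or virama follows it, and an optional flag deletes the word-final inherent vowel. The tables are a hypothetical fragment covering only a few letters; medial schwa deletion needs further context rules and is not attempted.

```python
# Hypothetical fragment: a few consonants and vowel signs, IAST-style output.
CONSONANTS = {"\u0915": "k", "\u0924": "t", "\u092C": "b",   # क त ब
              "\u092E": "m", "\u0930": "r", "\u0932": "l"}   # म र ल
MATRAS = {"\u093E": "ā", "\u093F": "i", "\u0940": "ī",        # ा ि ी
          "\u0941": "u", "\u0942": "ū", "\u0947": "e", "\u094B": "o"}  # ु ू े ो
VIRAMA = "\u094D"  # ् suppresses the inherent vowel

def deva_to_latin(word: str, drop_final_schwa: bool = False) -> str:
    out = []
    for i, ch in enumerate(word):
        if ch in CONSONANTS:
            out.append(CONSONANTS[ch])
            nxt = word[i + 1] if i + 1 < len(word) else ""
            if nxt not in MATRAS and nxt != VIRAMA:
                out.append("a")  # inherent vowel: implied, not written
        elif ch in MATRAS:
            out.append(MATRAS[ch])
        elif ch != VIRAMA:
            out.append(ch)  # anything outside the fragment passes through
    if drop_final_schwa and word and word[-1] in CONSONANTS:
        out.pop()  # remove the inherent a added after the final consonant
    return "".join(out)

KITAB = "\u0915\u093F\u0924\u093E\u092C"  # किताब
print(deva_to_latin(KITAB))                         # kitāba (every inherent a surfaced)
print(deva_to_latin(KITAB, drop_final_schwa=True))  # kitāb
```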

7.6 Chinese

- Han characters lack a grapheme‑to‑grapheme Latin mapping. Hanyu Pinyin is transcription, not transliteration. Consequence: 北京 → Běijīng can’t be uniquely back‑transliterated to the characters without lexicon context.

- Design implication: treat Pinyin as an auxiliary script with tone marks for learners; for IR, index characters + pinyin to bridge Roman‑script queries.
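A minimal Python sketch of indexing characters together with toneless Pinyin, so that a Roman-script query such as beijing reaches the characters. The two-entry lexicon is an illustrative assumption; it also shows why back-projection needs context, since 北京 'Beijing' and 背景 'background' share the same toneless key.

```python
import unicodedata
from collections import defaultdict

def strip_tones(pinyin: str) -> str:
    """Běijīng -> beijing: decompose, drop combining tone marks, drop spaces, lowercase."""
    decomposed = unicodedata.normalize("NFD", pinyin)
    return "".join(c for c in decomposed
                   if not unicodedata.combining(c) and not c.isspace()).lower()

def build_index(entries) -> dict[str, list[str]]:
    """entries: (characters, tone-marked pinyin) pairs -> toneless key -> characters."""
    index = defaultdict(list)
    for hanzi, pinyin in entries:
        index[strip_tones(pinyin)].append(hanzi)
    return dict(index)

LEXICON = [("北京", "Běijīng"), ("背景", "bèijǐng")]  # illustrative entries
index = build_index(LEXICON)
print(index["beijing"])  # both entries: the toneless key alone cannot choose between them
```

The same folding turns ü into u, so lü and lu also collide; systems that care typically keep a separate key (for example, v for ü).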

7.7 Japanese

- Hepburn targets pronunciation for Anglophones (transcription); Nihon‑shiki is closer to kana structure (transliteration‑like).

- Long vowels: ō, ū vs. ou, uu vs. doubling. For pedagogy, macrons minimize ambiguity; for ASCII pipelines, accept ou/uu aliases.
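A Python sketch of accepting ASCII aliases by expanding each macron vowel into the spellings listed above. The alias table is an assumed search policy, not part of any romanization standard, and it deliberately over-generates (ou is right for some words and wrong for others), since an extra search key costs less than a missed match.

```python
import unicodedata
from itertools import product

# Assumed alias policy: macron, kana-style or doubled vowel, plain vowel.
LONG_VOWEL_ALIASES = {
    "\u014D": ("\u014D", "ou", "oo", "o"),  # ō
    "\u016B": ("\u016B", "uu", "u"),        # ū
}

def long_vowel_variants(word: str) -> set[str]:
    word = unicodedata.normalize("NFC", word)  # make ō a single code point
    options = [LONG_VOWEL_ALIASES.get(ch, (ch,)) for ch in word]
    return {"".join(choice) for choice in product(*options)}

variants = long_vowel_variants("T\u014Dky\u014D")  # Tōkyō
print(len(variants))                                    # 16
print({"Tokyo", "Toukyou", "Tookyoo"} <= variants)      # True
```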

7.8 Korean

- Revised Romanization vs. McCune–Reischauer: the former avoids diacritics, improving adoption but increasing homography. Maintain both in canonicalization layers.

8. A methodology for “unknown unknowns”: probing with transliteration stress tests

To discover failures before they harm users:

- Ambiguity sets: Construct minimal pairs that collapse under a given scheme (Arabic s/ṣ, Russian e/ё), measure confusion in retrieval and NER.

- Dialectal variants: Model community transliterations (e.g., the chat numerals 3, 7, and 9 for Arabic letters without a Latin counterpart: ʿayn, ḥāʾ, and, depending on region, qāf or ṣād).

- Name drift: Track temporal changes (e.g., political renamings) and ensure alias expansion is versioned.

- Script mixing: Evaluate on real code‑switch corpora (Hindi‑English in Latin script).

- Fairness slices: Audit error across ethnic and gendered names to detect disparate performance.

These stress tests uncover unknown unknowns—mismatches in assumptions about scripts, communities, and pipelines.

9. Design principles and a practical protocol

- Start with the source: Choose a scheme grounded in the source orthography; document irrecoverable losses.

- Make invertibility explicit: If you cannot guarantee it, provide a canonical form in the original script alongside any display form.

- Budget diacritics: Use the minimal set that restores critical contrasts; offer ASCII fallbacks for systems that require it.

- Encode segmentation: Mark glottal stops, gemination, and morpheme boundaries where they change meaning or retrieval.

- Separate layers: Canonical (for storage), Scholarly (rich diacritics), Display (user‑friendly), Search (expanded aliases).

- Version and localize: Allow communities to choose display conventions while preserving a single canonical backbone.

- Evaluate downstream: Optimize for improvements in retrieval, alignment, and learning—not for elegance alone.

- Be political on purpose: For exonyms/endonyms and contested forms, document rationale and provide alternatives.

10. How transliteration enables better translation

Pipeline view:

(a) Detect language + script → (b) Transliterate to bridge forms → (c) Retrieve comparable texts / candidate entities → (d) Align senses → (e) Translate.

Adding (b) is expected to increase recall of rare named entities and technical terms, which anchor sentence meaning. When proper names resolve correctly, downstream MT no longer has to guess their target forms, which reduces mistranslated and hallucinated names.

11. A compact theory: transliteration as epistemic scaffolding

Let $E$ be the set of expressions, $R$ the set of referents, and $M$ the set of meanings, with $E_s, E_t \subseteq E$ the source‑ and target‑script expressions. Translation maps $E_s \to M$ and then $M \to E_t$. Transliteration constructs a partial isomorphism $\phi: \langle E_s, \text{form} \rangle \to \langle E_t, \text{form} \rangle$ that preserves indices of reference across scripts (for a reference map $\mathrm{ref}: E \to R$, $\mathrm{ref}(\phi(e)) = \mathrm{ref}(e)$ wherever $\phi$ is defined) without committing to $M$. This scaffold reduces uncertainty in both reference and sense disambiguation, enabling more reliable inferences in $M$.

Corollary: In systems where form is the only available signal (indexes, catalogs, legal identifiers), transliteration is not merely helpful; it is the condition of possibility for understanding anything at all.

12. Implementation blueprint (operational detail)

- Specification: Write rules as left‑to‑right context‑sensitive mappings with priority ordering; explicitly handle word boundaries, diacritics, and assimilation.

- WFST construction: Compile rules into a cascade; add penalties for less common correspondences; compose with back‑transducer for diagnostics.

- Neural rescoring: Train a grapheme seq2seq (Transformers are sufficient) on aligned name pairs to rank n‑best outputs.

- Indexing strategy: Store canonical (original script), transliteration(s), and normalized ASCII. Use equivalence classes in databases.

- IR integration: Expand queries with n‑best transliterations and alias tables; re‑rank with contextual embeddings.

- Governance: Maintain a change log for mappings; include a community review process for contested names; provide per‑locale display policies.
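The equivalence classes in the indexing strategy can be maintained with a union-find structure, sketched here in Python as an in-memory stand-in for what a database would store. The class and method names are hypothetical; the root of each class is intended to be the canonical original-script form.

```python
class EquivalenceClasses:
    """Hypothetical helper: union-find over name variants, rooted at a canonical form."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, canonical: str, variant: str) -> None:
        root_c, root_v = self.find(canonical), self.find(variant)
        if root_c != root_v:
            self.parent[root_v] = root_c  # the canonical side's root stays the root

    def members(self, x: str) -> list[str]:
        root = self.find(x)
        return sorted(y for y in list(self.parent) if self.find(y) == root)

classes = EquivalenceClasses()
for alias in ["Rossiya", "Rossija", "Russia", "Росія"]:
    classes.union("Россия", alias)
print(classes.find("Russia"))      # Россия
print(classes.members("Rossija"))  # all five spellings
```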

13. Limitations and open problems

- True losslessness is impossible for underspecified scripts without diacritical augmentation or lexical context.

- Dialect and idiolect variation push against any single “standard.”

- Pinyin‑like systems for character‑based scripts require lexicons for back‑projection.

- Sociopolitical dynamics (renamings, endonyms) require ongoing curation rather than one‑time solutions.

14. Conclusion: Form is the first kindness

Transliteration does not tell us what a word means. It tells us how to carry the word—across alphabets, across systems, across worlds—so that meaning has a chance. In scholarship, computation, and daily life, the modest act of preserving form is the first kindness we extend to the other’s language. It is not translation; it is the condition that makes translation, retrieval, and understanding reliably possible.

Appendix A: Quick reference table

| Task | Best practice |
|---|---|
| Proper names in official records | Preserve original script + canonical transliteration; record display aliases. |
| Educational materials | Use diacritics to encode contrasts; mark long vowels and gemination. |
| Search & IR | Build n‑best transliteration lattices; unify canonical + display. |
| Knowledge graphs | Store script‑aware equivalence classes; audit for fragmentation. |
| Social platforms | Accept community transliterations; normalize for search while preserving user display. |
| Fairness | Report error by script/community; minimize diacritic loss in canonical forms. |

Appendix B: Illustrative examples (from the main text)

- Russian: Россия (Cyrillic) → Rossiya.

- Greek: φιλοσοφία → filosofía.

- Hebrew: חנוכה → Hanukkah/Chanukah.

- Arabic: مرحبًا → marḥaban/marhaban.

Appendix C: Minimal algorithm sketch (WFST)

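- Tokenize source into graphemes (keep base letters together with any combining marks; note that single letters such as щ can still map to multi‑letter outputs like shch).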

- Apply ordered rules (e.g., ш → sh; context: ё → yo/ë).

- Insert diacritics where needed (ḥ, ṭ, ʿ).

- Generate n‑best variants for ambiguous cases.

- Back‑transliterate for sanity checks; log collisions.

- Expose three outputs: canonical (diacritics), display (friendly), search (expanded).

Final thesis restated

Transliteration is not translation—but it is the keystone that makes reference possible, learning feasible, computation effective, and justice in naming attainable. When we preserve form carefully, we create the conditions under which meaning can be found.

AI Assistance

ChatGPT 5Pro