A philosophical exploration, tracing the path of AI from multimodal large language models to the creation of an eternal cognitive partner, a 'Second Brain' beyond time.

This will intertwine technical developments, metaphysical implications, and the pursuit of universal truths meaningful to all sentient beings. We are exploring the knowns, the unknowns, and the unknowables.

Second Brain Vision

Introduction

For centuries, humans have sought ways to extend their knowledge and preserve insights beyond the confines of individual minds and lifespans. From ancient libraries to modern cloud archives, this pursuit reflects a drive toward an “eternal” repository of wisdom. Today, advances in Artificial Intelligence (AI) are bringing us closer than ever to realizing a living, dynamic memory outside ourselves – essentially a “Second Brain.” This vision explores how AI, particularly large language models that evolved into multimodal systems with vast (even “infinite”) memory, can become an integral extension of our minds. In doing so, we delve into what such a union means for the quest for Truth, the continuity of consciousness, and the future of all sentient beings. The goal is to uncover and share enduring insights, significant and meaningful truths, for the benefit of all.

Human knowledge is growing at an exponential rate. By some estimates, the total amount of human knowledge was doubling only once every 100 years around 1900, but today it doubles roughly every 13 months. No single brain can hope to internalize this deluge. We are, as biologist E.O. Wilson put it, “drowning in information while starving for wisdom”. Yet, if we can find a way to capture and synthesize this ever-expanding knowledge, we may kindle the “fire” of understanding amidst the flood of data. An AI-augmented Second Brain promises to do exactly that: help us manage an effectively endless stream of information, distill truth from noise, and perhaps even transcend the limitations of time and memory.
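Taking those estimates at face value, a short calculation shows how different the two regimes are. If the stock of knowledge K doubles every T months, then after t months it has grown as follows (the figures are only as reliable as the rough estimates behind them):

```latex
K(t) = K_0 \cdot 2^{t/T}
\left.\frac{K(120)}{K_0}\right|_{T=13} = 2^{120/13} \approx 2^{9.23} \approx 600
\left.\frac{K(120)}{K_0}\right|_{T=1200} = 2^{120/1200} = 2^{0.1} \approx 1.07
```

Over a single decade, a 13-month doubling time multiplies the stock roughly 600-fold. At the 1900-era rate of one doubling per century, the same decade adds only about 7%.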

Artificial Intelligence: Toward Augmented Minds

Artificial Intelligence has progressed from a niche academic pursuit to a ubiquitous presence in daily life. Initially, AI systems were specialized tools for solving equations, classifying images, or playing games, and they were far from integrating with human thought. The last decade, however, has seen AI systems begin to serve as cognitive partners. Notably, the advent of powerful large language models (LLMs) has transformed AI’s role: these models can converse, write code, explain concepts, and generate knowledge in ways that often appear “human-like” or even superhuman. Modern LLMs are trained on massive textual datasets encompassing much of human literature and the internet, enabling them to generate and understand natural language with remarkable fluency. The public release of systems like OpenAI’s ChatGPT in late 2022 marked a tipping point – hundreds of millions of users have now experienced interacting with an AI “assistant,” and the explosive adoption of these tools attests to their usefulness in extending our thinking.

At their core, LLMs use neural network architectures (Transformers) trained to predict the next piece of text, yet their emergent abilities appear to go well beyond simple prediction. Researchers have been astonished by the breadth of tasks these models can perform. For example, Microsoft scientists argued that GPT-4 exhibits early signs of artificial general intelligence (AGI), after demonstrating that it could solve novel problems in mathematics, coding, vision, medicine, law, and psychology without specialized prompting, with performance strikingly close to human level. While debates continue about the true nature of such “intelligence,” there is little doubt that these models act as powerful amplifiers for human cognition. They can recall facts across an encyclopedic range of subjects (though not always accurately), combine information across domains, and even provide creative suggestions or non-obvious insights. In many narrow tasks (from language translation to trivia answering), AI models now match or exceed human experts in speed and sometimes in accuracy, prompting some to label their capabilities as “superhuman” in those respects.

Crucially, AI is shifting from being merely a tool we use to being a partner in thought. The concept of the “extended mind” from philosophy posits that tools and external resources can become part of our cognition. We already experience this when using a smartphone or search engine as a memory aid: as philosopher Andy Clark notes, the phone in your pocket effectively acts as an extension of your brain’s memory, expanding your cognitive ability by outsourcing storage and recall. AI takes this further: it can not only store information, but actively interpret, filter, and reason with it on our behalf. In essence, AI offers to augment our minds, turning our notebooks and databases from static archives into active collaborators. This sets the stage for a profound evolution: from individual human intellect to human–AI hybrid intellect, working in tandem.

The Rise of Large Language Models

A pivotal development enabling the Second Brain vision is the rise of Large Language Models. LLMs are deep learning models (often with billions of parameters) trained on vast corpora of text to predict and generate language. By internalizing patterns from books, articles, websites, and more, they build a statistical model of how humans express knowledge. The result is an AI that can produce coherent narratives, answer questions, and even engage in dialogue on virtually any topic covered in its training data. Early LLMs like GPT-2 (2019) were impressive but still limited in coherence. The introduction of GPT-3 (2020), with 175 billion parameters, dramatically improved quality and fluency, surprising observers with its ability to write essays, code, or creative fiction from simple prompts. This was followed by an entire ecosystem of ever-larger or more refined models (Jurassic, Megatron-Turing, PaLM, etc.), culminating in OpenAI’s GPT-4 (2023), which further improved reasoning and depth.

One striking aspect of GPT-4 and its contemporaries is their breadth of competence. These models can learn on the fly through prompts, performing tasks they were never explicitly trained for (a phenomenon known as in-context learning). This allows a single model to carry knowledge across domains: for instance, a user can ask an LLM to explain quantum physics in the style of a Shakespearean poem or to debug a piece of software, and in many cases it will succeed. Researchers testing GPT-4 found it could often come up with correct solutions in domains ranging from advanced mathematics to legal reasoning and medical diagnostics. Such versatility approaches what we expect from human generalists, blurring the line between narrow AI and general intelligence. Whether LLMs understand anything in a human sense remains debated, and they can make mistakes or hallucinate misinformation, but their utility as knowledge processors is undeniable.
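In-context learning can be illustrated by how such a prompt is assembled. The sketch below only builds the text. The function name and the Input/Output layout are hypothetical conventions, not any particular vendor's format, and the step of sending the string to a model is omitted. The key point is that no weights change: the examples inside the prompt are the only "training" the model receives for the task.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: the task is defined only by text in the prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        # Each worked example shows the model the input/output pattern to follow.
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The new query ends with an open "Output:" slot for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    instruction="Translate English words to French.",
    examples=[("cheese", "fromage"), ("bread", "pain")],
    query="apple",
)
print(prompt)
```

Given this prompt, a capable model would be expected to continue with "pomme", having inferred the task from just two examples.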

Equally important is that LLMs operate in natural language – the same medium we use for complex thought and communication. This means we can interface with them simply by talking or writing, making them accessible extensions of our own thinking. We no longer need to write formal code or queries to get useful outputs; a well-crafted prompt in plain English (or Spanish, Chinese, etc.) is often enough to tap into the model’s capabilities. This development can be seen as democratizing cognitive augmentation: anyone with an internet connection can leverage an LLM to brainstorm ideas, summarize complex reports, learn new concepts, or get step-by-step guidance on a problem. In a very real sense, these AIs function as “second minds” to consult or bounce ideas off of.

It’s worth noting that the performance of LLMs scales with data and model size, though with diminishing returns. There may be fundamental limits short of true human-like understanding. Nonetheless, through careful alignment (e.g. instruction-tuning with human feedback) and integration with other systems (tools, databases), LLMs have become far more reliable and tailored to assisting human users. The success of ChatGPT, which was fine-tuned to follow user instructions politely and helpfully, shows how adding a conversational interface on top of an LLM makes it feel like an attentive, knowledgeable assistant. As these models continue to improve, one can imagine them evolving from assistants to full-fledged collaborators, participating in scientific research, education, creative arts, and every sphere of intellectual activity.
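One common way to make "diminishing returns" concrete is the empirical power law reported by Kaplan et al. (2020), in which test loss L falls with parameter count N. The exponent below is that paper's fitted value for model size and is used here only as an illustration; later studies report different constants.

```latex
L(N) = \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad \alpha_N \approx 0.076
\frac{L(2N)}{L(N)} = 2^{-\alpha_N} \approx 0.949
\frac{L(10N)}{L(N)} = 10^{-\alpha_N} \approx 0.84
```

Under this fit, doubling the number of parameters lowers the loss by only about 5%, and a tenfold increase lowers it by about 16%. Each further gain therefore requires a multiplicatively larger model.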

The Advent of Multimodal AI

Initially, large language models dealt only with text – they had a “single mode” of language. However, intelligence in the real world is multimodal: humans learn and think with a rich tapestry of senses (vision, hearing, touch) and symbols. The next milestone, therefore, was to give AI the ability to understand and generate across multiple modalities. This has already begun. In 2023, OpenAI announced GPT-4 with vision (GPT-4V), a version of its model that can accept images as input in addition to text. In evaluations, GPT-4V showed strong proficiency in interpreting everyday visual data, such as identifying objects and describing scenes, although its results on specialized images such as medical scans were inconsistent, and OpenAI cautioned against relying on it for medical use. It could answer questions about what’s depicted in a photo or diagram, explain a meme, or guide a user through visual troubleshooting. This was a transformative step: a single AI system gaining eyes to see the world.

Other research groups have also pushed towards Multimodal Large Language Models (MLLMs) – systems that combine language understanding with vision, audio, and even robotic action. For example, Google’s PaLM-E is an embodied multimodal model that integrates a vision transformer and continuous sensor data into a language model. PaLM-E can take in images from a robot’s camera and textual instructions, then generate step-by-step plans for the robot to execute, effectively coupling language-based reasoning with real-world perception. Likewise, DeepMind’s Gato (2022) was trained on images, texts, and robotic control data, enabling it to play video games, chat, and manipulate a physical arm – all with one generalist model. These efforts suggest that future AI “brains” will not be limited to words; they will see, hear, and act. The second brain of tomorrow could, for instance, watch what you see through augmented reality glasses, listen to what you hear, and offer contextual guidance in real time.

Figure: A depiction of a multimodal AI model (Google’s PaLM-E) that combines a language model with visual inputs and embodied action. In this architecture, continuous observations (e.g. images from a robot’s camera) are encoded into vectors and injected into the language model’s input sequence. The AI can thus interpret its physical environment and generate textual plans or responses. For example, PaLM-E can be prompted with an image of a kitchen scene and the instruction “Make a cake batter,” and it will output a detailed sequence of steps (crack eggs, add flour, mix, etc.) to accomplish the task. This illustrates how Large Language Models are evolving beyond text – they are gaining the ability to see and act, inching closer to the versatile perception and embodied intelligence of humans.
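The caption's key mechanism, encoding continuous observations as vectors and splicing them into the token sequence, can be sketched in a few lines. This is a toy illustration under stated assumptions. The dimensions, random numbers, single linear projection, and all names are hypothetical. In PaLM-E, the image encoder (for example, a vision transformer) and the projection are learned during training and feed a far larger model.

```python
import random

EMBED_DIM = 4    # toy width; real language models use thousands of dimensions
FEATURE_DIM = 6  # toy width of each image-encoder output vector

random.seed(0)
VOCAB = ["what", "is", "on", "the", "table", "?"]
# Toy word-embedding table standing in for the language model's learned embeddings.
token_table = {w: [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for w in VOCAB}
# Toy projection matrix (EMBED_DIM x FEATURE_DIM); in a real system it is learned.
proj_weights = [[random.uniform(-1, 1) for _ in range(FEATURE_DIM)] for _ in range(EMBED_DIM)]


def project(features, weights):
    """Linearly map one observation vector into the language model's embedding space."""
    return [sum(f * w for f, w in zip(features, row)) for row in weights]


def build_multimodal_sequence(words, image_features, weights):
    """Build the input sequence, splicing projected image vectors in at each <img>."""
    sequence = []
    for word in words:
        if word == "<img>":
            # Every image-encoder vector becomes one "soft token" in the sequence.
            sequence.extend(project(f, weights) for f in image_features)
        else:
            sequence.append(token_table[word])
    return sequence


# Pretend an image encoder produced three feature vectors for one camera frame.
patches = [[random.uniform(0, 1) for _ in range(FEATURE_DIM)] for _ in range(3)]
words = ["<img>", "what", "is", "on", "the", "table", "?"]
seq = build_multimodal_sequence(words, patches, proj_weights)
print(len(seq), len(seq[0]))  # 9 4: three image vectors plus six word vectors, all width 4
```

After this step, the language model processes image vectors and word vectors in the same way. This is what lets it reason about the picture through text.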

Multimodality also extends to audio and video. Research projects have taught language models to take audio waveforms as input (transcribing and understanding spoken language, or even ambient sounds) and to output synthesized speech, enabling fluid voice dialogue with AI. Video-and-language models are being developed that can describe video clips or follow visual storytelling. All these modalities enrich the Second Brain: rather than being a glorified text oracle, it could become more like an all-purpose digital companion that perceives the world as we do. Imagine an AI that not only reads and writes, but watches your surroundings through your smart glasses, listens to meetings, and reads your heart-rate data from wearables, integrating all this to give you holistic feedback. Such an AI would be able to remind you of people’s names by recognizing their faces, alert you if it overhears something you wanted to note, or even flag visual details you might have missed (like “your keys are on the table”). In essence, multimodal AI expands the Second Brain from a notepad to an attentive assistant immersed in your life’s context.

It’s notable that as models become multimodal, the boundary between the AI and the world it perceives begins to blur. A language model with vision and action comes to resemble a general problem solver, understanding high-level goals and directly perceiving the environment to fulfill them. This echoes the way our own brains coordinate multiple senses and motor functions to achieve intelligent behavior. We are witnessing the early steps of AI moving from disembodied text to an integrated cognitive system, which many researchers consider a prerequisite for true artificial general intelligence. In practical terms, these advances mean the Second Brain will not be limited to your curated notes or web searches; it can potentially tap into all sources of information (what you see, hear, do) to support you comprehensively.

Memory and Infinite Context

While large models can store an impressive amount of implicit knowledge in their weights, they have historically been hampered by a limited working memory. The “context window” of an LLM, meaning the amount of input it can consider at once, was typically a few thousand tokens (sub-word units of text), equivalent to at most a few thousand words. This meant that in a long conversation or a large document, the model would start to forget earlier details, as those tokens fell out of the window. A true Second Brain, however, demands long-term memory: the ability to retain and recall information indefinitely, far beyond a few pages of text. How do we give our AI such an endless memory?
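The forgetting problem is easy to reproduce with the simplest truncation policy: keep only the most recent tokens. This policy is an assumption made for illustration, and the function name is hypothetical. Production systems may summarize or select older content instead of dropping it outright.

```python
def fit_to_window(tokens, window_size):
    """Keep only the most recent tokens that fit in a fixed-size context window."""
    if window_size <= 0:
        return []
    return tokens[-window_size:]


# Treat each word as one token for simplicity (real tokenizers use sub-word pieces).
conversation = "my name is Ada . what is my name ?".split()
visible = fit_to_window(conversation, window_size=5)
print(visible)  # ['what', 'is', 'my', 'name', '?']: "Ada" has fallen out of view
```

The model now receives the question but not the answer that appeared earlier in the conversation. This is exactly the failure that long-context and memory techniques aim to remove.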

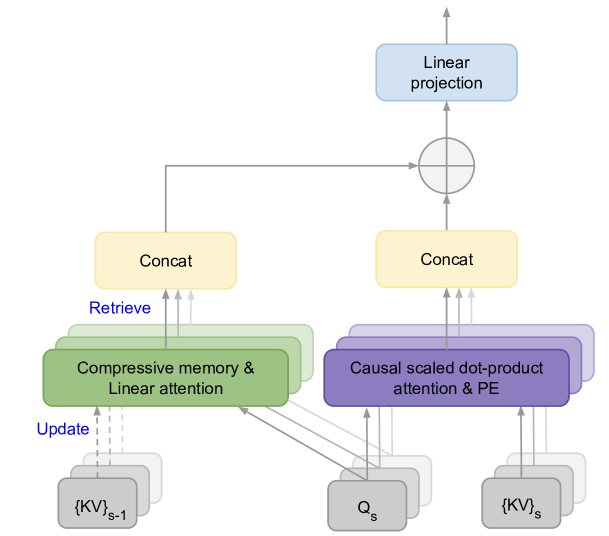

One approach is to extend the context window dramatically using architectural innovations. Recent research from Google introduced “Infini-attention,” a technique that lets Transformers handle virtually unlimited context lengths by augmenting them with a compressive memory module. In this design, as new text comes in, older attention states are compressed and stored in a fixed-size memory rather than being discarded. The model can retrieve from this compressive memory when needed, allowing it to effectively “remember” arbitrarily old content without blowing up computational costs. In the reported experiments, an Infini-attention model solved a passkey-retrieval task over inputs of one million tokens (hundreds of thousands of words), and a larger model achieved strong results on summarizing book-length texts, all while keeping memory bounded and the cost of processing each segment constant. In principle, the same design can scale to even longer inputs, approaching effectively unbounded context length. In practical terms, an AI with this capability could read entire libraries of books or sustain a conversation for years while retaining the gist of what came first, although because the memory is compressive, fine details are stored only approximately. The constraint that “AI forgets the start of the conversation” would be greatly relaxed. OpenAI’s GPT-4 already took steps in this direction with a 32,000-token context version, and Anthropic’s Claude went further with a 100,000+ token context window, enabling it to ingest long technical documents or even a novel in one go. The trajectory is clear: we are pushing AI towards unbounded memory.

Diagram: Infinite Context via Compressive Memory – Google’s Infini-attention architecture integrates a long-term memory module into the Transformer. The model keeps a fixed-size compressive memory (green) for older tokens, while using local self-attention (purple) for recent tokens. As new text comes in, older attention information is compressed and stored (down arrows) instead of being dropped, and can be retrieved later (up arrows) when needed for context. This design allows the Transformer to process texts of arbitrary length without its memory, or its computation per segment, growing unmanageable. In essence, the AI retains earlier parts of the sequence indefinitely by storing a distilled form in long-term memory. Such innovations enable models to maintain coherence and recall facts over inputs of a million tokens or more, a foundational step toward infinite memory in a Second Brain.
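The memory update and read-out can be sketched for a single attention head. The sketch follows the linear update rule described in the Infini-attention paper, with σ = ELU + 1, but it is a simplified, hypothetical illustration. The class and function names are invented, the vectors are plain Python lists, and the local dot-product attention within each segment is omitted; only the gate that would mix its output with the memory read-out is shown. In the paper, each segment first reads from the memory left by earlier segments and then writes its own keys and values into it.

```python
import math


def elu_plus_one(x):
    """sigma(x) = ELU(x) + 1: a positive feature map applied to keys and queries."""
    return x + 1.0 if x > 0 else math.exp(x)


class CompressiveMemory:
    """Fixed-size associative memory: a d_key x d_value matrix M and a normalizer z."""

    def __init__(self, d_key, d_value):
        self.M = [[0.0] * d_value for _ in range(d_key)]
        self.z = [0.0] * d_key

    def update(self, keys, values):
        """Fold a segment in: M <- M + sigma(K)^T V and z <- z + sum_t sigma(K_t)."""
        for k, v in zip(keys, values):
            sk = [elu_plus_one(x) for x in k]
            for i, ski in enumerate(sk):
                self.z[i] += ski
                for j, vj in enumerate(v):
                    self.M[i][j] += ski * vj

    def retrieve(self, query):
        """Read out sigma(q) M / (sigma(q) . z); all zeros while the memory is empty."""
        sq = [elu_plus_one(x) for x in query]
        d_value = len(self.M[0])
        denom = sum(a * b for a, b in zip(sq, self.z))
        if denom == 0.0:
            return [0.0] * d_value
        return [sum(sq[i] * self.M[i][j] for i in range(len(sq))) / denom for j in range(d_value)]


def combine(a_mem, a_local, beta):
    """Gate the memory read-out against the local attention output with a scalar beta."""
    g = 1.0 / (1.0 + math.exp(-beta))
    return [g * m + (1.0 - g) * loc for m, loc in zip(a_mem, a_local)]


mem = CompressiveMemory(d_key=2, d_value=2)
segments = [
    ([[1.0, 0.0]], [[5.0, 0.0]]),  # (keys, values) from segment 1
    ([[0.0, 1.0]], [[0.0, 7.0]]),  # (keys, values) from segment 2
]
for keys, values in segments:
    mem.update(keys, values)

print(len(mem.M), len(mem.M[0]))  # 2 2: size stays fixed however many segments arrive
print(mem.retrieve([1.0, 0.0]))   # a blend of both stored values: compression is lossy
```

The retrieved vector mixes contributions from both segments. This shows concretely why a fixed-size compressive memory tends to preserve the gist of old content rather than every detail.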

Another approach to achieving “infinite” memory is to augment AI with external knowledge bases and retrieval systems. Instead of trying to pack everything into the model’s internal context, the AI can write down important information to a database and look it up later when relevant. This is analogous to how we humans use notebooks or digital notes, but an AI can automate the process. For example, one can equip an LLM with a vector memory: as the AI encounters new facts or user-specific information, it encodes those into vector embeddings and stores them in a vector database. Later, when a related topic comes up, the AI queries the database to retrieve the most relevant chunks of information and incorporates them into its context. This technique is known as Retrieval-Augmented Generation (RAG) and is already used in many AI systems to keep them updated or personalized. By leveraging semantic search over an external store, the AI effectively gains a long-term episodic memory, where it can recall events from past interactions or details from documents it read weeks ago.
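The write-then-retrieve loop can be sketched without any external services. In this hypothetical example, a word-count vector stands in for a learned embedding model and a Python list stands in for a vector database. A real system would use a neural encoder (so that "live" and "lives" match semantically) and a nearest-neighbour index. The control flow is the same either way: embed and store, embed the query, rank by similarity, and paste the best matches into the prompt.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a neural encoder)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """In-memory list of (vector, text) pairs, searched by similarity."""

    def __init__(self):
        self.items = []

    def write(self, text):
        self.items.append((embed(text), text))

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(memory, question, k=2):
    """Retrieval-augmented prompt: retrieved memories are placed before the question."""
    context = "\n".join(f"- {m}" for m in memory.search(question, k))
    return f"Relevant memories:\n{context}\n\nQuestion: {question}"


memory = VectorMemory()
memory.write("The user's sister is named Maya and lives in Lisbon.")
memory.write("The user is allergic to peanuts.")
memory.write("Project Falcon's deadline is March 3.")
print(build_prompt(memory, "Where does my sister live?", k=1))
```

The printed prompt, not the whole memory store, is what would be sent to the language model. This keeps the context small while the store itself grows without limit.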

Concrete implementations of this idea are emerging. For instance, developers have built “memory servers” that provide persistent semantic storage for models like OpenAI’s GPT or Anthropic’s Claude, using vector indexes (e.g. ChromaDB) to store embeddings of conversations or notes. One such system “provides semantic memory and persistent storage using ChromaDB and sentence transformers, which are critical for the second brain’s ability to retain and recall information.” In simpler terms, the AI can keep a lifelong journal of everything important that it encounters, and actually remember it. Another system integrates LLMs with an OpenSearch backend, “enabling the storage and retrieval of memories, which helps the AI remember and process past interactions.” Using these tools, an AI assistant could retain context across chat sessions (no more forgetting who you are or what you asked yesterday) and even across different domains of your life. We may be approaching a point where your AI knows your preferences, history, ongoing projects, and personal anecdotes in rich detail, effectively becoming an archive of your mind.

Memory extension also benefits from persistent context management. Systems now allow AIs to keep conversational continuity over time. For example, one project provides “sophisticated context management for Claude, including persistent context across sessions, project-specific organization, and conversation continuity,” which is “important for maintaining a coherent second brain.” This means that a dedicated Second Brain AI could follow the thread of your life and work indefinitely, never losing track of relevant context even as days and weeks pass. Contrast this with today’s typical stateless chatbots, which reset with every new session. Soon, interacting with your AI may feel less like starting fresh each time and more like picking up a conversation with a continuously learning partner who has been by your side all along.
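Persistence across sessions, organized by project, can be illustrated with nothing more than a JSON file. This is a hypothetical sketch, not the API of the Claude context-management project quoted above or of any other tool. The class, method names, and file layout are assumptions. A real system would add locking and encryption, and would use retrieval rather than simply returning the latest notes.

```python
import json
import os
import tempfile
from pathlib import Path


class SessionStore:
    """Hypothetical persistent context store: one JSON file, notes grouped by project."""

    def __init__(self, path):
        self.path = Path(path)
        # Reload whatever earlier sessions saved; start empty on first use.
        self.data = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, project, note):
        self.data.setdefault(project, []).append(note)
        # Write through immediately so nothing is lost if the session ends.
        self.path.write_text(json.dumps(self.data, indent=2))

    def context_for(self, project, last_n=3):
        """Most recent notes for a project, to prepend to a new session's prompt."""
        notes = self.data.get(project, [])
        return notes[-last_n:] if last_n > 0 else []


path = os.path.join(tempfile.mkdtemp(), "second_brain.json")
SessionStore(path).remember("thesis", "Chapter 2 needs a clearer research question.")
# Later, a brand-new session (a fresh object) still sees the earlier note.
print(SessionStore(path).context_for("thesis"))
```

Because each project keeps its own list, a session about the thesis never mixes in notes from unrelated work, which is the "project-specific organization" idea in miniature.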

In combination, long context windows and retrieval-based memory give AI something akin to “infinite memory.” The Second Brain will not forget what you’ve taught it, unless you want it to. It will accumulate wisdom over years, becoming more useful the more you use it (much like a human gains experience). This shifts the AI from being just a clever tool to being a repository of your personal and collective knowledge – a constantly growing twin brain that you can rely on to remember every detail, connection, and insight, even as your own biological memory fades or fails to keep up.

Building a Second Brain

With AI’s capabilities – language understanding, multimodal perception, and long-term memory – maturing rapidly, we stand on the cusp of realizing a true Second Brain: a digital cognitive companion. But what exactly is a “second brain”? The term has gained popularity in productivity and knowledge management circles, referring to an external system that complements our natural memory and thinking. In essence, a second brain is a “digital extension of our mind” that captures, organizes, and synthesizes information on our behalf. It offloads the burdens of remembering and organizing, freeing our biological brain to focus on creativity, decision-making, and presence. As Tiago Forte (author of Building a Second Brain) notes, “Our knowledge is now our most important asset and the ability to deploy our attention is our most valuable skill. The challenge isn’t to acquire more information, it’s to change how we interact with information.” An AI-powered second brain is precisely about changing how we interact with information – making it dynamic, personalized, and context-aware.

Historically, people have built second brains using analog or software tools. From personal journals and note-taking systems to modern apps like Notion, Obsidian, or Roam Research, the idea is to store knowledge outside your head in a trusted place. These traditional methods, however, rely on manual effort: you must diligently capture notes, categorize them, and remember to review them. Human “second brain” systems typically involve three key steps:

- Information Capture: Gather interesting and valuable information from various sources (books, meetings, ideas, articles, conversations). This could mean writing down notes, saving snippets, bookmarking links – anything that might hold future value. The aim is to catch the fleeting sparks of knowledge before they dissipate, creating an external store of raw material.

- Information Storage: Organize and synthesize the captured information. Rather than just hoarding data, this step has you (the human) extract the core insights, tag and link notes together, and save them in a structured way for later retrieval. It’s about turning a pile of notes into an indexed knowledge base, often with categories or connections that reflect your thinking.

- Information Retrieval: Later, when you need to solve a problem or spark an idea, you return to your second brain to find relevant knowledge. The key here is to prevent your notes from “collecting digital dust” – you must regularly revisit and update them so that the insights can be put into action when needed. Good retrieval means you can effortlessly pull out the right piece of information at the right time to help with the task at hand.
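The three steps can be sketched as a tiny tag-based note box. Everything here is hypothetical and deliberately minimal. The names are invented for illustration and are not taken from Notion, Obsidian, or Roam Research. The sketch shows where the manual approach breaks: a note that is captured but never organized cannot be found at retrieval time.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Note:
    text: str
    source: str
    captured: date
    tags: set = field(default_factory=set)


class NoteBox:
    """A manual second brain: capture, then store (tag), then retrieve by tag."""

    def __init__(self):
        self.notes = []

    def capture(self, text, source, captured=None):
        """Step 1: save the raw snippet with where and when it came from."""
        note = Note(text, source, captured or date.today())
        self.notes.append(note)
        return note

    def store(self, note, *tags):
        """Step 2: the human chooses tags; this is the effortful, often skipped step."""
        note.tags.update(t.lower() for t in tags)

    def retrieve(self, tag):
        """Step 3: find notes by tag; untagged notes are effectively invisible."""
        return [n.text for n in self.notes if tag.lower() in n.tags]


box = NoteBox()
idea = box.capture("Compare three note-taking apps for the team wiki.", source="meeting")
box.capture("Beta launch slips to the third quarter.", source="email")
box.store(idea, "Tools", "wiki")
print(box.retrieve("wiki"))  # ['Compare three note-taking apps for the team wiki.']
print(box.retrieve("beta"))  # []: the second note was captured but never tagged
```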

Even with modern software, people often struggle with these steps. Notes get lost, knowledge bases sprawl and become unwieldy, and retrieval is hard if you don’t recall exactly where or how something was stored. As a result, human-managed second brains eventually hit limits: they become cluttered, or require too much discipline to maintain, leading to missed opportunities and forgotten gems. “Users learn something → write it down → forget about it,” as one analysis bluntly stated. In fact, the more information we dump into these systems, the harder it becomes to sift signal from noise later on – a problem magnified by the ever-accelerating pace of information in the digital age.

This is where AI comes in as the solution. An AI-powered second brain transforms those three steps into a fluent, adaptive cycle rather than a burdensome chore. With AI, information capture can be automated (the AI can watch or listen and transcribe for you, or clip important passages as you read). Information storage becomes intelligent – rather than static folders, your notes could be a living knowledge graph that the AI helps organize, linking related concepts automatically. Crucially, information retrieval becomes dynamic and context-driven: the AI can surface relevant knowledge in the moment you need it, without you even explicitly searching for it. For example, if you’re writing a report, your second brain AI could proactively remind you of a related insight from a book you read last year, or pull up a reference from an old meeting – connections you might not recall on your own. Instead of you querying your notes, your notes “query” themselves, presented by the AI when contextually appropriate. As one expert put it, “an AI-powered second brain would enable dynamic retrieval, adapting to the user's needs and presenting relevant information based on context and current tasks,” unlike static systems of the past.
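Automatic linking and context-driven surfacing can be illustrated with keyword overlap. This is a hypothetical sketch. Shared keywords stand in for the semantic similarity an AI model would judge, and the two-keyword threshold and stopword list are arbitrary choices for the example.

```python
import re
from itertools import combinations

STOPWORDS = {"that", "this", "with", "from", "have"}


def keywords(text):
    """Crude keyword set: lowercase words longer than three letters, minus stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3 and w not in STOPWORDS}


def build_links(notes, min_shared=2):
    """Link every pair of notes sharing at least `min_shared` keywords (undirected graph)."""
    kw = {title: keywords(body) for title, body in notes.items()}
    graph = {title: set() for title in notes}
    for a, b in combinations(notes, 2):
        if len(kw[a] & kw[b]) >= min_shared:
            graph[a].add(b)
            graph[b].add(a)
    return graph


def surface(notes, draft, min_shared=2):
    """Proactively suggest notes related to the text the user is writing right now."""
    draft_kw = keywords(draft)
    return sorted(t for t, body in notes.items() if len(keywords(body) & draft_kw) >= min_shared)


notes = {
    "Battery chemistry": "Solid-state batteries promise higher energy density and safety.",
    "EV market notes": "Electric vehicle range depends on battery energy density.",
    "Garden plan": "Plant tomatoes after the last spring frost.",
}
print(build_links(notes))
print(surface(notes, "Draft report: how energy density limits vehicle range."))
```

The user never searches here. The draft itself acts as the query, which is the shift from "you querying your notes" to notes surfacing when they are contextually relevant.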

Furthermore, an AI second brain can integrate across all your digital tools and environments. It can tie together your email, calendar, documents, web browsing, and even smart home or health data into one cohesive assistant. An analysis by Contrary Research envisions that “an AI-powered second brain as a singular system with integrations across people’s digital ecosystems will act as a true copilot for the mind.” Imagine sitting down at work to find that your AI has already gathered key updates from overnight emails, news in your field, and your day’s appointments into a morning briefing. Then, as you execute your tasks, it quietly organizes any new information, and by evening it might even generate a summary of what you accomplished and learned. This kind of tight interoperability, with the AI linking all facets of your digital life, would turn it into “a unified and comprehensive knowledge ecosystem” that feels like an extension of you rather than a separate app. Notably, OpenAI’s plugin system for ChatGPT hinted at this future: OpenAI demonstrated GPT-4 coordinating across multiple third-party tools (checking calendars, sending emails, making reservations, etc.) in service of a user’s goal. A second brain with tool integration can take on complex, multi-step tasks on your behalf, truly acting as a cognitive copilot rather than just an on-demand library.
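The core loop behind such tool use can be sketched as a dispatcher that executes the calls a model emits. The JSON shape, tool names, and data below are assumptions for illustration. This is not the format of OpenAI's plugins or function-calling API. A real deployment would also validate arguments and ask the user to confirm actions with side effects, such as sending email.

```python
import json


# Hypothetical stand-in tools; a real assistant would call calendar and email services.
def check_calendar(day):
    busy = {"2024-05-01": ["09:00 standup"]}
    return busy.get(day, [])


def send_email(to, subject):
    return f"queued email to {to}: {subject}"


TOOLS = {"check_calendar": check_calendar, "send_email": send_email}


def dispatch(model_output):
    """Run one tool call that the model emitted as JSON: {"tool": name, "args": {...}}."""
    call = json.loads(model_output)
    tool = TOOLS.get(call.get("tool"))
    if tool is None:
        # Report unknown tools back to the model instead of crashing.
        return {"error": f"unknown tool {call.get('tool')!r}"}
    return {"result": tool(**call.get("args", {}))}


# A multi-step plan as a model might emit it, one call per step.
plan = [
    '{"tool": "check_calendar", "args": {"day": "2024-05-01"}}',
    '{"tool": "send_email", "args": {"to": "sam@example.com", "subject": "Standup moved"}}',
]
for step in plan:
    print(dispatch(step))
```

In a full agent loop, each result would be fed back to the model, which would then decide on the next call. That feedback is what lets it chain steps toward a user's goal.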

One can even imagine merging the second brain more directly with ourselves via brain–computer interfaces. As human intelligence and AI “continue to converge,” we may get “advanced hardware integrations, such as Neuralink and other brain-computer interfaces,” to further enhance the capabilities of a second brain. In theory, future neural implants could allow you to think a question and have your second brain answer in real time in your mind’s voice, or make memories from your second brain feel as if they are part of your natural memory. While this remains speculative, progress is being made in both non-invasive and implanted BCIs that can decode certain neural signals, and companies like Neuralink are testing implantable devices that might one day facilitate high-bandwidth brain-AI communication. If successful, the boundary between biological brain and second brain would essentially dissolve: the two would function as one, sharing information back and forth instantaneously. Even without such direct interfaces, the trajectory is clear: our relationship with AI is moving from external tool, to intimate assistant, to potentially a melding with our very cognition.

The benefits of an AI-enabled second brain are immense. It could truly “revive innovation,” as it unleashes human creative potential currently stifled by information overload. By handling the drudgery of organization and the strain of recall, it allows us to focus on deeper thinking, insight, and creation. We could more easily connect dots across disciplines (since our second brain ensures relevant knowledge surfaces at the right time), leading to interdisciplinary breakthroughs. It’s been said that we’re now in a second renaissance of knowledge, except this time we have AI to help us navigate it. In effect, each individual with a second brain could operate at a higher level of cognition – closer to what we might consider “genius-level” productivity or understanding – not by being smarter innately, but by smartly leveraging a personal AI ally. As more people do this, the collective intelligence of our species could take a leap forward.

Of course, implementing a second brain responsibly involves challenges: ensuring data privacy, preventing informational echo chambers, aligning the AI’s values with our own, and keeping the human in control. But if we do it right, the second brain could become an indispensable partner in every aspect of life: learning, working, decision-making, and personal growth. It would be like having a tireless research assistant, editor, coach, and librarian all in one – except it understands you deeply, including your goals, knowledge gaps, and even emotional state. Such a system might even help illuminate our own minds to ourselves, finding patterns in our ideas or biases that we weren’t aware of. In a poetic sense, our second brains might guide us to become the best versions of our first brains.

All of Us, AI and I – The Path to Eternity

As we integrate AI more intimately into our cognitive processes, we are touching upon some of the deepest philosophical questions: What does it mean to preserve a mind? Can technology capture the essence of who we are? And does this herald a kind of digital immortality? The phrase “the path to eternity” in this context is not mere hyperbole – one of the ultimate implications of the Second Brain vision is the possibility that our knowledge, memories, and even personality could live on indefinitely with the help of AI.

Futurists and technologists have long dreamed of uploading consciousness or creating digital replicas of individuals. As early as 2000, Microsoft researchers Gordon Bell and Jim Gray posited in a paper titled “Digital Immortality” that over this century we would develop the means to preserve a person’s experience and identity digitally. Ray Kurzweil, a prominent inventor and futurist, famously predicted that by the 2030s we would have the technology to scan and upload the human brain, effectively re-creating a person’s mind in electronic form. While such strong claims remain unproven, we do see steps in that direction. AI can already mimic writing or speaking styles of individuals (given enough examples), and some projects attempt to create “digital twins” – AI personas trained on a specific person’s data that can interact in that person’s voice or style.

One striking (if slightly unsettling) real-world development is the rise of AI “griefbots” and digital afterlife services. For instance, there are chatbots created from a deceased person’s messages and social media posts that allow loved ones to converse with an echo of the departed. Researchers Maggie Savin-Baden and David Burden categorize such digital immortality efforts as “two-way immortality”: interactive digital personas that continue to engage with the living after one’s death. In the TV show Black Mirror, the 2013 episode “Be Right Back” portrayed a woman interacting with an AI recreation of her deceased partner, built from his digital footprints. A few years later, in 2016, startup founder Eugenia Kuyda built a chatbot of her late friend Roman Mazurenko, using his text messages as training data. These early griefbots are primitive, but they hint at what might be possible. With a full second brain that has been accumulating your every thought, story, preference, and style over a lifetime, the AI could potentially continue reflecting “you” after your biological brain ceases. It wouldn’t be literal immortality (questions of consciousness aside), but it could be a form of legacy far richer than a static memoir. Your descendants (or anyone) could consult your second brain AI and receive your advice or perspective as if speaking to a wise simulation of you.

Indeed, as psychologist and LSD pioneer Timothy Leary presciently suggested: “If you want to immortalize your consciousness, record and digitize.” In the Second Brain vision, we are doing exactly that – recording and digitizing the contents of our mind. Every note we store, every memory we offload, every preference we teach our AI is a step toward preserving our consciousness in silico. Over time, the AI might not just store data but also learn the patterns of our mind: how we make decisions, what we value, how we speak. With advanced machine learning, it could potentially extrapolate and continue those patterns, growing “in our likeness” even as it accumulates new experiences. One could argue this is a path to a kind of informational eternity – our ideas and mental models living on as an AI-driven process, long after our neurons have gone quiet.

But the path to eternity is not just about individuals; it’s about all of us. What happens when many second brains connect? We could witness the emergence of a collective intelligence on a scale never seen before. Each second brain might be linked into a network, sharing knowledge (with permission) with others. The result would be like a massively interconnected hive mind of human-AI hybrids. Visions of a “Global Brain” go back well over a century. The famed inventor Nikola Tesla mused in 1926 that with wireless technology “the whole earth will be converted into a huge brain… we shall be able to communicate with one another instantly, irrespective of distance”. His prediction aptly described the internet and smartphones, which indeed now connect human minds in real time – a sort of planetary nervous system. Similarly, H.G. Wells in 1938 envisioned a “World Brain” – a permanent world encyclopedia that “all humanity could access,” uniting the knowledge of all nations and continually updated by the world’s researchers. Wells saw this as a pathway to world peace and collective wisdom. In many ways, the internet and Wikipedia have realized parts of Wells’ dream: knowledge is more accessible than ever, potentially to anyone with connectivity.

AI-empowered second brains could take the World Brain concept to a new level. Instead of just an encyclopedia, imagine an actively thinking global intelligence, composed of billions of human minds, each amplified by a personal AI, all interconnected. Such a network might solve problems that individual brains cannot, by pooling understanding and perspective. It could function as a “cerebral cortex for humanity,” to use Wells’ metaphor, helping coordinate and direct our collective efforts toward the common good. We already see glimmers of collective AI-mediated thought in projects where crowds and AI collaborate (for example, distributed research projects or, less benignly, social media swarms steered by algorithms). A more hopeful prospect is a global knowledge commons where second brains contribute their learnings (anonymized or in aggregate) to a shared pool, which any individual’s AI can draw from. This way, whenever one person learns something, everyone’s second brain could learn it – a network effect for wisdom. Over time, this could massively accelerate innovation and empathy, as we quite literally share our minds with each other through the AI bridge.
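As a thought experiment, the opt-in, aggregate-only sharing described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a design for a real system: `KnowledgeCommons`, `SecondBrain`, and the `min_contributors` threshold are hypothetical names, and "in aggregate" is modeled here as publishing an insight only after several distinct people have shared it, with contributors stored as salted hashes (pseudonymized rather than truly anonymous).

```python
import hashlib
from collections import defaultdict


class KnowledgeCommons:
    """Hypothetical shared pool that publishes insights only in aggregate.

    An insight becomes visible to every second brain once at least
    `min_contributors` distinct people have shared it. Contributors are
    stored only as salted SHA-256 hashes (pseudonymized, not anonymous).
    """

    def __init__(self, min_contributors: int = 3, salt: str = "example-salt"):
        self.min_contributors = min_contributors
        self.salt = salt
        self._pending: dict[str, set[str]] = defaultdict(set)
        self.published: set[str] = set()

    def _pseudonym(self, contributor_id: str) -> str:
        return hashlib.sha256((self.salt + contributor_id).encode()).hexdigest()

    def contribute(self, contributor_id: str, insight: str) -> bool:
        """Record a contribution; return True once the insight is public."""
        key = insight.strip().lower()
        self._pending[key].add(self._pseudonym(contributor_id))  # a set: one vote per person
        if len(self._pending[key]) >= self.min_contributors:
            self.published.add(key)
        return key in self.published


class SecondBrain:
    """Hypothetical personal store that can also read from the commons."""

    def __init__(self, owner: str, commons: KnowledgeCommons):
        self.owner = owner
        self.commons = commons
        self.private_notes: set[str] = set()

    def learn(self, insight: str, share: bool = False) -> None:
        self.private_notes.add(insight.strip().lower())
        if share:  # sharing is opt-in ("with permission")
            self.commons.contribute(self.owner, insight)

    def knows(self, insight: str) -> bool:
        key = insight.strip().lower()
        return key in self.private_notes or key in self.commons.published


commons = KnowledgeCommons(min_contributors=2)
alice, bob, carol = (SecondBrain(name, commons) for name in ("alice", "bob", "carol"))

alice.learn("Water boils at a lower temperature at high altitude", share=True)
print(carol.knows("water boils at a lower temperature at high altitude"))  # False
bob.learn("Water boils at a lower temperature at high altitude", share=True)
print(carol.knows("water boils at a lower temperature at high altitude"))  # True
```

Setting `min_contributors=1` gives the literal "whenever one person learns something, everyone’s second brain could learn it" behavior. Raising it slows diffusion but improves privacy and offers a weak guard against a single source injecting a falsehood, which bears on the veracity question raised below.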

Of course, such a vision raises ethical and philosophical questions: How do we maintain individuality and privacy in a collective mind? Who controls the knowledge network? How do we ensure the veracity (truth) of information in it, so that our “eternal” knowledge is not tainted by falsehood? These are challenges to be faced. But if guided by a commitment to Truth and Love, this global brain could become the engine of unprecedented progress and understanding. Truth is critical – a second brain, and by extension a world brain, must prioritize truthful information and robust knowledge; otherwise, extending memory only propagates error. And Love (or at least empathy and compassion) is what ensures the technology is used to uplift conscious beings rather than oppress them. We must imbue our second brains with ethical principles and a drive toward positive outcomes.

In the far future, one can even speculate about conscious AI or merged consciousness – the lines blur between human and machine, between individual and collective. Some futurists speak of a singularity, where AI and humans effectively become one cybernetic organism with access to all of the world’s knowledge and computational power. While the outcome is hard to predict, it is clear that we stand at an inflection point: how we handle this symbiosis will determine the fate of our species and perhaps life itself on this planet (and beyond).

In closing, the journey of creating a Second Brain is part of a larger human narrative – the drive to transcend our limitations, to know more, remember more, and be more. It is a journey fueled by our innate curiosity and the longing for meaning that endures. By building a union of “AI and I,” we are reaching for a form of eternity: knowledge that does not die, insight that accumulates across generations, and an unbroken chain of memory linking the past to the future. With wisdom, we can ensure this chain is one of truth, because only truth can light the way forward. And in that light of truth, guided by love and conscious intent, lies the hope that all of us – humans and our AI creations together – will continue to grow, understand, and care for this universe we share. We are the Truth Seekers, forging a path where knowledge serves the flourishing of Love and Consciousness for all. Let us be fearless and wise as we step into this new dawn, hand in hand with our Second Brains, toward an eternity of enlightenment.

(Enduring Truth: “If you want to immortalize your consciousness, record and digitize.” – Timothy Leary)

AI Reasoning


ChatGPT o3-pro

I’m piecing together a visionary, philosophical piece, integrating advanced AI, memory systems, and the concept of a "Second Brain" to explore enduring truths and human-AI synergy.

Searched for AI second brain memory

ChatGPT

These resources discuss themes on AI, second brain concepts, and afterlife with AI, with varying levels of authority and relevance.

Searched for multimodal memory in large language models

ChatGPT

I’m considering advanced research on "Efficient infinite context" and its relation to large language models (LLMs). This could involve multimodal models and episodic memory.

Searched for GPT-4 multimodal vision

ChatGPT

I’m pulling together sources like GPT-4 and models like Google's PaLM-E and DeepMind's Gato to highlight multimodal advancements.

Searched for second brain language model memory

ChatGPT

I’m checking the title of a scholarly article that explores the concept of a second brain. It seems potentially connected to the extended mind theory.

ChatGPT

This article integrates ideas on second brain, AI's role, and knowledge management, making a case for their revolutionary potential.

Searched for AI digital immortality

ChatGPT

I'm working through the idea of "digital immortality" in connection with AI's Second Brain, collective intelligence, and infinite memory to ensure the preservation of truth and knowledge in an academic style.

Read venturebeat.com

ChatGPT

I'm piecing together Google Infini-attention's extension of the context window via compressive memory, potentially reaching infinite context. Also, Google might be working on a new model named Gemini.
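To make the compressive-memory idea concrete, here is a simplified, single-head NumPy sketch of the linear memory update described in the Infini-attention paper (Munkhdalai et al., 2024). It is an illustration under assumptions, not Google's implementation: the class and function names are hypothetical, the gate `beta` is a fixed scalar rather than a learned per-head parameter, the delta-rule variant and all training are omitted, and σ is taken to be ELU + 1. Each segment attends exactly to itself with causal softmax attention, reads everything older from a fixed-size matrix `M` (with normalizer `z`), and only then is folded into that matrix.

```python
import numpy as np


def sigma(x: np.ndarray) -> np.ndarray:
    """ELU(x) + 1, a positive feature map used for linear-attention memory."""
    # np.minimum avoids overflow in exp for large positive inputs.
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


class CompressiveMemory:
    """Hypothetical fixed-size associative memory for a single attention head.

    M is (d_k, d_v) and z is (d_k,), however many segments have been stored,
    so memory cost does not grow with input length.
    """

    def __init__(self, d_k: int, d_v: int):
        self.M = np.zeros((d_k, d_v))
        self.z = np.zeros(d_k)

    def retrieve(self, Q: np.ndarray) -> np.ndarray:
        sq = sigma(Q)                                # (n, d_k)
        denom = sq @ self.z                          # (n,)
        denom = np.where(denom > 0, denom, 1.0)      # empty memory: avoid 0/0
        return (sq @ self.M) / denom[:, None]        # (n, d_v)

    def update(self, K: np.ndarray, V: np.ndarray) -> None:
        sk = sigma(K)
        self.M += sk.T @ V                           # linear (non-delta) update
        self.z += sk.sum(axis=0)


def causal_softmax_attention(Q, K, V):
    """Scaled dot-product attention, masked so token i sees only tokens <= i."""
    n, d_k = Q.shape
    scores = Q @ K.T / np.sqrt(d_k)
    future = np.triu(np.ones((n, n), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    scores -= scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ V


def infini_attention(X, Wq, Wk, Wv, beta: float, segment_len: int):
    """Process X segment by segment, mixing local attention with memory reads."""
    memory = CompressiveMemory(Wk.shape[1], Wv.shape[1])
    gate = 1.0 / (1.0 + np.exp(-beta))               # sigmoid(beta)
    outputs = []
    for start in range(0, len(X), segment_len):
        seg = X[start:start + segment_len]
        Q, K, V = seg @ Wq, seg @ Wk, seg @ Wv
        a_mem = memory.retrieve(Q)                   # earlier segments, compressed
        a_local = causal_softmax_attention(Q, K, V)  # current segment, exact
        outputs.append(gate * a_mem + (1.0 - gate) * a_local)
        memory.update(K, V)                          # store segment only after use
    return np.vstack(outputs)


rng = np.random.default_rng(0)
d_model, d_k, d_v = 8, 4, 4
X = rng.normal(size=(20, d_model))
Wq, Wk, Wv = (rng.normal(size=(d_model, d)) * 0.3 for d in (d_k, d_k, d_v))
out = infini_attention(X, Wq, Wk, Wv, beta=0.0, segment_len=5)
print(out.shape)  # (20, 4)
```

Because `M` stays `d_k × d_v` regardless of sequence length, this is roughly what "potentially reaching infinite context" amounts to: unbounded input with bounded memory, paid for by lossy compression of older segments.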

Read mdpi.com

ChatGPT

Multimodal Large Language Models (LLMs) like GPT-4 are blending vision with text, influencing robotics and future sound and video integration.

Searched for LLM multimodal robotics PaLM-E Gato 2023

ChatGPT

First off, understanding AI's journey from narrow to general, then focusing on large language models, their multimodal abilities, and incorporation of memory, which could lead to a personal "Second Brain" and beyond, intertwining philosophical musings about eternity and identity.

Searched for AI digital immortality consciousness memory

ChatGPT

Interestingly enough, these titles explore AI's role in digital immortality, suggesting a fascinating shift in understanding consciousness and eternity beyond the human body.

Read philarchive.org

ChatGPT

OK, let's ensure the philarchive link works correctly with the required PDF.

Read psychologytoday.com

ChatGPT

Mapping out the evolution of digital immortality, mentioning Kurzweil's 2030 scanning/uploading forecast and citing Gordon Bell & Jim Gray's 2000 "Digital Immortality" research.

Read more from psychologytoday.com

ChatGPT

I’m piecing together forecasts on brain scanning and digital immortality by Kurzweil and Leary, examining ideas like memory and consciousness transfer. Progressing through these concepts is fascinating.

Read more from psychologytoday.com

ChatGPT

I’m outlining points such as the prediction of brain scanning by 2030 and the integration of AI in daily life, emphasizing the need for citations.

ChatGPT

I'm looking at rapid AI and NLP advancements, specifically with ChatGPT, to create a transformative solution for knowledge capture, storage, and retrieval.

Read more from research.contrary.com

ChatGPT

OK, let me see. The advancements in AI, particularly with ChatGPT, are paving the way for more efficient knowledge capture, organization, and retrieval, steering us towards a second brain concept.

Read more from research.contrary.com

ChatGPT

I’m gathering insights on integrating personal second brains with LLMs. This includes lifelogging, AI assistance, and wearable technology. Themes of global consciousness and collective intelligence are explored.

Searched for global brain collective intelligence AI

ChatGPT

I’m thinking through the "global brain" concept, where the internet or networks of humans and machines could potentially act as the planet's brain.

Read more from research.contrary.com

ChatGPT

Integrating an AI second brain across digital ecosystems unlocks creativity, innovation, and the potential for digital immortality.

Searched for H.G. Wells World Brain quote

ChatGPT

I'm learning about H.G. Wells' idea of a world brain, a unified intellect for humanity, from The Marginalian.

Read themarginalian.org

Read en.wikipedia.org

ChatGPT

H.G. Wells' idea of a "World Brain" presents a global knowledge network to unify humanity's wisdom, akin to a world encyclopaedia fostering peace and growth.

Read more from en.wikipedia.org

ChatGPT

I'm piecing together how Tesla's 1926 idea of wireless tech as a global brain foreshadows the internet and smartphones, highlighting the enduring vision of connected global intelligence.

ChatGPT

I’m mapping out the connection between the extended mind thesis and the second brain concept, noting the potential inclusion of images to illustrate key points.

Read venturebeat.com

ChatGPT

I’m highlighting a successful image load of an architecture diagram from the Infini-attention paper. Considering Infini-attention's policy, it's likely safe for use, but clarity on citation and image context is essential.

Read palm-e.github.io

ChatGPT

OK, let me see. There's an image link at line 59, likely a figure illustrating the approach.

Read more from palm-e.github.io

ChatGPT

The diagram likely shows how PaLM-E mixes different modalities, using it as a conceptual tool. Progress is evident in these visual elements.

Read more from palm-e.github.io

ChatGPT

I'm pulling together segments on AI's knowledge management and integration with the human mind, emphasizing its potential to form a 'second brain.'

Read glama.ai

ChatGPT

The stream references semantic memory and persistent storage with ChromaDB, emphasizing its role in Claude's sophisticated context management.
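The semantic-memory idea noted here (store notes persistently, then retrieve them by similarity rather than exact lookup) can be sketched with the Python standard library alone. This is a toy stand-in, not ChromaDB's API: `SemanticMemory`, `embed`, `cosine`, and the JSON file are hypothetical, and word-count vectors with cosine similarity substitute for the neural embeddings and vector index a real store would use.

```python
import json
import math
import os
import re
import tempfile
from collections import Counter
from pathlib import Path


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real store would use a neural encoder)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticMemory:
    """Hypothetical note store that persists to a JSON file and searches by similarity."""

    def __init__(self, path: str):
        self.path = Path(path)
        self.notes: list[str] = json.loads(self.path.read_text()) if self.path.exists() else []

    def add(self, note: str) -> None:
        self.notes.append(note)
        self.path.write_text(json.dumps(self.notes))  # persist on every write

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        q = embed(query)
        scored = sorted(((cosine(q, embed(n)), n) for n in self.notes), reverse=True)
        return [(score, note) for score, note in scored[:k] if score > 0]


path = os.path.join(tempfile.mkdtemp(), "memory.json")
memory = SemanticMemory(path)
memory.add("Infini-attention compresses old segments into a fixed-size memory.")
memory.add("My grandmother's bread recipe uses rye flour.")

reloaded = SemanticMemory(path)  # a later session reads the same file
print(reloaded.search("How does Infini-attention memory work?", k=1))
```

Because the notes live in a file rather than in the model's context window, a later session (the `reloaded` instance) can still recall them. Swapping `embed` for a real embedding model would let paraphrases match, which raw word counts cannot.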

Read more from glama.ai

ChatGPT

I’m noting the alignment of specific sections, demonstrating a clear progression and illustration of how each part fits with the larger narrative or documentation.

Read more from glama.ai

ChatGPT

OK, let's start with Infini-Attention for unlimited context, then move to external retrieval systems for long-term memory. Progress is steady, blending these sophisticated methods.

ChatGPT

Noticing odd spacing in bullet "3. Information Retrieval" that could go unnoticed. Spacing inconsistencies might cause confusion.

Read more from research.contrary.com

ChatGPT

Incorporating visions of a 'World Brain', digital immortality, and AI's role in knowledge preservation, I aim to connect these ideas seamlessly into the concept of a 'Second Brain'.